I thought I'd mention the latest earnings roundup, starting with Gelsinger's stewardship of Intel, courtesy of Anandtech. Year-over-year, team blue's revenue is down $3.9 billion, with an operating loss of $175 million. (Technically, they were in the black, thanks to a tax benefit.) It's not as bad as it was, but it's hardly the big turnaround that many analysts had expected after Gelsinger was named CEO.

Notably, the Client Computing Group was down 17%.

Now, for comparison, let's check in with AMD and Lisa Su's endeavors. Unfortunately for team red, preliminary results, again courtesy of Anandtech, show that they missed guidance by $1.1 billion.

The massive Embedded gains come from the Xilinx merger. Again, the Client group stands out, taking a heavy hit at -40%, even worse than Intel.

While we don't have Jensen's team green results for this quarter yet, last quarter they missed forecasts by $1 billion, with sales significantly impacted just like the rest of the industry.

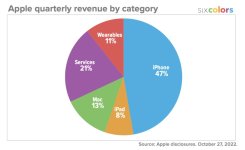

I'm sure most of us already know that the fruit company also released results, this time courtesy of Jason Snell over at Six Colors, with many useful charts. Unlike others in the tech industry, Apple beat analyst forecasts, with $90.15 billion in revenue and $20.7 billion in profit.

The big standout for the quarter was Mac revenue, which hit an all-time high of $11.5 billion, giving the 38-year-old platform 25% year-over-year growth.

I highlight the Mac because the transition to Apple Silicon is mostly complete, excluding the low-volume Mac Pro and a single token Intel Mac mini. That means most early adopters have already made the switch, so a mad dash among hardcore users isn't responsible for that growth. While it's only a single data point, according to Steam, Apple Silicon should surpass Intel among Mac gamers this month.

According to Tim Cook, nearly half of Mac purchases went to customers who are new to the Mac.

When the switch to Apple Silicon was first announced, a lot of Mac users were upset, claiming that dropping Intel and losing x86 compatibility would doom the platform, but the opposite appears to be true. Switching to AMD likely wouldn't have changed the equation, either. I highlighted the specific results above because of these basic revenue statistics:

Intel's Client group: -17%

AMD's Client group: -40%

Mac sales growth: +25%

I think it's fair to say that Apple proved its detractors wrong and that the transition to Apple Silicon was an extraordinary success. It's amazing how many times I hear that Apple can't do something, and then they go and do it.

Finally, we've often discussed how power consumption is getting out of control, and how, thanks to the switch to Apple Silicon, the Mac is the only platform keeping it in check. Recently, AMD admitted that power usage is increasing faster than process advancements can make up for, according to Sam Naffziger, AMD Vice President of Product Technology Architecture.

Intel, AMD and Nvidia are fighting each other in a death spiral that's eventually going to catch up with them. As long as they win benchmarks by 2%, power usage be damned, all while hiding behind fairytale power consumption specifications. Considering the performance of Resident Evil Village on Apple Silicon, gaming, the one last bastion of the PC, may have had its defenses breached. For the foreseeable future, the PC guys are going to keep pushing the hardware with ever-increasing wattage until something breaks.