diamond.g

Elite Member · Joined Dec 24, 2021 · Posts: 1,062

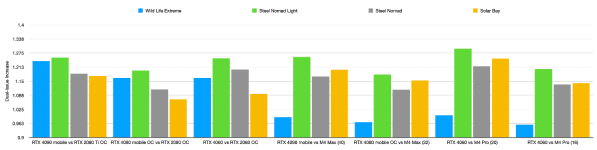

Your system has the same average score as a 3060 laptop. The 5060 laptops are averaging 2500. The M3 Ultra is equivalent to a 4070 Ti Super (500 points away from the 5090 notebook).

Modern gaming laptops? Sure. I wonder about laptops of the same age. Even many modern non-gaming laptops get a lower score than yours.