As you all know, during the early days of SSDs, Anand Shimpi of anandtech.com emphasized the need to include 4k random reads and writes in benchmark testing, since those are more reflective of typical boot drive usage than the values for large sequential R/Ws (which the SSD companies love to quote because they are the largest numbers).

However, with Apple Silicon's (AS's) 16 KiB page size, I'm wondering if looking at the 4 KiB values (which, thanks in large part to Shimpi, have become the standard for benchmark testing) understates the performance of AS SSDs, since the figures I'm seeing for those, even with the latest M3, are slower than those on my 2019 Intel iMac (see screenshots below). OTOH, comparing 16 KiB on AS to 16 KiB on x86 may be unfair to the latter. If both hold, then one can no longer use these small random R/W benchmarks to do cross-platform comparisons.

I.e., the idea is that 4 KiB blocks unfairly favor x86 over AS, and vice versa for the 16 KiB blocks.

I wrote to the developer of Amorphous Disk Mark to ask whether that's the case, and he disagreed*, saying that 4 KiB is meant as the worst case, and thus it's worst case for both. But I'm not sure I agree. For instance, couldn't you test, say, 1 KiB on x86, and wouldn't that present an even worse case than 4 KiB? If so, then 4 KiB isn't the worst case on x86; rather, it's the worst meaningful case, because of x86's 4 KiB block sizes. And wouldn't that make 16 KiB the worst meaningful case on AS, such that, if you wanted apples-to-apples, you'd need to test the worst meaningful cases on each, which would be 4 KiB and 16 KiB, respectively? This would, of course, preclude cross-platform comparisons, as mentioned above.
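For anyone who wants to try the 1 KiB vs. 4 KiB vs. 16 KiB comparison themselves, here is a rough Python sketch of a random-read test at several block sizes. The function names (`random_read_throughput`, `make_test_file`), the 16 MiB file size, and the read count are my own placeholders, not anything taken from Amorphous Disk Mark. It also does not bypass the OS file cache, so on a freshly written file it will mostly measure cached reads rather than the SSD itself; treat it as an illustration of the method, not a substitute for a real benchmark tool.

```python
import os
import random
import tempfile
import time


def random_read_throughput(path, block_size, n_reads=2000, seed=0):
    """Return MB/s for n_reads random, block-aligned reads of block_size bytes."""
    rng = random.Random(seed)
    n_blocks = os.path.getsize(path) // block_size
    fd = os.open(path, os.O_RDONLY)
    try:
        total = 0
        start = time.perf_counter()
        for _ in range(n_reads):
            # Pick a random offset aligned to the block size being tested.
            offset = rng.randrange(n_blocks) * block_size
            total += len(os.pread(fd, block_size, offset))
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return total / elapsed / 1e6


def make_test_file(size_bytes):
    """Create a temporary file filled with random bytes and return its path."""
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(os.urandom(size_bytes))
        return f.name


if __name__ == "__main__":
    path = make_test_file(16 * 1024 * 1024)  # hypothetical test size
    try:
        for kib in (1, 4, 16):
            mbps = random_read_throughput(path, kib * 1024)
            print(f"{kib:>2} KiB random read: {mbps:8.1f} MB/s")
    finally:
        os.remove(path)
```

Getting numbers that reflect the drive rather than RAM would need a file much larger than memory or cache-bypassing I/O, which is what the dedicated benchmark apps handle for you.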

*Here's the reply from Hidetomo Katsura:

right. in some cases, i did observe that [low 4k values on AS] was the case even though it shouldn't since the app is requesting one 4KiB block at a time. it could also be an optimization in the storage driver or file system to read 16KiB no matter how small the requested size is.

you can observe the 16KiB block size access for the 4KiB measurement using the iostat -w1 command. or maybe it was a bug (or behavior) on an earlier macOS version with the M1 chip support. maybe Apple fixed it in newer macOS versions.

anyways, it really doesn't matter though. it's measuring the "worst case" minimum block size disk access request performance. if it's slower on Apple silicon Macs, it's slow in real world on Apple silicon Macs.

-katsura
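To put numbers on the possibility Katsura raises (the storage stack fetching 16 KiB for every 4 KiB request), here is a small Python sketch of the arithmetic. The function names and the 50 MB/s input are placeholders I made up for illustration, not measurements from any machine in this thread, and I'm assuming the benchmark's MB means 10^6 bytes.

```python
def device_throughput_mb_s(reported_mb_s, requested_kib=4, fetched_kib=16):
    """Estimate the data rate the drive would actually move if every request
    of requested_kib were served by fetching fetched_kib (hypothetical)."""
    amplification = fetched_kib / requested_kib
    return reported_mb_s * amplification


def iops(reported_mb_s, block_kib):
    """Convert a benchmark's MB/s figure into I/O operations per second."""
    return reported_mb_s * 1_000_000 / (block_kib * 1024)


# Placeholder figure, not a real result.
example_mb_s = 50.0
print(device_throughput_mb_s(example_mb_s))  # 200.0
print(round(iops(example_mb_s, 4)))          # 12207
```

If that 4x read amplification really is happening, a 4 KiB score on AS would correspond to the drive moving four times as much data as the app asked for, which is the sense in which the 4 KiB figure might understate what the hardware is doing.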

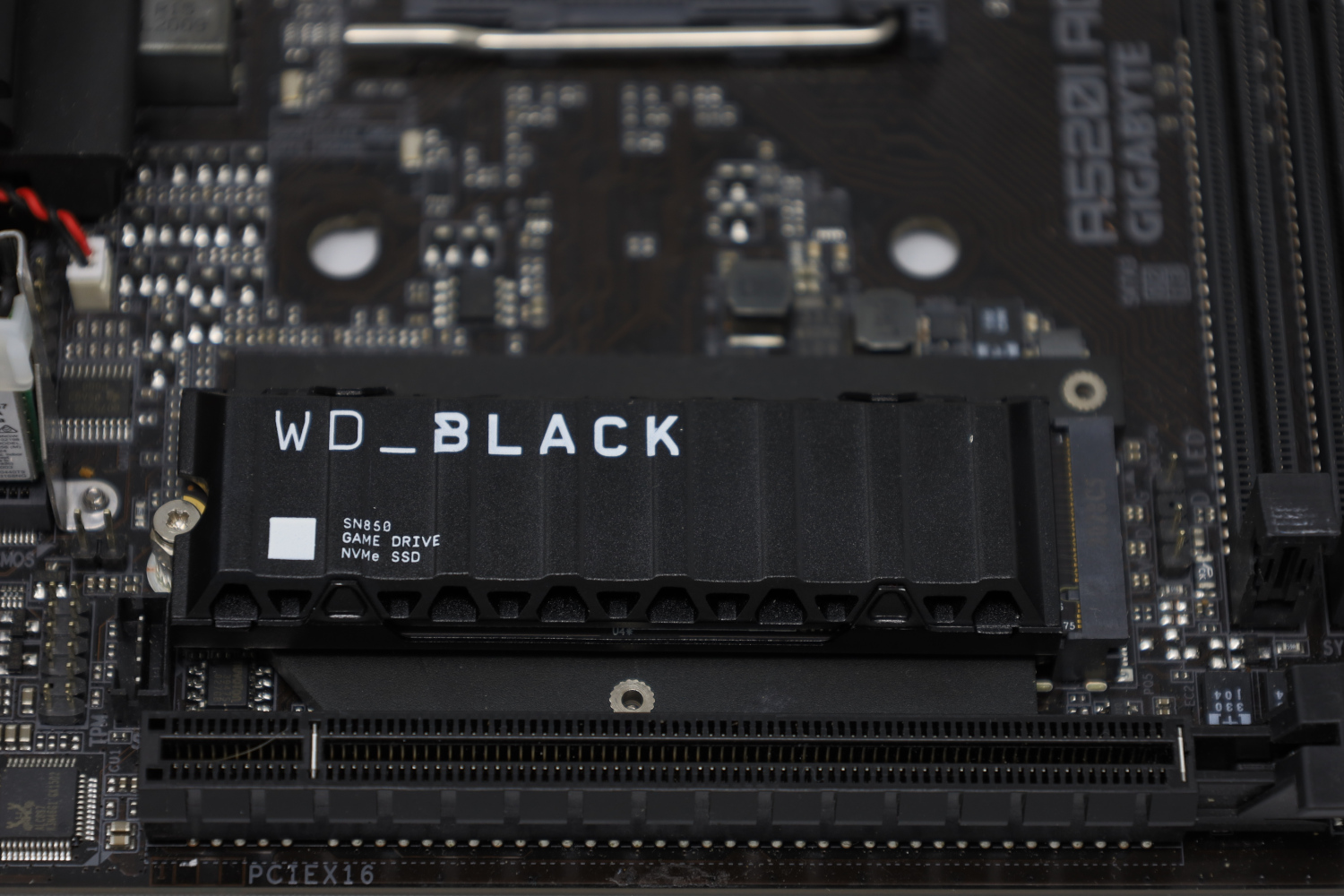

2019 i9 iMac (PCIe 3.0 interface). Stock 512 GB SSD on left. Upgraded 2 TB WD SN850 on right.

View attachment 28671

Two results for M3 Max MBPs (PCIe 4.0 interface, hence the higher sequential speeds) posted on MacRumors by posters unpretentious and 3Rock:

View attachment 28672