@NotEntirelyConfused (and everyone else of course!

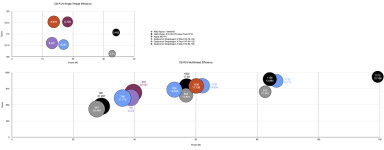

) Here's thumbnail of the findings for the new AMD chip, I just added the data to the previous graph (apologies it's a big chart and compression wasn't kind to the fuzziness of the text):

View attachment 30580

In addition to the NotebookCheck article, I'm also going to talk about the Anandtech article.

There are a few things to note here:

1) The new Zen 5 mobile P-cores on N4P represent a significant jump in ST performance compared to Zen 4. They are only 10% more efficient at the clock speed they are rated for (which is 200 MHz less than the max clocks of the Zen 4 mobile chips), but that's at better performance, implying that they could lower clock speed even more and gain efficiency, though they probably still wouldn't match the lower-end Qualcomm cores, never mind Apple's cores. So single-core efficiency is still way behind, though at least they improved performance.

2) These Strix Point chips are manufactured on a slightly better node (N4P) than the Apple (N5P) or Qualcomm (N4) chips.

Judging from this chart, the difference isn't huge: N4 looks like a slightly denser N5P with almost identical power/performance, and N4P has similar density to N4 but either 6% better performance at the same power or 11% better efficiency at the same performance for the chip TSMC bases their reference calculations on. While that certainly helps the AMD chip here, I don't expect it to predominate, especially as these are load-idle power figures for the whole device.

3) The HX 370 is a much bigger chip with 12 cores and 24 threads, 4 more cores than the 8845HS chip it replaces. True, 8 of the cores are now "c" cores, but while they are smaller and more efficient than their non-c counterparts, for multithreaded workloads I suspect the difference is minimal in this respect.

In an interview with Tom's Hardware, Mike Clark states that the Zen 5c cores are about 25% smaller than the Zen 5 cores, with most of what was taken out and rearranged having to do with silicon that allows the cores to boost to super high frequencies. Thus for the purposes of multithreaded workloads, especially endurance tests like CB, one might be tempted to say that they are effectively P-cores. However, they also have access to dramatically less cache, especially last level cache, than the standard P-cores and have high core-to-core latency, especially with the standard P-cores, as they have to communicate with them through the last level cache. This will almost certainly limit their performance relative to the standard P-cores and, in some ways, this is similar to what I suspect is limiting the Qualcomm chips in multithreaded workloads (i.e. cache and bandwidth).

4) These extra cores mean a significant percentage of the uplift in multithreaded performance simply comes from having more cores available. For instance, jumping down to the 3D Mark CPU Profile Simulation, the HX 370 only gets a 5% jump over the previous gen chip (the 8845HS and 7840HS I believe have the same CPU) when both are restricted to 8 threads, but doubles its lead in the same benchmark at max threads. As shown in the graph above, for CB R24 its lead over its predecessor is more substantial. At 57 watts, it now gets roughly the same score (1022) as the Apple M2 Pro (1030, 60W) and Qualcomm X Elite 78 (1033, 62.1W), whereas the 8845HS was about 15-16% behind (842, 56.8W). The three newest chips all now have similar efficiencies at this point in their performance/watt curves.

As stated in my previous post, it is unfortunate that we don't have X Elite 80s or 84s to compare here at this point in the performance curve; those are higher binned chips and might perform better. I think I saw a Techlinked video which stated that these are apparently more difficult to source for review sites. The Apple M3 Pro by contrast scores around 1055. Unfortunately NotebookCheck didn't do wall power measurements for it under CB R24 (I suspect they don't have one anymore), but it's in the mid-40Ws for wall power. That would make it somewhere in the range of 25-30% more efficient at ISO-performance.

The M3 Pro is on a slightly better node (N3B), but while transistor scaling increased dramatically for practical chips with increasing SRAM, again the differences in power/performance due to that alone aren't expected to predominate (a few percent compared to N4P). Overall, I suspect the M2 Pro, Qualcomm X Elite, and especially M3 Pro are a good deal more die efficient than the HX 370.
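For anyone who wants to redo the arithmetic in point 4, here's a quick sketch in Python. The scores and wall-power figures are the ones quoted above; the 44-46 W range for the M3 Pro is only my reading of "mid-40s" and is an assumption, not a measurement, and the helper name is made up for illustration.

```python
# CB R24 multi-thread score and wall power (W) as quoted in point 4.
chips = {
    "HX 370": (1022, 57.0),
    "M2 Pro": (1030, 60.0),
    "X Elite 78": (1033, 62.1),
    "8845HS": (842, 56.8),
}


def points_per_watt(score, watts):
    """CB R24 points delivered per watt of wall power."""
    return score / watts


for name, (score, watts) in chips.items():
    print(f"{name:11s} {points_per_watt(score, watts):5.1f} pts/W")

# Assumption: "mid-40s" wall power for the M3 Pro is read as 44-46 W.
# Treating 1055 vs 1022 as roughly the same performance, compare power draw.
hx_watts = chips["HX 370"][1]
extra_power = [hx_watts / w - 1 for w in (46.0, 44.0)]
print(f"HX 370 draws {extra_power[0]:.0%}-{extra_power[1]:.0%} more power than the M3 Pro")
```

With those inputs the three newest chips land within roughly 1.5 pts/W of each other, and the assumed M3 Pro range brackets the 25-30% figure above.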

5) The caveats from my previous post are expected to hold here too.

6) Anandtech compared the HX 370 to the base M3. AMD amusingly asked them to compare to the M3 in the Air; Anandtech demurred and tested the M3 in the MacBook Pro instead. As I think you can see from the above chart, for multithreaded applications, comparing to the base M3 at all, regardless of device and especially without very well controlled power data, is not a great idea. The HX 370 is a chip which can be pushed to 120W wall power (although the performance gain at that point is so small I can't think why anyone would: a measly 4% increase in performance costs a whopping 44% more power at this point in the curve!). Anandtech measured the power draw of the HX 370's 28W setting to be around 33W. I suspect this is platform power from HW Info, but Anandtech didn't specify in the article. Notebookcheck measured a different Asus device with the same chip and found that it drew 48W wall power at the 28W setting. Both got similar CB R24 scores of 927-950 (Notebookcheck-Anandtech), so I suspect we're talking similar power draws.

From what I can tell this is much more similar to the M3 Pro in powermetrics and wall power for a CPU-only test, which again scores around 1050 in CB R24, making it ~10-14% more performant at ISO power. Meanwhile, the base M3's powermetrics/wall power figures don't come even close to these under CPU load. Notebookcheck measured it in the Air and found it only drew 21W at the wall in the fanless design and got a score of about 600 pts. Anandtech measured the M3 in the MacBook Pro at 718, presumably at higher average power draw in an endurance test than the thermally limited Air, but almost certainly nowhere near the same wattage as the HX 370 in its 28W TDP configuration.
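The two ratios in point 6 are easy to sanity-check. A minimal sketch using only the figures quoted above (the ~1050 M3 Pro score, the 927-950 range for the HX 370 at its 28W setting, and the +4% performance for +44% power at the top of the curve). The function name is just mine for illustration, and the ISO-power comparison still rests on my guess that the power draws are similar.

```python
def relative_gain(a, b):
    """Fractional advantage of a over b."""
    return a / b - 1


# M3 Pro (~1050) vs HX 370 at the 28W setting (950 Anandtech, 927 Notebookcheck).
m3_pro = 1050
for hx in (950, 927):
    print(f"M3 Pro lead over {hx}: {relative_gain(m3_pro, hx):.1%}")

# Pushing the HX 370 to the top of its curve: +4% performance for +44% power.
perf_gain, power_gain = 0.04, 0.44
eff_change = (1 + perf_gain) / (1 + power_gain) - 1
print(f"Efficiency change at the top of the curve: {eff_change:.1%}")
```

That works out to a lead of about 10.5-13.3% for the M3 Pro, and roughly a 28% drop in performance per watt for that last 4% on the HX 370.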

7) The single threaded SPEC results in the Anandtech article are interesting: here you can see the AMD chip losing in INT performance to the M3 P-core but slightly beating it at FP performance. Without power measurements, not even software ones, we can't get a sense of efficiency, but we can readily assume that the M3 is far superior in that respect. Still, it shows that AMD is indeed doing very well in ST. Might be time to update the ISO-clock graphs (I keep saying that).

8) Geekbench 6 results tell a similar story for the new AMD chips. Here's an example (2780/15267 ST/MT) for the same ASUS model as tested by NotebookCheck, unclear at what TDP. For comparison, the M2 Pro is about (2663/14568), the M3 Pro is about (3179/18982), and the Qualcomm Elite 80 is (2845/14458). Here the 12 cores + SMT probably aren't helping AMD as much, especially relative to the M3 Pro, given that GB 6 switched some of its MT workloads to task-based parallelism to cut down on the "MoAr cores is better" phenomenon.
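One rough way to see how much the extra cores are buying in GB 6 is the MT/ST ratio for each chip. A quick sketch using only the scores quoted above; the ratio is a crude proxy of my own, not a Geekbench metric, and core counts differ between these chips.

```python
# Geekbench 6 (single-thread, multi-thread) scores as quoted above.
gb6 = {
    "HX 370": (2780, 15267),
    "M2 Pro": (2663, 14568),
    "M3 Pro": (3179, 18982),
    "X Elite 80": (2845, 14458),
}


def mt_scaling(st, mt):
    """Multi-thread score expressed in multiples of the single-thread score."""
    return mt / st


for name, (st, mt) in gb6.items():
    print(f"{name:11s} ST {st}  MT {mt}  MT/ST {mt_scaling(st, mt):.2f}")
```

By this measure the HX 370 (about 5.5x) scales about the same as the M2 Pro and noticeably less than the M3 Pro (about 6.0x).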

Conclusion: The new AMD chips are naturally still far behind the Qualcomm Elite and Apple M2 P-cores in ST efficiency, but performance has been much improved. This remains the weakest element of AMD's chips and it will likely take a while for x86 processors to match them, if they ever do. For MT, while AMD had to add more cores, and one suspects use much more silicon, to compete with the M2 Pro and Qualcomm Elite, the end user likely doesn't care how they did it, only that they now match them, even slightly beat them. This is particularly bad for Qualcomm since they need to be much better than AMD, as compatibility issues still abound and the rest of the SoC is underwhelming. If Qualcomm's die is cheaper though, then they can compete on price and that advantage shouldn't be discounted! Also, I've seen mixed reports about the iGPU in the AMD chip (haven't had time to look at that in depth, will try to report if there is anything interesting), but Qualcomm's was I think worse, and of course the AMD Strix Point can still be paired with a dGPU (though that sorta defeats the purpose of the APU? I mean it's a sizable iGPU). For Apple, AMD may be matching the M2 Pro, but the current M3 generation remains comfortably ahead, and of course the base M4 (3715/14690 GB 6 in the fanless iPad) is expected to come to the Mac at the end of the year, with Pro/Max chips as well. Apple remains the ST and MT mobile performance-efficiency king for now.