Jimmyjames

Site Champ

- Posts

- 892

- Reaction score

- 1,021

Nvidia recently held an event where they stated that the upcoming “AI” PCs were not that great, and you’d be better off buying an Nvidia GPU. Shocking.

Article here:

www.tomshardware.com


In any case, I’m curious what would motivate them to pursue this line. They are killing it in the server AI space and that doesn’t look to be ending any time soon. Perhaps they are concerned that they will be pushed out of a growing sector of the market: thin laptops with great battery life which also have the power to perform useful AI/ML functions. It seems certain that this sector of the market isn’t going to use discrete GPUs, so until they come up with their own SoC, they need to promote the solution they do have.

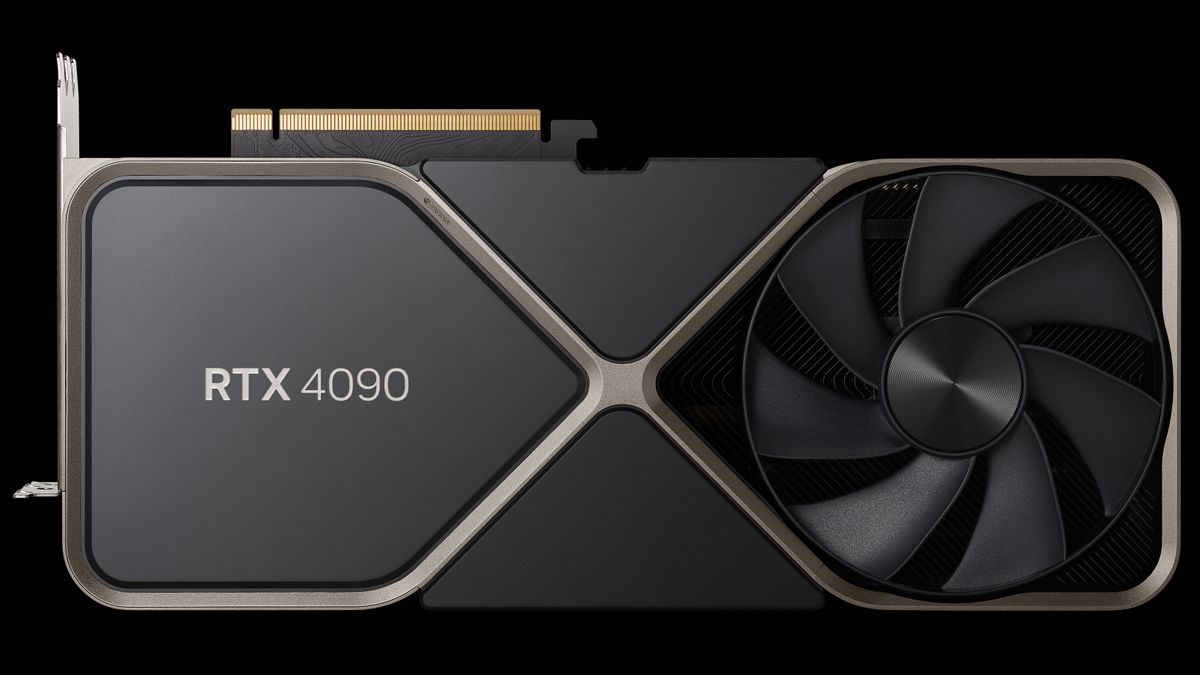

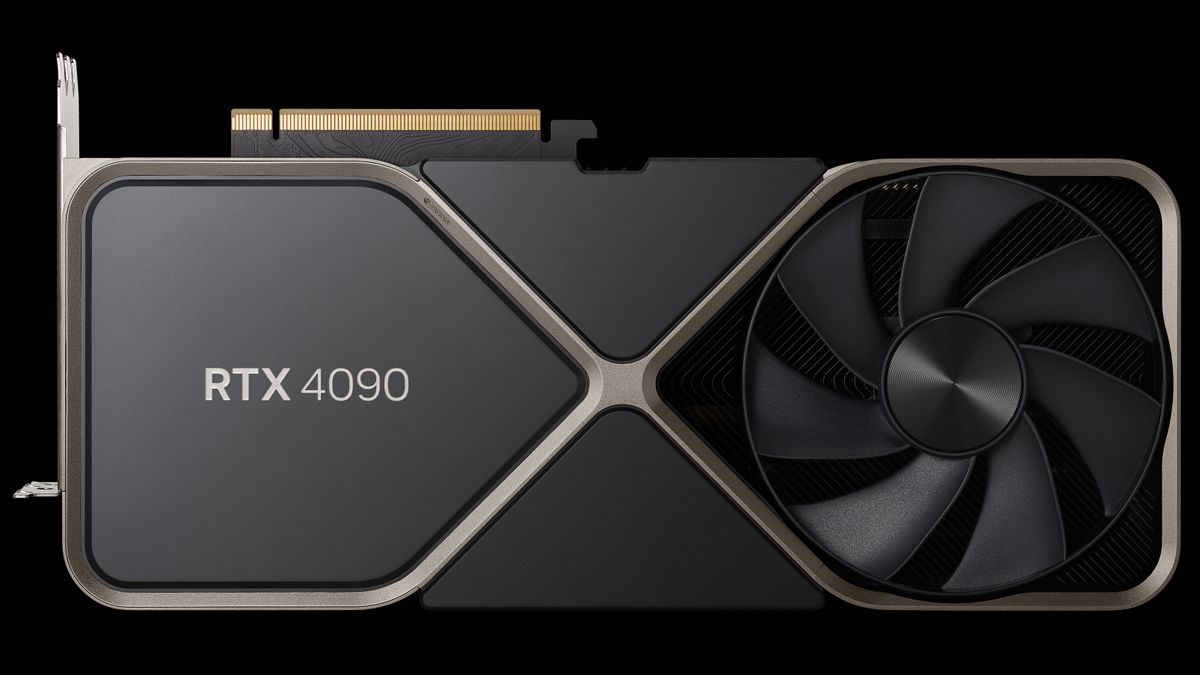

They also mention the M3 Max MacBook Pro and compare it to the 4090/4050 laptop chips on various AI tasks. Unsurprisingly, the 4090 crushes the M3 Max, though not always by as much as you might imagine. It does show that the gap between the two is significant.

Article here:

Nvidia criticizes AI PCs, says Microsoft's 45 TOPS requirement is only good enough for 'basic' AI tasks

Nvidia says its GPUs provide substantially better AI-performance than today's bleeding edge NPUs.
