Eric

From the WP:

The video in question

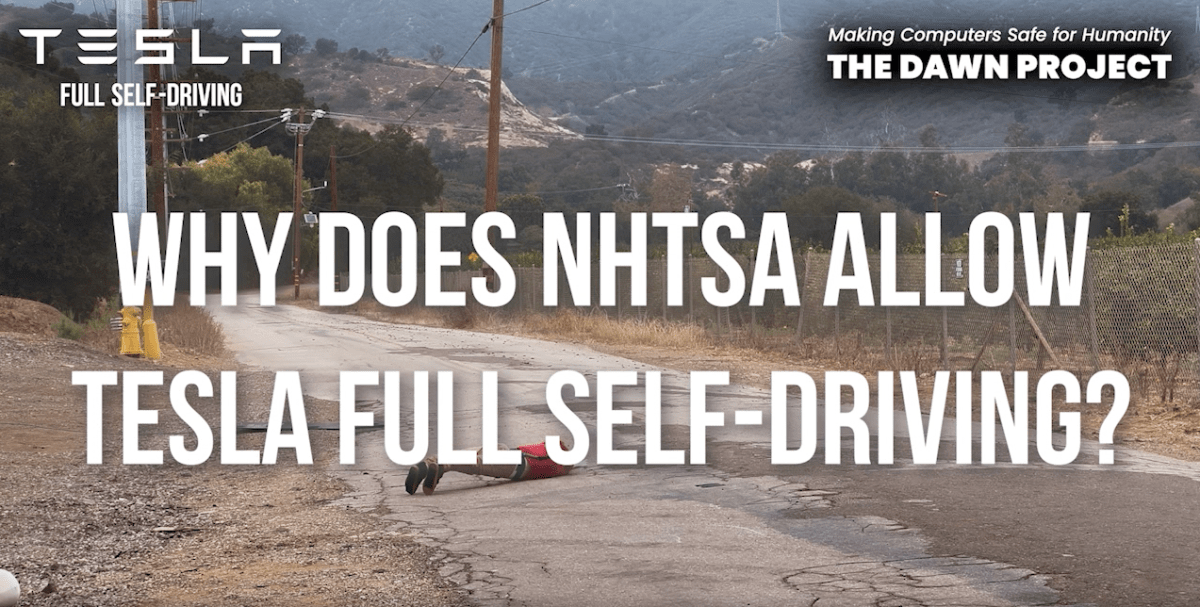

SAN FRANCISCO — Tesla is demanding an advocacy group take down videos of its vehicles striking child-size mannequins, alleging the footage is defamatory and misrepresents its most advanced driver-assistance software.

In a cease-and-desist letter obtained by The Post, Tesla objects to a video commercial by anti-“Full Self-Driving” group the Dawn Project that appears to show the electric vehicles running over mannequins at speeds over 20 mph while allegedly using the technology. The commercial urges banning the Tesla Full Self-Driving Beta software, which enables cars on city and residential streets to automatically lane-keep, change lanes and steer.
