“Architecture” may mean different things at different companies, but at the companies I worked at it had a fairly consistent meaning. “Architecture” should be distinguished from “instruction set architecture” (ISA). The vast majority of the time, when we are designing a CPU, the instruction set architecture is largely already defined. It’s rare that CPU designers get an opportunity to even add a new instruction or two, let alone design an entire instruction set from scratch. AMD64 (now commonly called x86-64) is an example of a new ISA I had the privilege to work on.

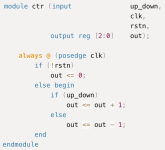

Architecture, on the other hand, assumes that the ISA is largely set. The job of the architect is to figure out, at a high level, how to implement the instruction set. This results in the “microarchitecture” of the design. The microarchitecture includes things like:

- How is the CPU partitioned into high-level functional units

- How many, and what type, of cores

- How many ALUs (pipelines) in each core, and how many stages in each pipeline

- What functional units are present

Figures: block diagram for the Exponential x704 PowerPC; block diagram for the F-RISC/G GaAs multi-chip processor.

The architect typically writes a software model for the design in a high-level modeling language. Verilog and VHDL are traditional examples, but I’ve used C, C++, and extensions to those languages as well.

The model is a software program that can be executed to simulate the microprocessor. Sometimes there are two models - one model that is optimized for speed, and another model that is “cycle accurate.” The latter includes many more details of the structure of the microprocessor, and breaks computations into smaller steps. For example, the “fast” model may implement a multiplier like this:

Result := operandA * operandB

The cycle-accurate model may implement the multiplications with a Wallace tree that takes multiple cycles.

Sometimes the model is “timing accurate” but not “cycle accurate.” Instead of implementing the details of what gets done in each clock cycle, the model uses simple logic to perform the calculation, but accurately reflects how many cycles it takes to do so.
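To make the three modeling styles concrete, here is a minimal C++ sketch of the same multiplier written each way. Everything in it is an assumption for illustration: the names, the 32-cycle latency, and in particular the use of a simple shift-and-add multiplier in place of a Wallace tree for the cycle-accurate version, since the point is only that state advances one clock at a time.

```cpp
#include <cassert>
#include <cstdint>

// Fast model: a single statement with no notion of time.
std::uint64_t fast_multiply(std::uint32_t a, std::uint32_t b) {
    return static_cast<std::uint64_t>(a) * b;
}

// Assumed latency in clock cycles (illustrative, not from any real design).
constexpr int kMulLatency = 32;

struct TimedResult {
    std::uint64_t value;
    int cycles;
};

// Timing-accurate model: same simple arithmetic as the fast model,
// but it also reports how many cycles the operation would take.
TimedResult timed_multiply(std::uint32_t a, std::uint32_t b) {
    return {fast_multiply(a, b), kMulLatency};
}

// Cycle-accurate model: internal state changes on every clock.
// A shift-and-add multiplier stands in for the Wallace tree here.
class ShiftAddMultiplier {
public:
    void start(std::uint32_t a, std::uint32_t b) {
        multiplicand_ = a;
        multiplier_ = b;
        accumulator_ = 0;
        cycles_ = 0;
    }

    // One clock edge: consume one bit of the multiplier.
    void clock() {
        assert(!done());
        if (multiplier_ & 1u) {
            accumulator_ += multiplicand_;
        }
        multiplicand_ <<= 1;
        multiplier_ >>= 1;
        ++cycles_;
    }

    bool done() const { return cycles_ == kMulLatency; }
    std::uint64_t result() const { return accumulator_; }
    int cycles() const { return cycles_; }

private:
    std::uint64_t multiplicand_ = 0;
    std::uint32_t multiplier_ = 0;
    std::uint64_t accumulator_ = 0;
    int cycles_ = 0;
};

// Clock the cycle-accurate model until it finishes.
TimedResult run_cycle_accurate(std::uint32_t a, std::uint32_t b) {
    ShiftAddMultiplier m;
    m.start(a, b);
    while (!m.done()) {
        m.clock();
    }
    return {m.result(), m.cycles()};
}
```

All three produce the same product. The timing-accurate and cycle-accurate versions also agree on the cycle count, but only the cycle-accurate one exposes what the hardware state looks like in between.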

The point of these models is threefold:

1) they serve as the canonical definition for the behavior of the processor. As the design work progresses, the design is constantly compared to these models to ensure that the design is functionally correct. (This can be done in at least two ways, which are topics for another day.)

2) they tell the logic designers what they are designing. The logic designers read the model to understand what each block they are designing is supposed to do.

3) in some design flows, they are used as inputs to automated “synthesis” tools that produce gate-level designs from the functional models. This is usually a bad idea for anything other than the least important logic.
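As a rough illustration of the first point, the sketch below treats a behavioral model as the reference and checks a stand-in "design" against it on random inputs. This is only one generic way to compare two implementations, and not necessarily either of the methods alluded to above. All names are hypothetical, and `truncating_multiply` contains a deliberate bug so that the check has something to catch.

```cpp
#include <cstdint>
#include <functional>
#include <random>

using MulFn = std::function<std::uint64_t(std::uint32_t, std::uint32_t)>;

// Count the random input pairs on which the design disagrees with the reference.
int count_mismatches(const MulFn& reference, const MulFn& design,
                     int trials, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::uint32_t> dist;  // full 32-bit range
    int mismatches = 0;
    for (int i = 0; i < trials; ++i) {
        const std::uint32_t a = dist(rng);
        const std::uint32_t b = dist(rng);
        if (reference(a, b) != design(a, b)) {
            ++mismatches;
        }
    }
    return mismatches;
}

// Reference behavior: the canonical definition of the operation.
std::uint64_t reference_multiply(std::uint32_t a, std::uint32_t b) {
    return static_cast<std::uint64_t>(a) * b;
}

// Stand-in for a correct design: shift-and-add, one multiplier bit per step.
std::uint64_t shift_add_multiply(std::uint32_t a, std::uint32_t b) {
    std::uint64_t multiplicand = a;
    std::uint64_t accumulator = 0;
    for (int bit = 0; bit < 32; ++bit) {
        if ((b >> bit) & 1u) {
            accumulator += multiplicand << bit;
        }
    }
    return accumulator;
}

// Stand-in for a buggy design: the upper 32 bits of the product are lost.
std::uint64_t truncating_multiply(std::uint32_t a, std::uint32_t b) {
    return static_cast<std::uint32_t>(a * b);
}
```

Calling `count_mismatches(reference_multiply, shift_add_multiply, 1000, 1)` should report no disagreements, while the same call with `truncating_multiply` should report many.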

These models describe the behavior of the processor, not the structure of the processor. This can get somewhat confusing, because languages such as Verilog and VHDL can also be used to define the structure of a logic circuit, and for some types of products, including even some CPUs, designers may skip the purely-behavioral representation entirely. However, in my experience, for the most advanced and high performance processors, it is important to keep behavior and structure separate. Among other things, this simplifies the process of “verifying” the logical operation of the CPU.

Typically the architectural model is divided into different files and/or modules, each of which is intended to correspond, at least roughly, to a physical module. This is important because the module definition will declare each of the inputs and outputs (or, sometimes, “inouts”) that define the interface to the module. Because these decisions have physical consequences, it is important for the architect to work with the physical designers to understand what partitioning makes the most sense.
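A minimal sketch of what a declared module interface might look like in a C++ model follows. The ALU, its operations, and its signal names are all hypothetical. The idea is simply that the inputs and outputs are spelled out as types, and the body describes behavior only, saying nothing about gates or wires.

```cpp
#include <cstdint>

// Hypothetical operation encoding for an illustrative ALU module.
enum class AluOp : std::uint8_t { Add, Sub, And, Or };

// The module interface: every input and output is declared explicitly.
struct AluInputs {
    AluOp op;
    std::uint32_t a;
    std::uint32_t b;
};

struct AluOutputs {
    std::uint32_t result;
    bool zero;  // set when the result is 0
};

// Behavioral description: what the module computes, not how it is built.
AluOutputs alu_evaluate(const AluInputs& in) {
    std::uint32_t r = 0;
    switch (in.op) {
        case AluOp::Add: r = in.a + in.b; break;
        case AluOp::Sub: r = in.a - in.b; break;
        case AluOp::And: r = in.a & in.b; break;
        case AluOp::Or:  r = in.a | in.b; break;
    }
    return {r, r == 0};
}
```

Adding a signal to the module interface then shows up as a new field in `AluInputs` or `AluOutputs`, which makes interface changes easy to spot when the model is revised.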

Once the architectural model is created and verified as functional, it may continue to change throughout the design process. Logic may have to be moved across module boundaries in order to enable timing requirements to be fulfilled, for example. Extra signals may need to be added to a module interface to take advantage of extra time available in one module or another. Or some other physical design problem may require a module to be redesigned in some way.

In a typical CPU core, top-level modules would include: instruction fetch, instruction decode, instruction scheduling, integer execution, floating point execution, the register file, caches and load/store. Each module will likely have submodules - for example, the integer execution unit may have one or more ALU submodules.
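The module hierarchy described above can be pictured as a simple tree. The sketch below is purely illustrative: the `Module` type and the helper functions are hypothetical, the module names come from the list above, and it treats caches and load/store as two separate modules.

```cpp
#include <string>
#include <vector>

// A module has a name and zero or more submodules.
struct Module {
    std::string name;
    std::vector<Module> submodules;
};

// Build a core with the top-level modules listed above and a
// configurable number of ALU submodules inside integer execution.
Module make_core(int num_alus) {
    Module integer{"integer execution", {}};
    for (int i = 0; i < num_alus; ++i) {
        integer.submodules.push_back({"alu" + std::to_string(i), {}});
    }

    Module core{"core", {}};
    core.submodules.push_back({"instruction fetch", {}});
    core.submodules.push_back({"instruction decode", {}});
    core.submodules.push_back({"instruction scheduling", {}});
    core.submodules.push_back(integer);
    core.submodules.push_back({"floating point execution", {}});
    core.submodules.push_back({"register file", {}});
    core.submodules.push_back({"caches", {}});
    core.submodules.push_back({"load/store", {}});
    return core;
}

// Count a module plus everything beneath it.
int count_modules(const Module& m) {
    int total = 1;
    for (const Module& sub : m.submodules) {
        total += count_modules(sub);
    }
    return total;
}
```

In a real model each node would also carry the interface and behavior of that module. Here the tree only captures the partitioning.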

Computer architecture is a huge topic, encompassing everything from cache sizing and organization to branch prediction algorithms to how many pipeline stages should be used. Hennessy and Patterson is considered the bible in this field in case you want to learn more. A free electronic copy of the fifth edition of their quantitative architecture book is here: http://acs.pub.ro/~cpop/SMPA/Computer Architecture A Quantitative Approach (5th edition).pdf

A free pdf of their computer organization and logic design book is here: https://ict.iitk.ac.in/wp-content/u...mputerOrganizationAndDesign5thEdition2014.pdf

I particularly recommend the latter as a starting point for those interested in computer design as a whole.

Next time I will address what my employers always called “logic design,” but which many companies would probably call “physical design.”