Honestly, only 6x faster AI than M1 is less progress than I’d have expected by now.

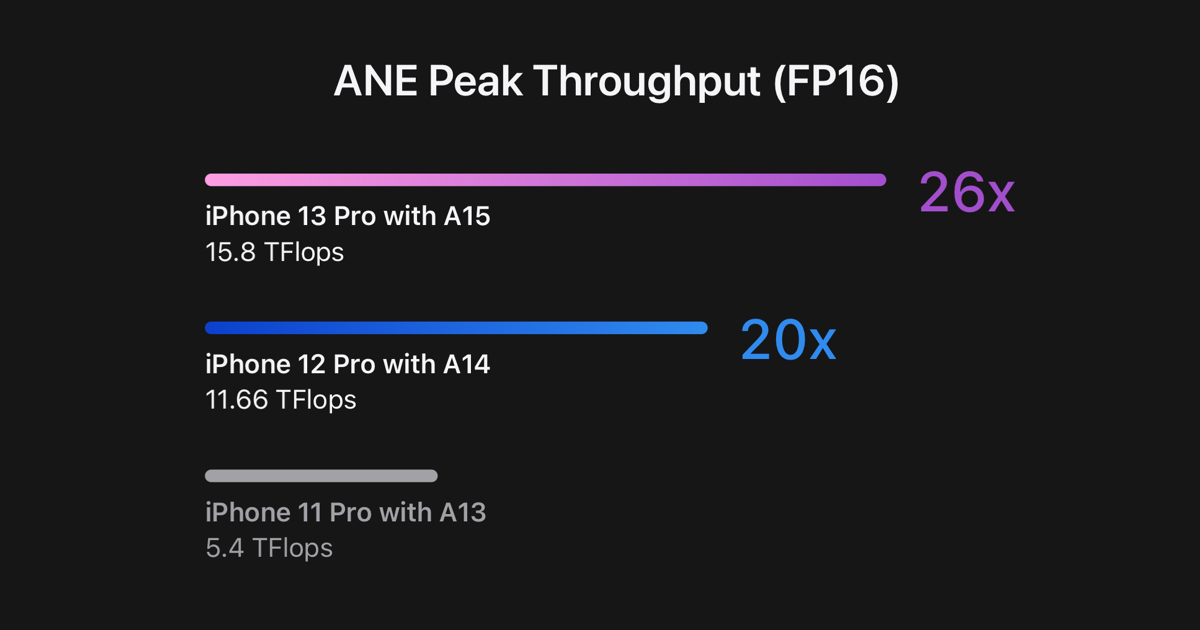

The clock frequency has increased only marginally (25%), we have two extra GPU cores, and the MXU units have 4x throughout in fp16. I can see how they arrive at 6x improvement. It’s still 15 TFLOPs - the same as M4 Max or half of Nvidia 5050. I think that’s not bad at all for starters. The GPU has seen some other massive improvements too. Integer multiplication is twice as fast, exp/log are twice as fast, and I’m sure there are other things too.

BTW, Apple undersells their MXU units. The 4x improvement applies to FP16 precision, but INT8 runs almost two times faster still. So I’d expect around 25-30 TOPS for INT8 out of M1 GPU.

Last edited: