> That's showing the X Elite pulling more than 70 watts in Cinebench for just the CPU cores... That's... Not great.

“Not great” is a fun way to put it.

> That's showing the X Elite pulling more than 70 watts in Cinebench for just the CPU cores... That's... Not great.

Right! 70 watts for the CPU, 30 or so watts for the GPU. Pretty soon we’re talking about serious numbers.

> Right! 70 watts for the cpu, 30 or so watts for the gpu. Pretty soon we’re talking about serious numbers.

Amazing coming from QC themselves with those slides.. I mean, they MAY be talking about the entire device power consumption? But even then, 70W for that kind of performance is terrible.

> Amazing coming from QC themselves with those slides.. I mean, they MAY be talking about the entire device power consumption? But even then, 70W for that kind of performance is terrible.

That slide does seem to correspond to Notebookcheck's scores for it - it looks roughly 1.6x the score of the Asus Zenbook 14 in the chart. The only thing I can think of is that in the fine print of Qualcomm's charts they mentioned the Asus Zenbook was running unconstrained? So maybe both scores at 70W would be higher than below? Notebookcheck does say the max score recorded for the 155H was about 1024 points. Otherwise a score of 1220 at 70W is really, really bad. The M2 Pro scores roughly the same as the M3 Pro (a little lower). I think we discussed this last time, but a 12 P-core chip drawing 70W should be much, much better than an 8+4 chip drawing half that (less?). Even compared to the 23W score it's 3x the power for 30% more performance? That shouldn't be the case, so maybe the 80W score here is not really the 70W from the charts? That would put it at about 1500-1600 at 70 watts, which is not great for 12 M2-like P-cores, but not awful?
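To put the "3x the power for 30% more performance" remark in points-per-watt terms, here is a rough sketch. It uses only figures quoted in this thread (1220 points at about 70W, a 23W operating point, a 30% gain, about 1024 points for the 155H); the 23W score is back-computed from the 30% figure and is an assumption, not a measurement.

```python
def points_per_watt(score, watts):
    """Benchmark points delivered per watt of power draw."""
    return score / watts

# Figures quoted in the thread (not measured here).
SCORE_AT_70W = 1220   # CB24 multi-core score attributed to ~70 W
HIGH_POWER_W = 70
LOW_POWER_W = 23
PERF_GAIN = 1.30      # "30% more performance" going from 23 W to 70 W

# Implied score at 23 W, back-computed from the 30% figure (an assumption).
implied_low_power_score = SCORE_AT_70W / PERF_GAIN

eff_high = points_per_watt(SCORE_AT_70W, HIGH_POWER_W)
eff_low = points_per_watt(implied_low_power_score, LOW_POWER_W)

# Alternative estimate: 1.5x to 1.6x the ~1024 points reported for the 155H.
est_low, est_high = 1.5 * 1024, 1.6 * 1024

print(f"power ratio:        {HIGH_POWER_W / LOW_POWER_W:.2f}x")
print(f"points/W at 70 W:   {eff_high:.1f}")
print(f"points/W at 23 W:   {eff_low:.1f} (implied)")
print(f"scaled estimate:    {est_low:.0f} to {est_high:.0f} points")
```

On those numbers the chip would deliver well under half the points per watt at 70W that it does at 23W, which is why a 1500-1600 score at 70W looks more plausible than 1220.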

> It’s all very confusing tbh. Here a slide from last October. I don’t know why Andrei obfuscates, but he should speak to qualcomms marketing department! And to be clear, I’m not saying the gpu reaches 80w, but the soc appears to at least.

For what it's worth, the October slide actually seems to support this better score at 70W. The 13800H also is a pretty close score to the M2 Pro (again a little lower, 976). The best score of the Elite looks better here than 25% more than the 13800H? Again maybe 1.5-1.6x?

View attachment 29136

> I think we discussed this last time but a 12 P-core chip drawing 70W should be much, much better than an 8+4 chip drawing half that (less?)

Less than half.. The M3 Pro tops out around 29W on the CPU side from memory? M2 Pro around 34W?

> Less than half.. The M3 Pro tops out around 29W on the CPU side from memory? M2 Pro around 34W?

Yeah, M2 Pro was the same as M2 Max (at 12 cores) and I think it was ~35W CPU and ~41W total package? Something like that? Obviously the M3 is just better than the Oryon ... but I think maybe given the node and the overall design the M2 is the better comparison in terms of where Qualcomm is. To me the Oryon is answering the question: what would happen if you took 12 M2 P-cores with no E-cores and ramped the clock speed up? Obviously the fabric and SOC cache design is different too, and Apple's may be better here. For the Oryon, I'm going with a CB24 score of 1500-1600 at 70W because I can't believe it's only 1220. But maybe it really is that bad ...

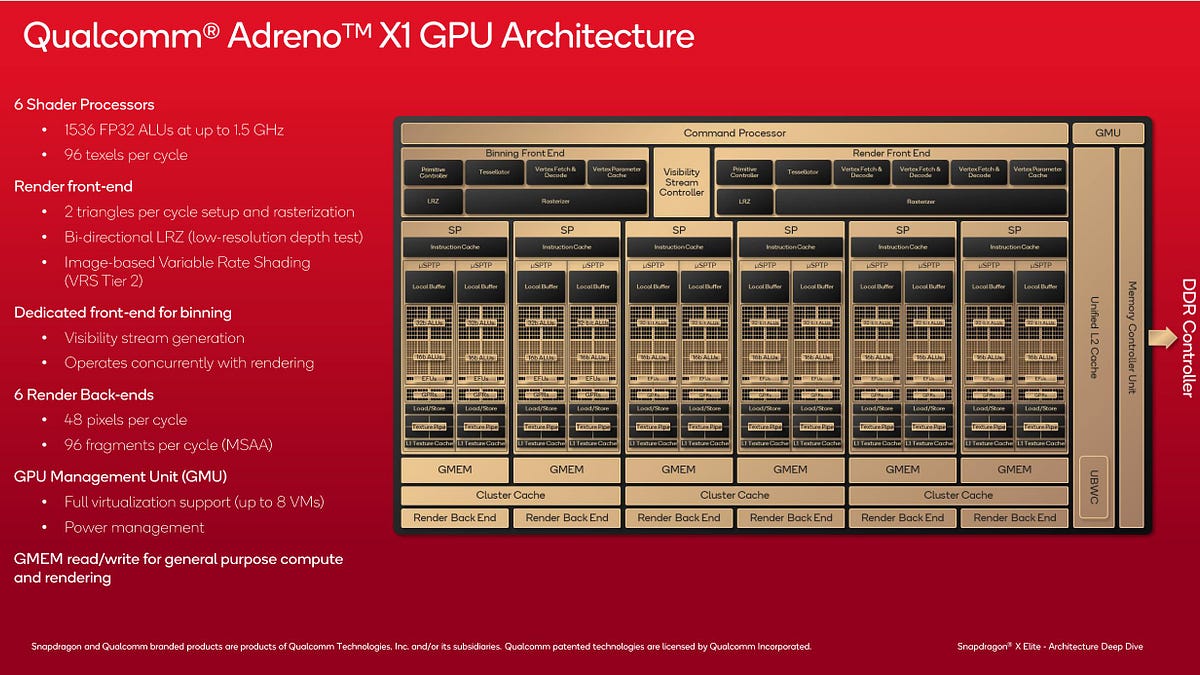

Well maybe I’m wrong, but I don’t see how they get to 80W - certainly it ain’t from the GPU, which, topping out at 4.6TFLOPs, is the same size as some of Qualcomm’s phone GPUs and basically moderately bigger than an M3 base GPU. So if they are hitting 80W from their SOC alone, it’s because they are riding the CPU hard on GHz.

Edit: 4.6 not 4.2

> but I think maybe given the node and the overall design the M2 is the better comparison in terms of where Qualcomm is. To me the Oryon is answering the question what would happen if you took 12 M2 P-cores with no E-cores and ramped the clock speed up?

> A note on that: 4.6TFLOPs certainly sounds impressive, but since it’s the same GPU as in their high-end smartphones that excels at synthetic graphical benchmarks and fails badly in compute… I wouldn't expect too much. The current GB6 compute entries are between A14 and base M1.

Absolutely, in fact my point was that for the 80W form factor a 4.6TFLOP GPU isn't impressive at all. That power level is M2/M3 Max territory, and the M2/M3 Max GPU is nearly 3x that, isn't it? They'll need a dGPU. Even without their compute troubles, that level of GPU won't cut it except for maybe a coding/development machine which is otherwise unconcerned with GPU performance beyond driving a nice screen or two ... or three.

> I’ve said it before and I’ll say it again - to me it seems that Oryon is a rebuild of Firestorm at N4. They can run it at M2 clocks with slightly lower power, but it takes a huge efficiency hit if the clocks are pushed any further. I am still puzzled by Qualcomm claims that a single Oryon core outperforms an Avalanche while using less power yet 12 Oryon cores have difficulty keeping up with 8 Avalanche cores while consuming more power. No idea whether it’s the power system or some other factor, the scaling is really bad though.

Since Avalanche is a rebuild of Firestorm at N4 (though maybe a better one), I think we're on the same page.

> Since Avalanche is rebuild of Firestorm at N4 (though maybe a better one) I think we're on the same page.

M2 was N5P I thought? No idea what the difference is.

Avalanche did introduce some tweaks to the buffer sizes etc.

> @leman posted over at Macrumors a recent chipsandcheese article that is highly relevant!
>
> They appear to conclude that a lot of Qualcomm’s difficulties in compute is a result of cache (or lack thereof). I’ll need a more thorough reading.

They issued a correction, though nothing concerning the caches and compute issue we were discussing:

I wrote about Qualcomm iGPUs in three articles. All three were difficult because Qualcomm excels at publishing next to no information on their Adreno GPU architecture.

I originally started writing articles because I felt tech review sites weren’t digging deep enough into hardware details. Even when manufacturers publish very little info, they should try to dig into CPU and GPU architecture via other means like microbenchmarking or inferring details from source code. A secondary goal was to figure out how difficult that approach would be, and whether it would be feasible for other reviewers.

Adreno shows the limits of those approaches.

I really don’t see 5.7TFLOPs FP32 on the 750 happening, at least not in any way that’s meaningful. First, that number alone is ridiculously high. It would require 2048 FP32 pipelines running at 1.4GHz to get to that number. Are they claiming that the 8gen3 has a GPU as large as an RX 7600? I mean, sure, if they use very wide SIMD and sacrifice precision, they could get there, but the shader utilization in real world work would be terrible. Look at GB compute results: they have difficulty competing with older Apple designs. Does it look like a 5+ TFLOPs GPU to you?

Where Qualcomm has a big advantage is memory bandwidth. Then again, Apple probably has more cache.

| | A18 Pro | 8 Elite | Dimensity 9400 |
| --- | --- | --- | --- |
| GPU | 6-core Family 9 | Adreno 830 | Immortalis G925 MC12 |
| Clock speed | 1.5 GHz | 1.1 GHz | 1.6 GHz |
| FP32 ALUs | 768 | 1536 | 1536 |

Both Qualcomm and ARM use separate Int/FP pipes. The original post about Qualcomm you’re quoting and the follow-ons about ARM basically came to the conclusion that the >5 TFLOP figures were based on incorrect information for those older GPUs. The 750 wasn’t over 5 and the old Immortalis wasn’t close to 6. You can even see that in the 8 Elite info in your chart (which is correct) the resulting TFLOPs is about 3.4. The new Immortalis is just under 5 TFLOPS in your chart though I have doubts that it stays at 5 TFLOPS for any significant time given actual results from performance data as well as a simple power cost estimate, which would overwhelm a typical phone.
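The TFLOPS figures in this exchange can be reproduced with the usual peak-throughput estimate. The sketch below assumes each FP32 ALU retires one fused multiply-add (counted as 2 FLOPs) per clock, which is the common convention but an assumption here; the ALU counts and clocks come from the chart and from the 2048-pipeline, 1.4GHz example earlier in the thread. The function name is illustrative.

```python
def peak_fp32_tflops(fp32_alus, clock_ghz, flops_per_alu_per_clock=2):
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes every ALU issues one FMA (2 FLOPs) each cycle, so this is
    an upper bound rather than a sustained figure.
    """
    return fp32_alus * flops_per_alu_per_clock * clock_ghz / 1000.0

# (FP32 ALUs, clock in GHz) as quoted in the thread.
gpus = {
    "A18 Pro (6-core Family 9)": (768, 1.5),
    "8 Elite (Adreno 830)": (1536, 1.1),
    "Dimensity 9400 (Immortalis G925 MC12)": (1536, 1.6),
    "2048 pipelines at 1.4 GHz (the 5.7 TFLOPS claim)": (2048, 1.4),
}

for name, (alus, ghz) in gpus.items():
    print(f"{name}: {peak_fp32_tflops(alus, ghz):.2f} TFLOPS")
```

These are theoretical peaks only; as noted above, sustained throughput on a phone is limited by power and thermals.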

What if Qualcomm/ARM(Mali, Immortalis) GPUs use the FP32 pipes for INT operations also?

This would be similar to Nvidia's Pascal GPU architecture (GTX 10 series). Pascal's FP32 pipes did INT32 operations also.

Its successor Turing (RTX 20 series) added separate INT32 pipes to the SM.

Maybe Apple's GPU is similar to Turing. That is, they have separate pipes for INT and FP.

According to Nvidia, while the majority of PC gaming is FP32 operations, about 30% are INT32 operations.

This would mean that when you are running a game/benchmark on an Adreno/Immortalis GPU, it cannot utilise its full FP32 throughput because part of the ALUs have to do INT32 operations. Apple's GPU wouldn't have such an issue if it has separate INT pipes, so the FP pipes can be utilised to the maximum.
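As a what-if, the effect described here can be put into a toy model. It treats the instruction stream as a fixed mix of FP32 and INT32 operations with perfect scheduling, and assumes any separate INT pipes are as wide as the FP pipes; real GPUs are far messier, and the reply elsewhere in this thread says Qualcomm and ARM do use separate pipes. The function name and the 30% mix are illustrative.

```python
def effective_fp32_fraction(int_fraction, separate_int_pipes):
    """Fraction of peak FP32 throughput actually achieved (toy model).

    int_fraction: share of issued operations that are INT32 (0 <= x < 1).
    separate_int_pipes: True if INT32 work runs on its own pipes
    (assumed equal in width to the FP32 pipes).
    """
    if not 0.0 <= int_fraction < 1.0:
        raise ValueError("int_fraction must be in [0, 1)")
    fp_fraction = 1.0 - int_fraction
    if separate_int_pipes:
        # INT and FP overlap; runtime is set by the busier pipe.
        return fp_fraction / max(fp_fraction, int_fraction)
    # Shared pipes: every INT op takes an issue slot an FP op could have used.
    return fp_fraction

for shared in (True, False):
    frac = effective_fp32_fraction(0.30, separate_int_pipes=not shared)
    label = "shared FP/INT pipes" if shared else "separate INT pipes"
    print(f"{label}: {frac:.0%} of peak FP32")
```

Under these assumptions, a 30% integer mix costs a shared-pipe design about 30% of its headline FP32 rate, while a design with separate pipes keeps its FP pipes fully busy.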

> Qualcomm seems to have improved their GPU in Gen 5 (at least in gaming, not sure about compute):
>
> Snapdragon 8 Elite Gen 5 dominates Apple's A19 Pro in gaming tests aboard the Xiaomi 17 Pro Max (www.notebookcheck.net)
>
> The Xiaomi 17 Pro Max and Apple's iPhone 17 Pro Max are the two most powerful smartphones on the market at the moment. Gaming tests show, however, that the Snapdragon 8 Elite Gen 5-powered phone holds consistent advantages in gaming performance, efficiency, and thermal management.
>
> Haven’t watched the original Geekerwan video this video is based on yet though.

Yeah, if anything I think Apple narrowed the gap on some easier tests and games. Many of these games seem capped at 60fps and one or two issues can bring down the average. In Steel Nomad Lite, the last gen 8 Elite had a bigger lead over the A18 Pro than the 8 Elite Gen 5 does over the A19 Pro. On Solar Bay Extreme, the A19 Pro leads.

> Geekerwan also stated they use motherboard power measurements, so those figures are a little sus. Not to say they aren’t correct, but there have been some issues with Geekerwan’s testing lately. Some complaints that their SPEC tests are off on Android due to the use of Flang vs gfortran. Another Chinese reviewer, Xiaobai, also does SPEC tests and results are better on the integer part. Also interestingly, while Geekerwan and Xiaobai agree on Android power measurements for the Gen 5, Xiaobai gets much lower power measurements for the A19 Pro.

People who complain about using a different compiler for SPEC do not know what they're talking about. SPEC deliberately does not constrain your choices of compiler, compiler flags, linked libraries, and so on. Most choices are perfectly valid, including many that dramatically affect scores either positively or negatively.

This is due to SPEC's origins as an industry consortium run by competing UNIX workstation vendors. These were quite hostile to one another, but managed to get together to agree upon a common standard benchmark they could use for marketing purposes, since before SPEC it was difficult to make a case for "we're faster than the other guys".

Most of these companies had their own private RISC ISA, their own private UNIX, and their own private compiler and other software development tools. If they wanted to put their hardware in the best possible and most realistic light, they wanted to use their own toolchain. (Note that at the time, open source compilers weren't nearly as good as they are today.)

As a result, SPEC CPU is not a benchmark for comparing CPUs in isolation. It's for comparing CPU performance in the context of systems. A system includes the CPU, memory, OS, toolchain, etc.

SPEC isn't the wild west and does have some rules, but choosing to use flang over gfortran (or vice versa) is fine. Also, rules are only enforced on submissions for the official spec.org score database. Most independent reviewers like Geekerwan don't submit official runs; instead they're using SPEC as a tool they can use to accumulate their own private score database. As such, what you should be looking for from Geekerwan is whether their SPEC methodology stays self-consistent over time, so that all the scores in their own database can meaningfully be compared to each other. (Anandtech was pretty good about this back when they started using SPEC as a benchmarking tool.)

Disagreement between Geekerwan and Xiaobai, though? Perfectly normal and expected as far as I'm concerned, and it shouldn't be assumed to reflect negatively on either.

Thanks for this. Super interesting context.
