Jimmyjames

Elite Member

- Joined

- Jul 13, 2022

- Posts

- 1,434

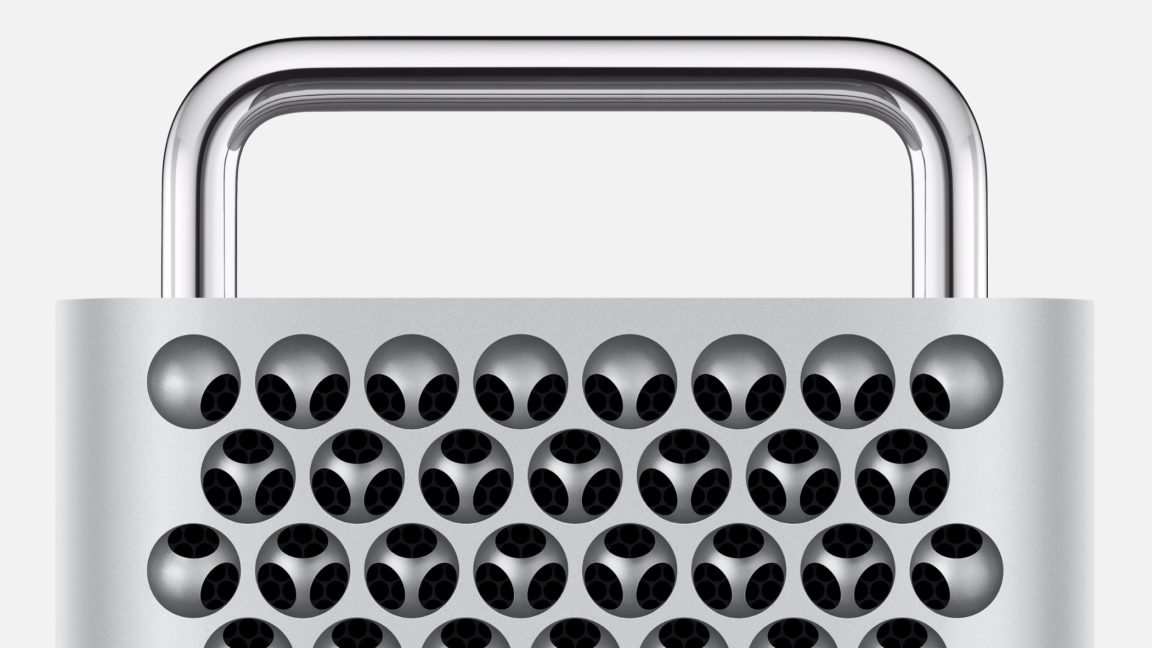

This whole thing is bizarre to me. It seems Apple planned a very complex transition and yet, without completing one full generation of chips, completely underestimated what was necessary for high-end desktops and, more importantly, high-end GPU performance.

I find this believable. It's not like an M2 Extreme is going to look good next to Genoa or Sapphire Rapids, and it won't be able to compete with high-end GPUs either. Who would buy an extremely expensive, underperforming tower? If Apple is serious about desktop, they have to invest in vertical scaling and scaling in general. There is also still a severe software issue. You probably don't need a Mac Pro for photo or even video editing; the Studio does it all beautifully. And Apple Silicon currently underperforms for 3D rendering, a common market for larger workstations. What's left for the Mac Pro? Software development? Massive overkill. Number crunching? Not the best use of the money. GPU processing or ML work? Not mature or fast enough...

So yeah, from how things are going now, Apple either has to recalibrate their approach and come up with more powerful/scalable chips, or move to mobile only.

So I'm wondering if it's Gurman's story that's incorrect, or Apple just doesn't care about desktops. If it's the latter, why bother with the 2019 Mac Pro?

I've said it previously, but I have surprised myself at how quickly my feelings on Apple Silicon have gone from absolute certainty that it's the future and the correct decision...to a growing sense of dread (like the 2013 Mac Pro) that they've taken a terrible wrong turn for more demanding users.