It's not bad compared to x86 cores, but at the same time I don't really follow the node-advantage argument. The Nuvia team is using quite a lot of power to reach these scores on N4P (a node either identical or superior to what the M2 uses, depending on which analysis you trust).

Eh. M2 Macs in practice are not actually using something like 5W of active power for full ST. An A15 iPhone might (and even then it can go higher), but some people (AMD and Intel fans are worse in other ways, obviously: some are totally disingenuous and think power is no big deal, but still) have a habit of underestimating Apple's power by using `powermetrics`. That tool is not only badly modeled and inaccurate; as of recent updates it only captures SoC power, not even DRAM, much less power delivery etc. (which isn't the case here).

Wall power minus idle, or ideally direct PMIC/fuel-gauge instrumentation, is the gold standard.
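A minimal sketch of the wall-minus-idle method, assuming you log several wall-meter readings under load and at idle with the same display, brightness and peripherals, so the static baseline cancels out. The readings below are hypothetical placeholders, not measurements from this thread, and the result still includes power-adapter conversion losses, which is why direct PMIC/fuel-gauge data is better.

```python
def active_power(load_watts: float, idle_watts: float) -> float:
    """Estimate active power as wall power under load minus wall power at idle."""
    if load_watts < idle_watts:
        # A load reading below idle means the setup changed between runs.
        raise ValueError("load reading is below idle; check the measurement setup")
    return load_watts - idle_watts


# Hypothetical meter samples in watts (illustrative only, not real data).
samples_under_load = [14.8, 15.1, 15.0, 14.9]
samples_idle = [5.0, 5.2, 4.9, 5.1]

# Average each set to smooth out meter jitter before subtracting.
mean_load = sum(samples_under_load) / len(samples_under_load)
mean_idle = sum(samples_idle) / len(samples_idle)
print(f"active power ~ {active_power(mean_load, mean_idle):.2f} W")
```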

And FWIW, this [playing the software game] isn't actually good turf for Apple (or QC, or soon MediaTek and Nvidia) in the grand scheme of things, because part of how Apple and mobile vendors like them keep power down is by reducing DRAM accesses and not using junk PMICs or junk power partitioning in their chips. The Qualcomm graphs are for platform power, which is SoC/package + DRAM + VRMs, so not very different from what you'd get at the wall (which is what you actually want to measure), and they are surprisingly honest.

This is also exactly what Geekerwan does, and what Andrei F (who now works at Qualcomm, btw, and is doing some of those measurements) has measured for years.

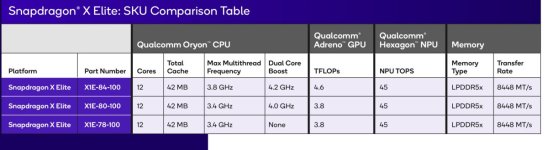

The Qualcomm graph goes up to 12W of platform power for a GB6 ST score of 2850-2900 for the X Elite on Windows. Dropping it to 8-10W would land it at about M2 ST performance, I think.

And FWIW, when you look at some M2 Macs hooked up to an external display running Cinebench R23 (a bad benchmark because of its lack of NEON, though 2024 fixes this), you do basically see this: it's more like 9-11W. That said, I don't think they took idle/static power out; if they did, it would probably remove a few watts, but their idle power is also fairly reasonable.

We don't have an actual Geekbench 6 ST comparison from Qualcomm that includes both the M2 and the X series, and FWIW I believe that's intentional on QC's part, because the M2 would have a better or similar curve, though maybe not by as much as you think.

Even using the A15 as a lower bound, something more like 6.5-8W of active power for the M2 makes sense. The A15 has 4x the SLC (32MB vs 8MB), half the DRAM bus width, less DRAM and a smaller overall chip, and it is clocked roughly 8% lower (3.23GHz vs 3.5GHz), which is where that frequency really counts, just not as much as on AMD/Intel.

I suspect it's about 7-9W depending on the load. Again, I do think Apple's is probably still more efficient, and even 7.5W vs 9.5W is a significant difference, but you're probably overstating the gap on the same node.
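To put the 7.5W vs 9.5W example in numbers, here is a quick sketch, assuming both chips hit the same ST score at those power levels (so the power ratio is also the perf/W ratio). The function name `relative_gap` is just an illustrative helper.

```python
def relative_gap(low_watts: float, high_watts: float) -> tuple[float, float, float]:
    """Compare two power draws assumed to deliver the same score."""
    delta = high_watts - low_watts
    extra = delta / low_watts                  # how much more the higher draw uses
    saved = delta / high_watts                 # how much less the lower draw uses
    efficiency_ratio = high_watts / low_watts  # perf/W advantage at equal score
    return extra, saved, efficiency_ratio


extra, saved, ratio = relative_gap(7.5, 9.5)
print(f"+{extra:.1%} power, or -{saved:.1%}; perf/W ratio {ratio:.2f}x")
```

That works out to about 27% more power (or 21% less, depending on which side you measure from), i.e. roughly a 1.27x perf/W edge: meaningful, but not a generational gulf.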

Meanwhile, the M4 delivers almost 4000 GB6 ST points at 7 watts. That is a huge difference, and it cannot be explained by the 3nm node alone.
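As a rough cross-check, here is points per watt from the figures quoted in this thread. Caveat: the X Elite numbers are Qualcomm's platform power (SoC/package + DRAM + VRMs) at the top of its curve, while the measurement basis of the 7W M4 figure isn't stated, so the ratio is only indicative, not apples-to-apples. The `Datapoint` class is a hypothetical helper for illustration.

```python
from dataclasses import dataclass


@dataclass
class Datapoint:
    name: str
    gb6_st: float  # Geekbench 6 single-thread score, as quoted in the thread
    watts: float   # power figure as quoted (measurement basis may differ)

    @property
    def points_per_watt(self) -> float:
        return self.gb6_st / self.watts


m4 = Datapoint("M4 (as quoted)", 4000, 7)
x_elite_low = Datapoint("X Elite @ 2850, platform power", 2850, 12)
x_elite_high = Datapoint("X Elite @ 2900, platform power", 2900, 12)

for d in (m4, x_elite_low, x_elite_high):
    print(f"{d.name}: {d.points_per_watt:.0f} pts/W")

# Range of the M4's advantage across the quoted X Elite score range.
print(f"ratio: {m4.points_per_watt / x_elite_high.points_per_watt:.2f}x"
      f" to {m4.points_per_watt / x_elite_low.points_per_watt:.2f}x")
```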

Well, it's never just the node; there are usually architectural and cache additions too, enabled by the added density or extra spending, I agree. But I would wager that Qualcomm with Nuvia, unlike AMD (see Zen in the graph) and Intel, is not going to sit around or waste the new nodes when it comes to mobile.

The Snapdragon 8 Gen 4 is shipping with Oryon on N3E this fall, FWIW, so I suspect some integration pains also led to less-than-perfect laptop active power, because they absolutely wouldn't put that core in a phone, even on N3E, if they didn't think they could tune it.