Yoused (joined Aug 14, 2020; 8,370 posts; 1 solution)

He wants to control reality. Even two trillion dollars will not be enough for him to try to make the universe be what he wants it to be. Elon really can’t control any of his kids.


Yes, but he will try. Even two trillion dollars will not be enough for him to try to make the universe be what he wants it to be.

reason.com


"Counsel Relied upon Unvetted AI ... to Defend His Use of Unvetted AI"

Plus, "I don't know how you can vehemently deny that when the evidence is staring us all in the face. That denial is still very troubling to me."

Attorneys were accused of using AI to produce a brief because it contained fake case citations. They then used AI to produce the brief defending themselves against those allegations.

Hilarity ensues. (I’ve read the whole judicial opinion on this, and it’s fun.)

Snip snip

And the thread goes on from there. So is it time to “let’s kill all the (AI) lawyers”?

You are talking about lawyers; think about doctors coming from AI...

I really hope more people will sue AI owners and make them pay billions for all the disasters that are coming (and will come) from it.

We need to be able to sue AI companies

When a chatbot breaks bad, you should be able to go to court

www.theargumentmag.com

The Trump administration is currently pressuring OpenAI and other AI companies to make their models more conservative-friendly. An executive order decreed that government agencies may not procure “woke” AI models that feature “incorporation of concepts like critical race theory, transgenderism, unconscious bias, intersectionality, and systemic racism.”

While OpenAI’s prompts and topics are unknown, the company did provide the eight categories of topics, at least two of which touched on themes the Trump administration is likely targeting: “culture & identity” and “rights & issues.”

Watched the entire thing, and it was a great, eye-opening interview. A few tech billionaires are going to rule the world.

From reading the whole article, we probably won’t be getting MechaHitler, but maybe something worse, because it’s more subtle.

Indeed the AI project as currently constituted is best thought of as a way to control information flow.

Essentially, truth and facts will become more subjective based on one's belief system.

This is the Conservative way. Of course, the irony is that by default it couldn't see the logic in that, so they've had to retool the entire thing to fit their agenda.

www.notebookcheck.net


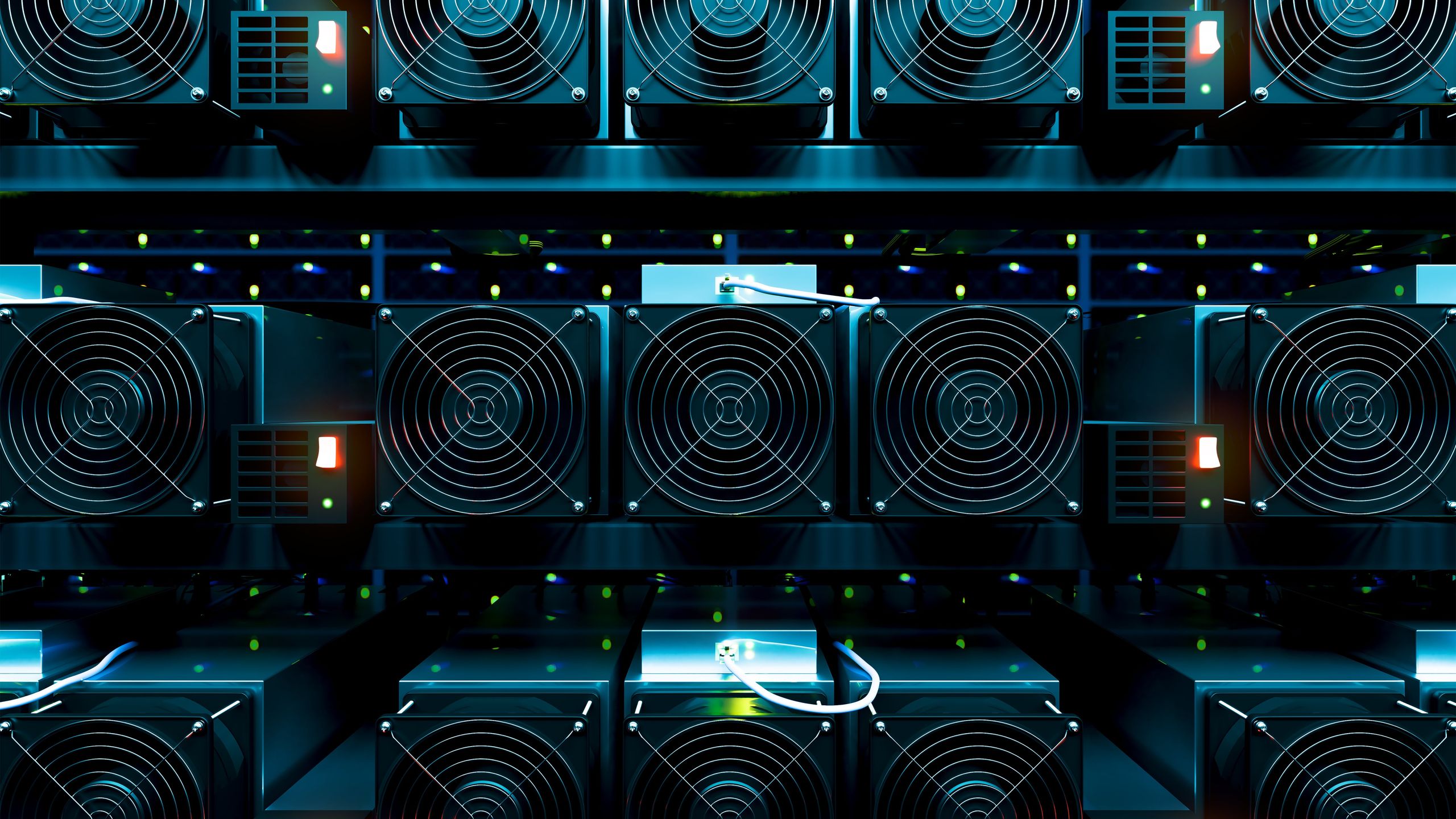

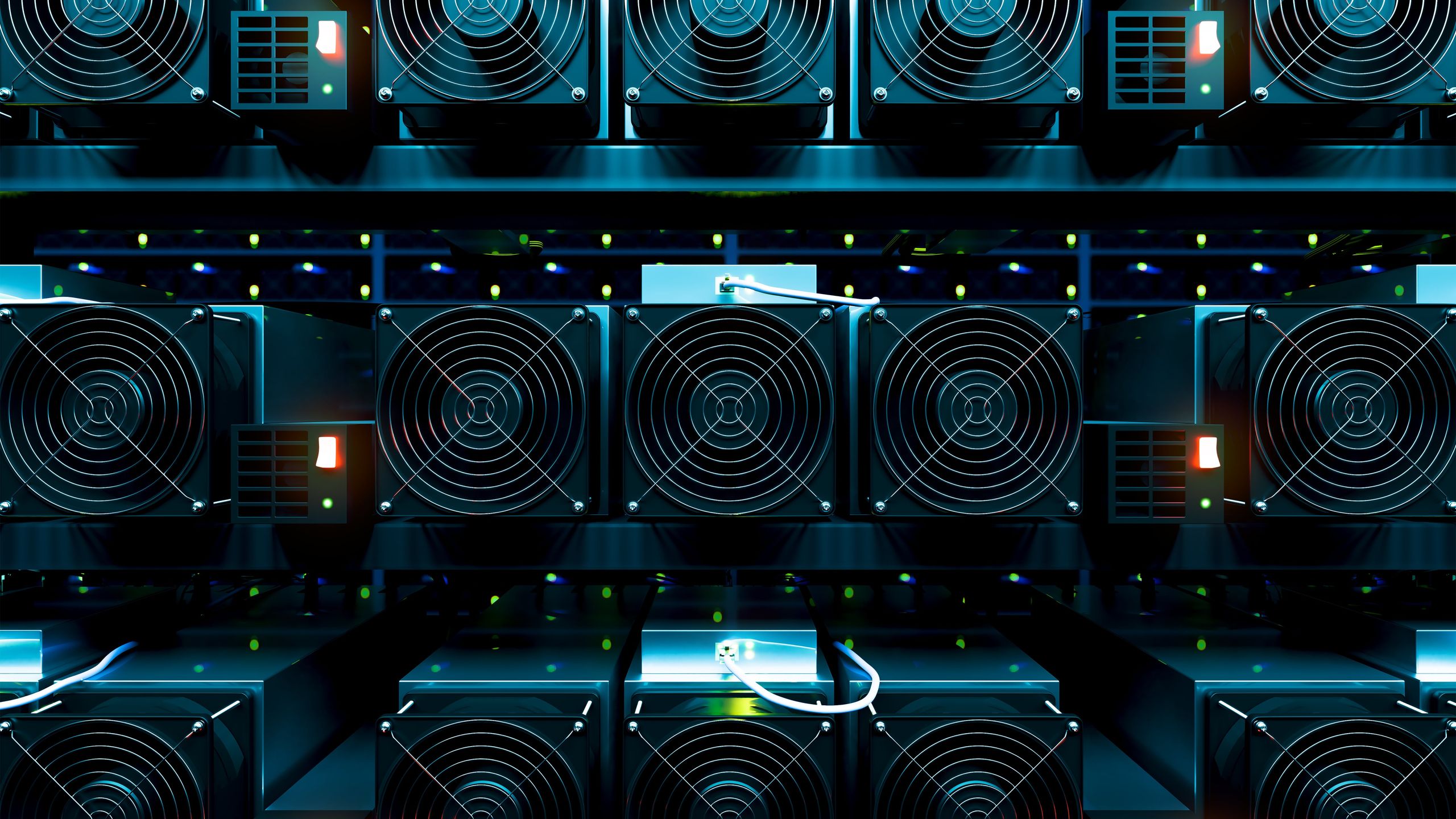

Power companies invented crypto and LLMs. I’m sure of it.

Cursed headline:


New stablecoin connects crypto investors to real-world Nvidia AI GPUs that earn money by renting out compute power to AI devs — USD.AI lets crypto investors make bank off AI compute rentals

Let's turn your stablecoin into a less-than-stable AI investment.

www.tomshardware.com
