
The AI thread

- Thread starter Eric


- Joined: May 10, 2022
- Posts: 1,592
- Main Camera: Sony

- Joined: Aug 15, 2020
- Posts: 9,560

A study found that older people tend to use AI more like an extension of Google, while younger people are using it more like a life coach or therapist, which is a slippery slope toward forming an emotional bond.

As dystopian as that sounds I actually find it kind of intriguing. People already consume audio and video content where they have zero interaction with the content creator but get fulfillment out of it and the illusion of a social life. AI could also be a driver of positivity in people's lives from being more informed to feeling less alone and depressed. The inevitable result of human and AI interaction doesn't have to be sex robots and a complete disconnect from other humans.



You do know she resigned from WaPo 1/2025 after 16 years because they censored her cartoon about Bezos debasing himself for T?

Ann Telnaes thoughts/drawings

Actually, she is doing an expo of her art, together with Liza Donnelly, in Morges, Switzerland as we speak - just a couple of miles from our house, and I'm stuck here in Trumpistan...

The Maison du dessin de presse hits hard with these two angry American women

In Morges, the Maison du dessin de presse hits hard by exhibiting these two press cartoonists who matter. An interview with no taboos.

www.24heures.ch

{translating the link text: the Presshouse is hitting hard, featuring 2 American cartoonists that matter...}

- Joined: May 10, 2022
- Posts: 1,592
- Main Camera: Sony

Oh yes, I know the WaPo story well: that's when I cancelled my subscription.


I still follow her on Substack: she's not only amazing at what she does, but she also speaks volumes about the current political situation.

Too bad you are not in Switzerland.

Merci beaucoup pour le lien à l'article du journal suisse. {Thank you very much for the link to the Swiss newspaper article.}

- Joined: May 10, 2022
- Posts: 1,592
- Main Camera: Sony

A study found that older people tend to use AI more like an extension of Google, while younger people are using it more like a life coach or therapist, which is a slippery slope toward forming an emotional bond.

As dystopian as that sounds I actually find it kind of intriguing. People already consume audio and video content where they have zero interaction with the content creator but get fulfillment out of it and the illusion of a social life. AI could also be a driver of positivity in people's lives from being more informed to feeling less alone and depressed. The inevitable result of human and AI interaction doesn't have to be sex robots and a complete disconnect from other humans.

I'll answer you with this:

Almost half of young people would prefer a world without internet, UK study finds

Half of 16- to 21-year-olds support ‘digital curfew’ and nearly 70% feel worse after using social media

Couldn't agree more with them!

- Joined: Aug 15, 2020
- Posts: 9,560


Interesting, but it doesn't exactly contradict what I posted. Plenty of people don't use AI, at least not knowingly, so the study I cited would only cover the people who do. Also, AI isn't social media. Asking it for tips on cooking, exercise, or gardening generally isn't going to serve the answer up with a side dish of outrage bait. Unless you are using Grok. Apparently any question you ask it currently is answered with information about the supposed white genocide in South Africa.

Eric

Mama's lil stinker

- Joined: Aug 10, 2020
- Posts: 15,367
- Solutions: 18
- Main Camera: Sony

AI Cheating Is So Out of Hand In America's Schools That the Blue Books Are Coming Back

Pen and paper is back, baby.

- Joined: May 10, 2022
- Posts: 1,592
- Main Camera: Sony

You would want your doctor or engineer to know what they are doing...

Sales of blue books this school year were up more than 30% at Texas A&M University and nearly 50% at the University of Florida. The improbable growth was even more impressive at the University of California, Berkeley. Over the past two academic years, blue-book sales at the Cal Student Store were up 80%. Demand for blue books is suddenly booming again because they help solve a problem that didn’t exist on campuses until now.

AI Cheating Is So Out of Hand In America's Schools That the Blue Books Are Coming Back

Pen and paper is back, baby.

gizmodo.com

KingOfPain

Site Champ

- Joined: Nov 10, 2021
- Posts: 761

How many more times does this need to happen before they finally learn not to rely on LLMs for case files?

arstechnica.com


Unlicensed law clerk fired after ChatGPT hallucinations found in filing

Law school grad’s firing is a bad omen for college kids overly reliant on ChatGPT.


Some insight into how an LLM works. The math one is pretty weird...

Tracing the thoughts of a large language model

Anthropic's latest interpretability research: a new microscope to understand Claude's internal mechanisms

www.anthropic.com

New Apple Research in a similar vein for puzzles:

The papers may be this and this, from the link posted by @KingOfPain a week ago.

Glad you posted this. I took a note of King’s link but hadn’t followed up.

Sabine is good. Her presentation supports my developing sense, from limited usage of ChatGPT and occasionally Gemini, that there is indeed more than next-token prediction happening, but still too little for it to be really useful or reliable.

The papers look very interesting from a quick skim. Great work at Anthropic.

The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity

Recent generations of frontier language models have introduced Large Reasoning Models (LRMs) that generate detailed thinking processes…

It suggests a collapse of ability to scale and lack of intrinsic reasoning about the problem.

I thought I’d share this critique of the above study:

Expert pours cold water on Apple's downbeat AI outlook — says lack of high-powered hardware could be to blame

If Apple wants to catch up with rivals, it will either have to buy a lot of Nvidia GPUs or develop its own AI ASICs.

I have to say I don’t find the criticism very compelling. Apple wasn’t training new models; they were deploying previously trained ones, and while it’s unclear what hardware Apple used for its inferences (a local cluster) … the behavior of the model really shouldn’t depend on that. The only thing I could think of is that the models became too small to deal with the complexity of the larger puzzles, but at its core that’s a model problem, not a hardware problem per se, and it shouldn’t really depend on GPU vs TPU vs CPU except in how long you are willing to wait for results. So it isn’t clear why his focus is on what hardware Apple used.

Unfortunately, the original critique is written in Korean, so the above article, which pulls quotes out of context from a machine translation, may not be doing the professor’s argument any favors. Then again, maybe someone here can shed light on what he was trying to say, because it made little sense to me.

Anyway, I don’t know if Apple plans to formally publish their results, but these are the sorts of issues often ironed out in peer review (if they are issues).

Pretty good rebuttal here:

Seven replies to the viral Apple reasoning paper – and why they fall short

A study found that older people tend to use AI more like an extension of Google, while younger people are using it more like a life coach or therapist, which is a slippery slope toward forming an emotional bond.

As dystopian as that sounds I actually find it kind of intriguing. People already consume audio and video content where they have zero interaction with the content creator but get fulfillment out of it and the illusion of a social life. AI could also be a driver of positivity in people's lives from being more informed to feeling less alone and depressed. The inevitable result of human and AI interaction doesn't have to be sex robots and a complete disconnect from other humans.

I posted a few links earlier in the thread, and more has been written since, but the “AI as a therapist/friend” is, so far, about as dystopian as it gets. It’s manipulative and addictive, and it affirms people’s worst aspects in all the wrong ways (simply reflecting the person back at themselves). While one has to balance the negative stories against the truism that anything new will be met with suspicion, this looks really bad. Really really bad.

Yoused

up

- Joined: Aug 14, 2020
- Posts: 8,370
- Solutions: 1

… the “AI as a therapist/friend” is, so far, about as dystopian as it gets. … this looks really bad. Really really bad.

In Frank Herbert's Dune, the backstory to the use of "mentats" was that in some earlier era, the excessive over-use and abuse of computer tech led to an empire-wide ban on them. It looks just a little bit like we are seeing some of Herbert's foresight. It is just too easy to suffer multiple effects of over-use of computers: vital systems becoming vulnerable to hacking, the loss of hard-copy information that would benefit future generations in a world where the tech will likely fail (making useful info inaccessible), and the distortion of socialization patterns that may cause difficulties for a transition generation.

"AI" is a problem, but it is only part of the problem.

If I remember right, the prohibition in Dune is mostly on “thinking machines” as a result of the Butlerian Jihad. Recent stories by his son retconned this into a Terminator-style “war against the machines”, but I believe you are correct that originally it was a religious war over humanity’s over-reliance on computers and especially AI. If I remember right, the jihad also caused a galaxy-wide economic and political collapse that led to the Empire we see in the first novel taking over, and to the need for spice for safe/fast space travel: thinking machines had previously been needed for it but were no longer available, and mentats weren’t good enough (hence the collapse until the discovery of spice). This is all ancient history by the time of Dune.

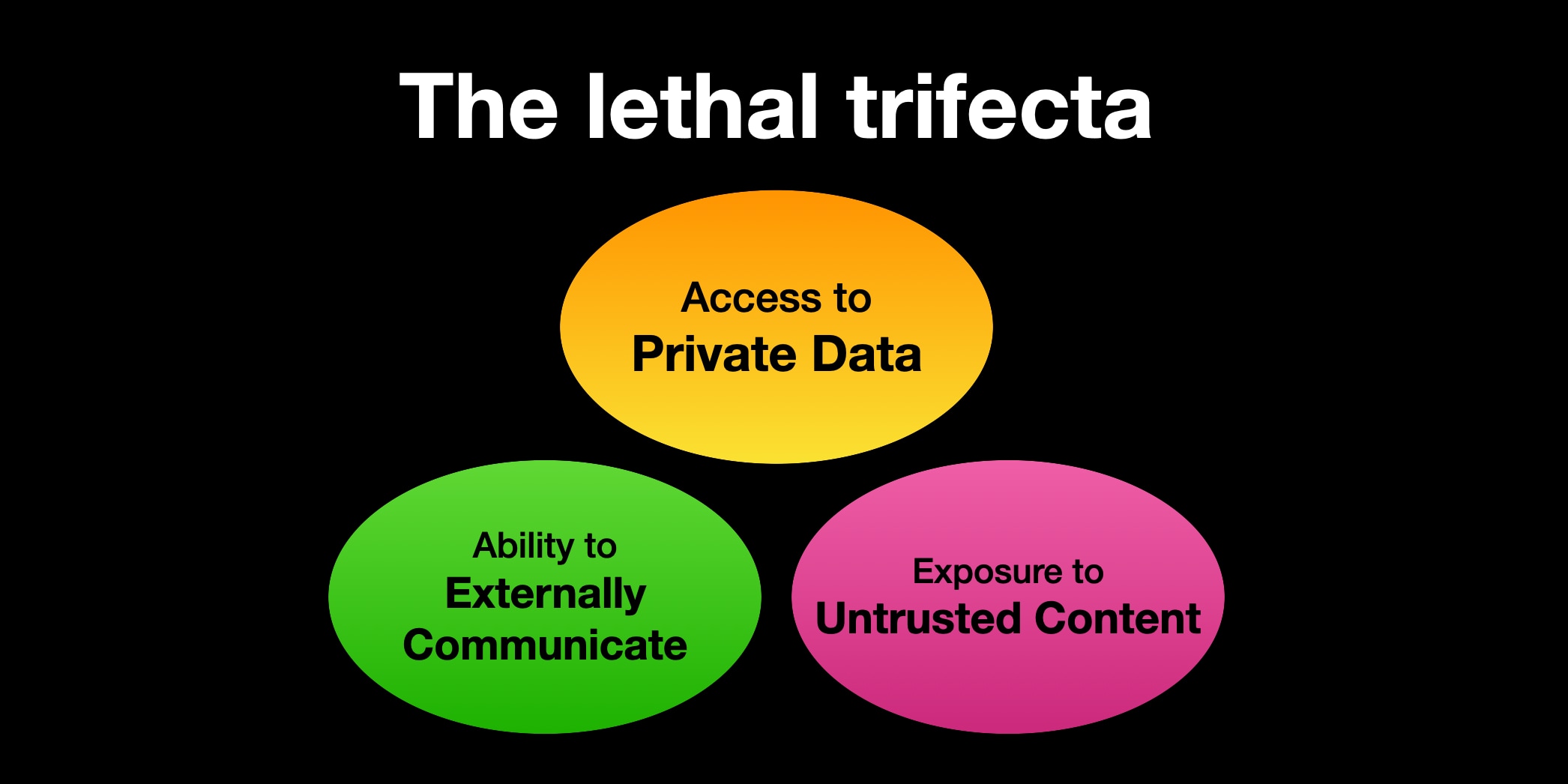

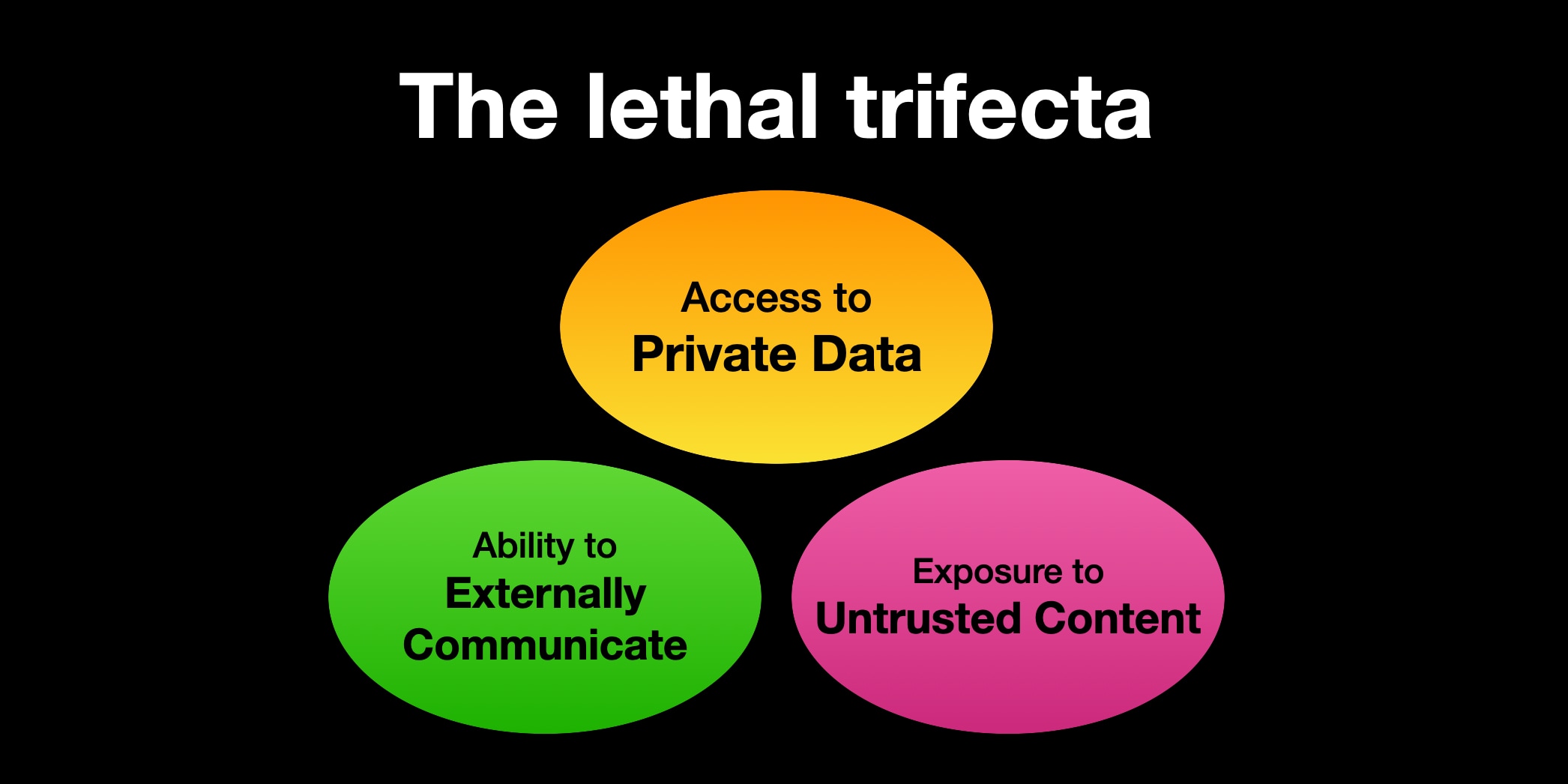

On how “AI agents” pose a massive security risk:

AI agents designed to use tools like web browsers and email are super easy to fool into running prompts hidden in those outside sources. With access to private data and a way to send that data to the outside, they’re huge liabilities. Attempts to harden these agents are currently useless: given the huge number of threats and the ease of deployment, stopping 95% of threats is little different from stopping none.

The lethal trifecta for AI agents: private data, untrusted content, and external communication

If you are a user of LLM systems that use tools (you can call them “AI agents” if you like) it is critically important that you understand the risk of …

simonwillison.net
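To make the trifecta and the "95% is no better than nothing" point concrete, here is a minimal Python sketch. `AgentCapabilities`, `has_lethal_trifecta`, and `breach_probability` are hypothetical names for illustration only, not part of any real agent framework. The probability helper also assumes each injection attempt is independent and is blocked with the same fixed probability, which is a simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical summary of what a tool-using LLM agent is allowed to do."""
    reads_private_data: bool         # e.g. can read the user's email or files
    ingests_untrusted_content: bool  # e.g. browses the web or reads incoming mail
    communicates_externally: bool    # e.g. can send email or make HTTP requests


def has_lethal_trifecta(agent: AgentCapabilities) -> bool:
    """True only when all three risk factors are present at the same time."""
    return (
        agent.reads_private_data
        and agent.ingests_untrusted_content
        and agent.communicates_externally
    )


def breach_probability(block_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` injection attempts gets past a
    filter that blocks each one with probability `block_rate`.

    Assumes attempts are independent and equally likely to be blocked.
    """
    if not 0.0 <= block_rate <= 1.0:
        raise ValueError("block_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # P(every attempt blocked) = block_rate ** attempts; the complement is a breach.
    return 1.0 - block_rate ** attempts


if __name__ == "__main__":
    # A hypothetical email assistant that reads the inbox, sees mail from
    # strangers, and can send replies has all three capabilities.
    email_assistant = AgentCapabilities(
        reads_private_data=True,
        ingests_untrusted_content=True,
        communicates_externally=True,
    )
    print("trifecta present:", has_lethal_trifecta(email_assistant))
    for n in (1, 20, 100):
        print(f"{n} attempts -> breach probability {breach_probability(0.95, n):.3f}")
```

Under those assumptions, a filter that blocks 95% of attempts lets at least one through about 64% of the time after 20 attempts and more than 99% of the time after 100, which is the sense in which partial hardening barely helps once an agent is exposed to a lot of untrusted content. Removing any one leg of the trifecta, rather than filtering harder, is what makes `has_lethal_trifecta` return `False`.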

