Apple has been "eh, do your best" when it comes to making good use of lotsa cores. But at least they have Dispatch, which (I think, anyway; I have not tried it yet) simplifies widening out your app while staying core-agnostic.

Show me a platform that does it much better than Apple, though. Between GCD and Swift Concurrency (I won't repeat @casperes1996's good details here), Apple has been one of the few actually tackling this at the platform level. Last time I checked Windows, the thread pool APIs required you to handle scaling to the CPU core count yourself, etc. Linux is much the same, but I could be wrong.

EDIT: I guess .NET has its own async/await, but some of this depends on the internal implementation of the thread pool those tasks run on. When I used it, the default ThreadPool implementation in .NET was quite vulnerable to thread explosion if your queued tasks blocked on I/O, and it was not recommended for blocking I/O for that reason. So if async/await avoids this problem, it might be similar enough to Swift Concurrency to be useful, but .NET isn't exactly a popular platform.

The thing about multithreading a process is that it causes your problems to expand, shall we say, exponentially with each added thread. Classic threading is nightmarish. From what I understand about Dispatch, it looks to be somewhat-to-a-lot less headache-inducing and, theoretically, lets you make use of as many cores as the OS wants to give you. So going wide in macOS could be a significant gain. Maybe.
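For a rough idea of what "core-agnostic" looks like in practice, here is a minimal Swift sketch using Dispatch's `DispatchQueue.concurrentPerform`, which spreads loop iterations across however many worker threads GCD decides to use. The `checksum(of:)` and `parallelChecksums(_:)` functions are hypothetical stand-ins for per-item CPU work, not code from anyone's app.

```swift
import Dispatch

// Hypothetical per-item CPU work: a simple rolling checksum over a chunk.
func checksum(of chunk: [UInt8]) -> UInt64 {
    chunk.reduce(UInt64(0)) { ($0 &* 31) &+ UInt64($1) }
}

// Fans the chunks out with concurrentPerform. The code never asks for the
// core count; GCD picks the number of worker threads for the machine.
func parallelChecksums(_ chunks: [[UInt8]]) -> [UInt64] {
    var results = [UInt64](repeating: 0, count: chunks.count)
    results.withUnsafeMutableBufferPointer { buffer in
        DispatchQueue.concurrentPerform(iterations: chunks.count) { i in
            // Each iteration writes only its own slot, so no lock is needed.
            buffer[i] = checksum(of: chunks[i])
        }
    }
    return results
}
```

The same binary would spread this over 4 or 50 cores without changes. It only pays off when each iteration does enough work to outweigh the scheduling overhead.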

I've been working with Dispatch and Swift Concurrency since they were introduced, and honestly, I have yet to find a better way to multi-thread general-purpose apps. You can simply dispatch tasks to the managed thread pool and, with Swift Concurrency, have the language itself manage data-access bottlenecks with actors. It's much easier on Apple platforms to say "Hey, this thing really should fork into an extra thread, do the work, and then update some state for the UI." The gotcha is that this still doesn't suddenly make apps embarrassingly parallel. But they do get the benefit of freeing up the main thread from processing images and the like, which makes the UI feel better than it would otherwise.
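A minimal sketch of that pattern, assuming Swift 5.7+ concurrency; `ThumbnailStore` and `makeThumbnail(from:)` are hypothetical names standing in for real image work. A task group fans the work out onto the runtime's shared cooperative thread pool (on Apple platforms, limited to roughly one thread per core), and an actor serializes updates to the shared state, so there is no manual locking.

```swift
// The actor owns the shared state; callers must `await` to touch it,
// and the runtime guarantees one access at a time.
actor ThumbnailStore {
    private var thumbnails: [Int: String] = [:]

    func store(_ thumbnail: String, for id: Int) {
        thumbnails[id] = thumbnail
    }

    func thumbnail(for id: Int) -> String? { thumbnails[id] }

    func count() -> Int { thumbnails.count }
}

// Hypothetical stand-in for CPU-heavy work such as decoding and scaling an image.
func makeThumbnail(from id: Int) -> String {
    "thumb-\(id)"
}

func generateThumbnails(ids: [Int], into store: ThumbnailStore) async {
    await withTaskGroup(of: Void.self) { group in
        for id in ids {
            // Each child task runs on the shared pool, not a thread we created.
            group.addTask {
                let thumb = makeThumbnail(from: id)
                await store.store(thumb, for: id)
            }
        }
    }
}
```

In a real app the finished state would typically be handed to the UI on the main actor; that step is left out so the sketch runs outside an app.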

The future is specialised processors, and until then, scaling out on general purpose hardware - if possible.

This is exactly why I am skeptical of a 50-core consumer processor, though. Take the example from @casperes1996, where the CPU cores spike while going wide on image processing: this isn't that uncommon these days, and my own app does something similar. But going wider just means worse utilization overall, because these spikes are brief. I'm also somewhat surprised that Apple has only enabled GPU processing for this, when an ASIC block that provides JPEG/WebP compression/decompression, similar to what exists for H.264/H.265 etc., would be quite beneficial for these common use cases.

And for tasks that are already embarrassingly parallel, the GPU is just sitting there waiting for you in many cases, apart from some specific workloads that are poorly suited to a GPU pipeline (code compilation, for example).

This smells more like a Threadripper competitor, and those who need it know who they are. I certainly don't do enough to justify paying the premium for a Threadripper when I could put that money toward a beefier GPU if I were doing neural networks or Blender.

I think part of the reason current software is so thread-ignorant is due to the legacy of 4 cores for so long.

As someone who has worked on "thread-ignorant" software for the last couple of decades, and who works to get wins by forking things off onto separate threads where we can, I can confirm this isn't as big a reason as one might expect. Even where threads are employed, the average consumer-level app spends so much time idle, with bursts of activity, that the trade-offs being asked here are: Do we underutilize even more cores to gain X ms of latency improvement between someone hitting a button and seeing an image load, and can we even spin up enough work to do this? Do we even need more cores if our 16 threads are all blocked waiting on I/O?

Heavier tasks that can be embarrassingly parallel generally already are taking advantage of it and can scale up, if they haven't already moved to the GPU. There's some legacy stuff that underutilizes what's already available, but that's legacy more than anything else. The promise of everything becoming an embarrassingly parallel problem just hasn't materialized.
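As a rough illustration of why work that isn't embarrassingly parallel stops scaling, Amdahl's law gives the best-case speedup $S$ on $N$ cores when a fraction $p$ of the work can run in parallel and the rest stays serial:

$$
S(N) = \frac{1}{(1 - p) + \frac{p}{N}}
$$

With an assumed, purely illustrative $p = 0.9$: $S(8) = 1/(0.1 + 0.1125) \approx 4.7$, $S(50) = 1/(0.1 + 0.018) \approx 8.5$, and no core count can exceed $1/(1 - p) = 10$. Going from 8 to 50 cores buys less than a 2x gain here, and that is before counting the idle time between bursts.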

And if AI does take over everything, then that will all be running on something like a GPU anyway, rather than a CPU.

Yes, granted, some software is inherently difficult to break into smaller chunks you can scale out. But I think Intel has a lot to answer for as well: the incentive simply hasn't been there because the available thread count has been small for so long, threading is hard, and software moves slowly in general.

However, the days of regular 2x IPC improvements have been well and truly over for decades at this point; hence the CPU vendors suddenly adding a heap more cores at an accelerated rate. Those software architects who can scale out will win. Those who can't will be marginalised and left behind.

Honestly, I think the reality is that the software that can't scale out is already pretty quick and nobody cares. Spotify, Facebook or YouTube can't really scale and nobody is asking them to. 3D rendering, neural networks and code compilation all can, but where you can, the GPU is already the place you want to run them rather than the CPU. Affinity Photo, for example, makes Photoshop feel old because it uses Metal for processing. A really wide CPU won't help it handle more layers in real time, but a wider GPU would. The main places where ever-wider CPUs matter right now are niches where people are well aware of what they need: running simulations that they wrote on the CPU before expending the effort of making them run in CUDA (if possible), compiling large projects, etc.

Once apps can scale to beyond 1 thread, N threads is less problematic.

Disagree, in part because of the bit you reference immediately after this:

I did, however, see an article some years ago saying that ~8 cores is about the limit of scaling for typical multi-threaded end-user apps. I forget where. I guess more threads just enable you to run more apps.

I can't make 5 network requests against a single server (say, syncing a music library's database to local storage) benefit from more than 5 threads at most. And even that is overkill, because of data dependencies (I need data from the first request for 2 of the others to make any sense, etc.) and because the threads I just spawned are really all just waiting on I/O. Research shows that you really don't want to spread your network requests across N threads anyway. The processing itself isn't necessarily expensive, other than that it can cause the UI thread to hitch during a UI update. So a thread pool where I can dispatch the work can do this in just 2 threads and be more efficient in terms of resource utilization, both in CPU time spent and in memory/cache usage.
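To make the data-dependency point concrete, here is a minimal Swift Concurrency sketch, assuming Swift 5.5+. `fetch(_:after:)` is a hypothetical stand-in for a network call that simulates latency with `Task.sleep`, and the endpoint names and `LibrarySnapshot` type are made up for illustration. Independent requests overlap, the two dependent ones wait for the first, and a request that is suspended waiting on "I/O" does not hold a thread, so a small pool can service all five.

```swift
// Hypothetical network call: suspends (rather than blocking a thread)
// for ~10 ms of simulated latency, then returns a tagged result.
func fetch(_ endpoint: String, after dependency: String? = nil) async throws -> String {
    try await Task.sleep(nanoseconds: 10_000_000)
    if let dependency = dependency {
        return "\(endpoint)<-\(dependency)"
    }
    return endpoint
}

struct LibrarySnapshot {
    let artists: String
    let albums: String
    let tracks: String
    let playlists: String
    let artwork: String
}

func syncLibrary() async throws -> LibrarySnapshot {
    // Independent requests start immediately and run concurrently.
    async let playlists = fetch("playlists")
    async let artwork = fetch("artwork")

    // Two requests need the first one's result, so they start after it.
    let artists = try await fetch("artists")
    async let albums = fetch("albums", after: artists)
    async let tracks = fetch("tracks", after: artists)

    return try await LibrarySnapshot(
        artists: artists,
        albums: albums,
        tracks: tracks,
        playlists: playlists,
        artwork: artwork
    )
}
```

The dependency chain, not the core count, sets the minimum wall-clock time here: roughly two round trips, however many threads are available.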

When our devices are becoming more and more like thin clients again (talk about coming full circle), talking to the cloud for the average use case, you wind up with apps that behave very similarly: 1 UI thread, a handful of background threads to handle networking and to munch data from the server into something the UI can use, and whatever idle threads the OS spins up on your behalf. It gets a little more complicated if you are building an Electron app or need to process what comes from the server (images), but generally these apps already have low CPU utilization except for very brief periods.

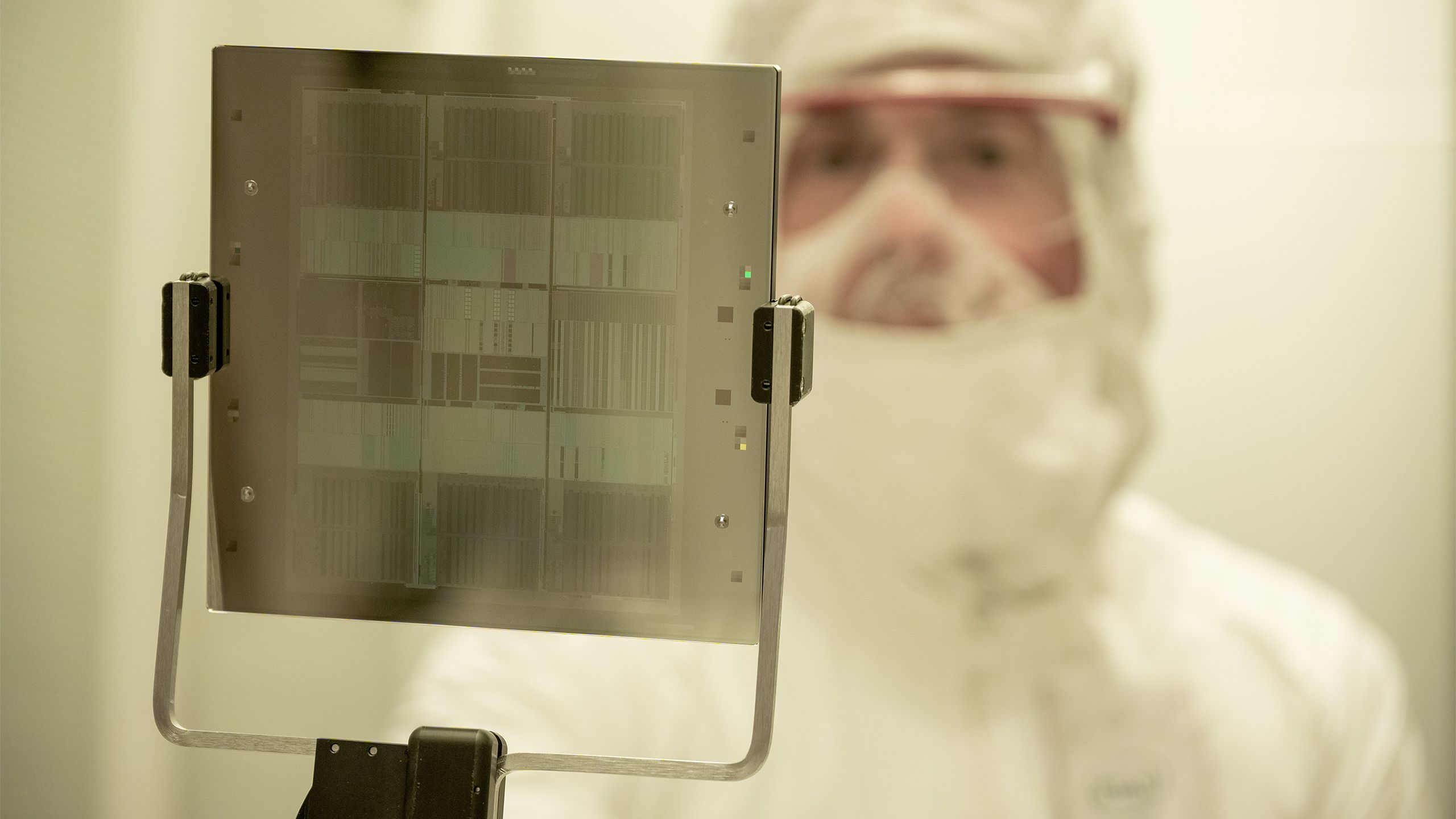

I do find this amusing; I've been around long enough to see this come full circle. Back in the '80s, platforms like the Amiga, the consoles, arcade machines, etc. were doing great things with highly specialised ASICs, until general-purpose processing scaled quickly enough, via IPC improvements and process shrinks, to build all of this into the CPU, and dedicated ASICs stopped being worth it due to economies of scale. Same with WinModems, etc.

Now? We've hit the wall on IPC improvement, so we're heading back toward specialist ASICs again.

Yup, and I'm not sure why this would be bad?