Intel has one here:

I think we’ve discussed that testing just has to be taken as a kind of big mixed bag. Noting that those would also be informative.

I still haven’t seen ST perf/w curves for LNL at a platform level from Intel or even package (whatever), and I strongly suspect the M3’s curve is meaningfully superior tbh

Slide #22 (numbers aren't on the presentation itself annoyingly, I just downloaded the PDF).

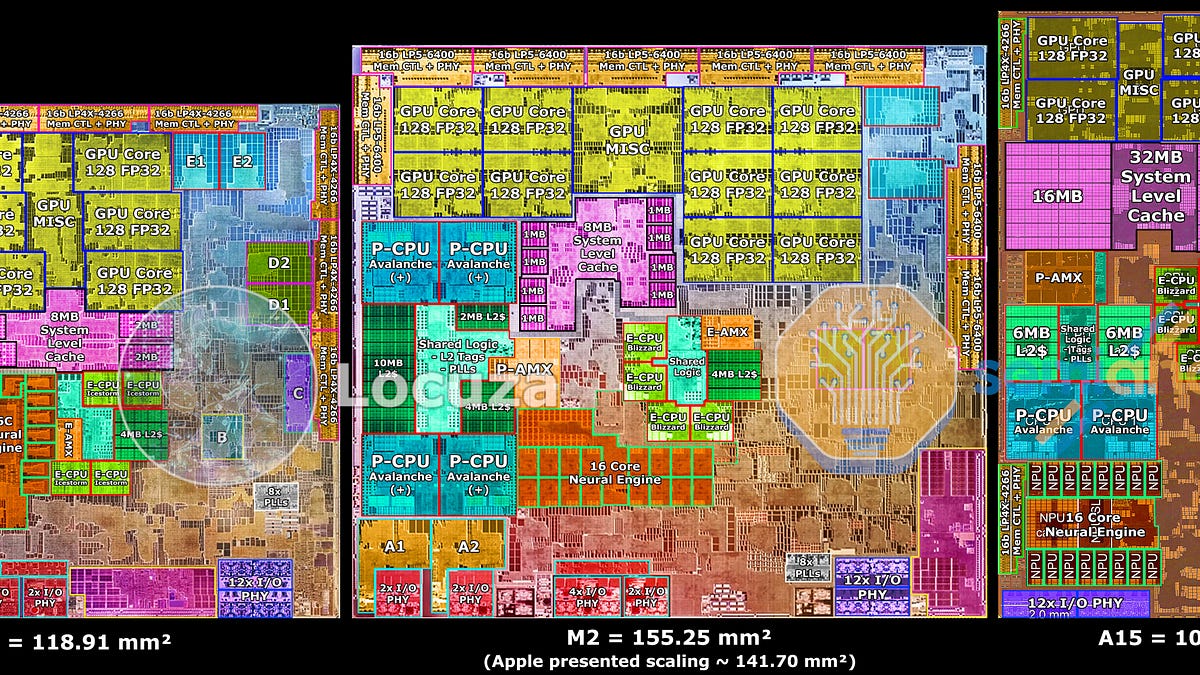

They claim the M3 is on their power curve. However, they use SpecInt as their performance metric, and as usual they use their own Intel compiler to give themselves a huge advantage in that one test. You can see that on slide 20, which compares SpecInt with CB R24 and GB 6.3. So those results are kinda meaningless. Also on slide 22 they seemingly have the Elite 80 getting the same SpecInt MT performance as the base M3 when drawing 50W (which I cannot believe is accurate), but then the arrow they draw from its performance/watt dot doesn't go to their own perf/W line, and they say "~40% lower power!" with some note about "Intel Instrumented". As far as I can tell that isn't explained in the notes at the bottom or at the end either, but maybe I missed it. Slide #22 is a very odd graph all around, basically.

I suspect Intel is also downclocking the cores, or rather using the E-cores as much as possible, to save power. Now, Apple uses theirs too, and even Qualcomm, we found out, will limit clocks depending on the OEM, and all that matters is actual responsiveness and efficiency from a user perspective. But since I can’t actually experience that web browsing myself, I don’t know what it’s like.

It’s not really a task-completion thing where we can measure efficiency (performance/watt); it’s just a run-on test with breaks.

Which is ecologically relevant! It’s fair! But as far as the chip goes, it means Intel could cheat this to a degree, and the end user experience might still feel a bit smoother on the Mac (or the X Elite system, which is also quite close albeit with a higher resolution display) etc.

In other words, when you have tests like this and low idle power, you can get a good result, and maybe people are fine with that. But it may not be the case that the E or P cores are actually that impressive on a performance/W level, and we’re not really going to be able to know because of how the test is set up.
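To put rough numbers on that point, here's a minimal sketch of why a mostly idle test rewards low idle power rather than perf/W under load. Every figure in it is made up for illustration (the wattages, the 10% duty cycle, the 60 Wh battery), and `chip_a` / `chip_b` are hypothetical, not measurements of any real laptop. It also assumes both chips finish the same work in the same active time, which a real test wouldn't guarantee.

```python
def average_power(active_w, idle_w, duty_cycle):
    """Mean power draw for a test that is under load `duty_cycle` of the time
    and idle for the rest."""
    return active_w * duty_cycle + idle_w * (1.0 - duty_cycle)


def battery_hours(battery_wh, avg_w):
    """Runtime in hours for a given battery capacity and mean power draw."""
    return battery_wh / avg_w


# Hypothetical numbers, chosen only to illustrate the argument.
# chip_a: better under load (less power for the same work), higher idle.
# chip_b: worse under load, lower idle.
chip_a = {"active_w": 6.0, "idle_w": 1.5}
chip_b = {"active_w": 8.0, "idle_w": 1.0}

BATTERY_WH = 60.0   # assumed battery size, same for both
LIGHT_DUTY = 0.10   # assumed: test is under load only 10% of the time

light_a = average_power(chip_a["active_w"], chip_a["idle_w"], LIGHT_DUTY)
light_b = average_power(chip_b["active_w"], chip_b["idle_w"], LIGHT_DUTY)

hours_a = battery_hours(BATTERY_WH, light_a)
hours_b = battery_hours(BATTERY_WH, light_b)

# Sustained load (duty cycle 1.0) is where perf/W of the cores shows up.
load_a = average_power(chip_a["active_w"], chip_a["idle_w"], 1.0)
load_b = average_power(chip_b["active_w"], chip_b["idle_w"], 1.0)

print(f"light test: A {hours_a:.1f} h, B {hours_b:.1f} h")
print(f"full load:  A {load_a:.1f} W, B {load_b:.1f} W")
```

With these made-up inputs, chip B lasts longer in the light test even though chip A draws less power for the same work under load. So a browsing-with-breaks battery result on its own can't tell you which cores have the better perf/W.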

That's exactly what they're doing. They even say so themselves, explicitly, on slide 19 about the Thread Director. Basically everything starts on the E-cores and only moves to the P-cores if it actually has to. Which, as you say, is perfectly fine for something like watching video and other such tasks! And it's a huge improvement over where Intel was before, so Dave2D's results from @Jimmyjames' video hold with respect to that. But yeah, it doesn't tell us much about perf/W under real load, and Intel's own results with respect to the latter are a bit sketchy to say the least.