Yes, Apple is allowed to do things others can't.

Nope. The OEM I am selling it to has no alternative source.


Nuvia: don’t hold your breath

- Thread starter Cmaier


On Snapdragon vs AMD and Apple performance and efficiency in Cinebench R24:

I wasn't feeling too well last night and decided to make the above bubble graphs based on the Notebookcheck data, because I agree with @Nycturne that their bar graphs, especially the separation between performance and efficiency for the MT tests, make for some hard reading. Inside the bubbles for single-thread efficiency I list the points per watt (the size of each bubble corresponds to the points/watt value). For multi-thread efficiency, inside the bubbles (or around them when it got too crowded) I list both the score and the points per watt, since that gets a little trickier to track, and I have a point about that later. Here are the standout observations that I see:

1) The Qualcomm core doesn't quite match Avalanche's (the M2 P-core) performance/efficiency in CB R24.

2) The Qualcomm Elite 78 is in an Asus laptop while the 64 and 80 are from Microsoft Copilot devices (tablet hybrid, I believe). My working hypothesis is that the Asus has much worse power delivery efficiency to the chip under load. It *should* be better-binned silicon than the 64, but it is obviously worse than both the 64 and the 80, which either achieve better ST performance at the same wattage or lower wattage at the same ST performance. Unfortunately, in MT Notebookcheck only had performance curves for the Asus, but again we can see that the 80 and 64 are superior (the 64 is technically slightly worse, but given that it has 2 fewer cores it should be much, much worse, and isn't: it's about 5% less efficient at roughly the same power/performance). This is where having software measurements of core power, to compare their estimates against the hardware measurements, would've been really beneficial.

3) The Ryzen 7 does not come off well here at all. The Intel chip, not pictured, is worse, but in single core the AMD Ryzen pulls almost as much wattage as the entire 64/78/80. It is nearly 3x less efficient than the M2 Pro and 2-2.6x less efficient than the Oryons, for much less ST performance. In other words, if AMD tried to boost it even further to match their performance, its efficiency would get even worse. In multicore its best showing is around the 56W mark, where it closes the efficiency gap, but once again when it tries to actually match the performance of the Snapdragon at that wattage it has to draw over 80W and still doesn't manage it. And as I said, the 80-class chip would've been even better: at 39.6W it achieves nearly the same level of performance (within 2%) as the AMD at 82.6W, again roughly 2x the efficiency at that performance level. This is why I wanted to emphasize the score along with the efficiency in the multi-thread test. Having said all that, at 56W the AMD processor gets close (within 12-20% efficiency) to the Snapdragon/M2 Pro, and I suspect the 30-60W range is where the AMD chip is best suited. Pair a particularly inefficient OEM implementation of the Snapdragon chip with a particularly good implementation of the AMD chip (the AMD device is a German Schenker VIA; I don't know its reputation) and yeah, they could absolutely line up. It's also not clear what the Snapdragon perf/W curve looks like below 35W; if it steepens (likely), it could naturally match the AMD processor there. But as bad as the perf/W of the Asus Oryon is relative to the MS Oryon here, its perf/W curve is still clearly above AMD's curve for the tested values.

4) The two MS Snapdragons kinda support the idea that the 12-core Snapdragon chip is hamstrung. At roughly the same power, with two more cores, the 80-class is only able to muster ~10% greater efficiency than the 64-class processor. That's not *bad*, but I *think* it really should be better, closer to 20%.
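The "roughly 2x" efficiency claim in point 3 is just arithmetic on the two power figures. The sketch below assumes "within 2%" means the Snapdragon's score is between 98% and 100% of the AMD score; the helper name `efficiency_ratio` is made up for illustration, and no absolute scores are needed.

```python
# Sketch: compare points-per-watt of two chips at roughly matched performance.
# Assumption: "within 2%" is read as score_A / score_B between 0.98 and 1.00.

def efficiency_ratio(perf_ratio: float, power_a_w: float, power_b_w: float) -> float:
    """Return (points/W of chip A) / (points/W of chip B).

    perf_ratio is score_A / score_B; the powers are load watts.
    """
    return perf_ratio * power_b_w / power_a_w


SNAPDRAGON_W = 39.6  # 80-class Snapdragon, figure from the post
AMD_W = 82.6         # Ryzen 7, figure from the post

for perf_ratio in (0.98, 1.00):
    r = efficiency_ratio(perf_ratio, SNAPDRAGON_W, AMD_W)
    print(f"score ratio {perf_ratio:.2f} -> efficiency ratio {r:.2f}x")
```

This prints about 2.04x and 2.09x, so "roughly 2x" holds across the whole "within 2%" band.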

Caveats: Qualcomm's CB R24 scores relative to Apple don't look as good as one would think they should, and in GB6's short ray tracing subtest the Oryon cores improve significantly relative to Apple's M2 Pro and come out closer to what one would expect from the design. However, CB R24 is even worse for the AMD processor, and in GB6 it catches up a little (in performance) to both Nuvia and Apple (power untested, but probably still bad, especially in single core). Thus, CB R24 may represent a "worst case scenario" for the AMD processor here. I might try to recapitulate the Geekbench ISO graphs @leman and I created a while back, but include one of these Snapdragons. Further, as outlined in my and @Nycturne's conversation, the full Qualcomm chip has 12 cores, 12 threads vs 8 cores, 16 threads for the AMD processor. It's unclear yet how much silicon each needs, but it is possible that the 64-class Snapdragon represents the more "fair" comparison as it has 10 cores, 10 threads. Assuming a 22% increase from SMT for the AMD processor, that's roughly 10 effective threads, and even the M2 Pro's 8+4 configuration works out to about 9-10 effective P-core threads. What the best way to normalize multi-threaded tests is remains debatable. I'm leaning towards silicon area, since for embarrassingly parallel operations you can always throw more cores/threads at the problem and dramatically improve performance and performance/watt. So IMO figuring out how performant an architecture is in MT scenarios needs some sort of normalization to rationalize the results, or you can wind up with absurdities like comparing the efficiency of downclocked Threadrippers to base M processors.
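The "effective thread" normalization from the caveats can be sketched as a weighted count. The 22% SMT uplift comes from the post. The 0.25-0.5 weight for an M2 Pro E-core relative to a P-core is an assumption, chosen only because it reproduces the post's "about 9-10". The function name `effective_threads` is hypothetical.

```python
# Sketch of a P-core-equivalent thread count for normalizing MT results.
# Assumptions: SMT adds ~22% per core (from the post); an E-core counts as
# 0.25-0.5 of a P-core (assumed weight, not a measurement).

def effective_threads(p_cores: int, smt_gain: float = 0.0,
                      e_cores: int = 0, e_core_weight: float = 0.0) -> float:
    """Approximate P-core-equivalent threads for multi-threaded comparisons."""
    return p_cores * (1.0 + smt_gain) + e_cores * e_core_weight


print("Ryzen 7 (8C/16T):", effective_threads(8, smt_gain=0.22))
print("X1P-64 (10C/10T):", effective_threads(10))
print("X1E-80 (12C/12T):", effective_threads(12))
for w in (0.25, 0.5):  # M2 Pro: 8 P-cores + 4 E-cores
    print(f"M2 Pro (E-core weight {w}):", effective_threads(8, e_cores=4, e_core_weight=w))
```

Silicon area, which the post leans towards, would replace these per-core weights with measured core-plus-cache area, which is not available here.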

Anyway, I hope you all found this way of revisualizing the Notebookcheck data useful!


Long video mostly on how terrible the Windows-Snapdragon experience has been for developers, how bad Windows has gotten in general, and MS’s inability to execute, especially on ARM, for the past decade. Apparently engineers at MS and Qualcomm are working crunch time to try to solve these issues, but some things are just impossible to fix quickly, like the 2023 DevKit being largely unhelpful for the porting process, and the 2024 DevKit, which is more representative of the hardware and drivers, not being out and not going to be for a while. MS is very good at support on some issues, but on the Qualcomm-MS border, like drivers, software developers aren’t always sure who is actually responsible, and no one seems to be.

It’s interesting that some of these complaints are things I’d heard about Apple and gaming over the years, so it was actually odd to hear Apple being lauded, from a game developer’s perspective, during the interview portion as an example of how to do a transition right. Developers apparently can’t even ship universal binaries for Windows?

This video also touches on my previous thread about enshittification, where developers and power users really are looking elsewhere, like Linux (and maybe macOS). Sadly I’m not sure how viable Linux will be for most users outside of SteamOS, but if this video is any indication, at least some fraction of people seem really unhappy with MS, and that doesn’t have anything to do with x86. While Apple’s superior battery life is a reason why some people switched, more appears to be rotten in the state of Redmond than Intel/AMD chips.

There are some aspects of the video I’m not as sold on (like I’d say its performance and perf/watt are a bit better than Wendell gives it credit for). I might also criticize it for being long-winded and repetitious, but considering my own writing I’d be a little hypocritical.


Developers apparently can’t even ship universal binaries for Windows?

Microsoft screwed this up a long time ago; Windows doesn't support multi-architecture binaries at all. This has been a pain point for a long time even on x86, since Microsoft has to support both 32- and 64-bit x86 applications.

Because it's Windows, there's an extra layer of confusion, even. 64-bit x86 Windows has both C:\Windows\system32 and C:\Windows\SysWOW64, but system32 is the one with 64-bit binaries, and SysWOW64 is the one with 32-bit binaries. You see, the "system32" path dates back to before there was such a thing as 64-bit x86, so they decided that it's always the primary directory for the native ISA of that computer's CPU. Compatibility components go in confusingly named extra directories like SysWOW64. Windows!

This video also touches on my previous thread about enshittification where developers and power users really are looking elsewhere like Linux (and maybe macOS). Sadly I’m not sure how viable Linux will be for users but if this video is any indication at least some fraction of people seem really unhappy with MS and that doesn’t have anything to do with x86. While Apple’s superior battery life is a reason why some people switched, more appears to be rotten in the state of Redmond than Intel/AMD chips.

A lot of what's behind the recent PC user interest in Linux is that Microsoft has been ratcheting Windows-as-adware up to an almost intolerable degree for home users.

KingOfPain

Site Champ

- Joined: Nov 10, 2021
- Posts: 754

Developers apparently can’t even ship universal binaries for Windows?

Mr_Roboto confirms what I suspected: while PE binaries have lots of architecture options, the format only supports one architecture per binary.

If you take a look at the structure of the Portable Executable in the Wikipedia entry, you'll see that "Machine" is an entry in the COFF header. IIRC, this should be the processor architecture. But there is only one COFF header...

Portable Executable - Wikipedia

But PE binaries to this day start with a small DOS program that tells you that you need Windows to run the program, just in case you happen to execute it under DOS.

Although I have to admit that I have no idea if that's also the case for ARM binaries...
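To make the "only one COFF header" point concrete, here is a minimal standard-library sketch of where that single Machine field lives. It assumes the layout from Microsoft's PE format specification: the file opens with the DOS "MZ" header (where the DOS stub lives), the 32-bit value at offset 0x3C points to the "PE\0\0" signature, and the COFF header's first field is the 16-bit Machine value. The helper names `pe_machine` and `describe_pe` are hypothetical, and the constant table is only a subset.

```python
import struct

# Subset of IMAGE_FILE_MACHINE_* values from the PE format specification.
MACHINE_NAMES = {
    0x014C: "x86 (I386)",
    0x8664: "x64 (AMD64)",
    0x01C4: "ARMv7 Thumb-2 (ARMNT)",
    0xAA64: "ARM64",
}


def pe_machine(data: bytes) -> int:
    """Return the COFF header's Machine field from the bytes of a PE image."""
    # Every PE file begins with the legacy DOS "MZ" header and DOS stub.
    if data[:2] != b"MZ":
        raise ValueError("missing DOS 'MZ' header")
    # e_lfanew at offset 0x3C: little-endian file offset of the PE signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    # The COFF file header follows the 4-byte signature; Machine is its first
    # field (16-bit little-endian). There is exactly one such header per file.
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return machine


def describe_pe(path: str) -> str:
    with open(path, "rb") as f:
        machine = pe_machine(f.read())
    return MACHINE_NAMES.get(machine, f"unknown (0x{machine:04X})")
```

Calling `describe_pe` on any .exe or .dll returns one architecture name, because the format has room for only one Machine value.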

So IMO figuring out how performant an architecture in MT scenarios needs some sort of normalization to rationalize the results or you can wind up with absurdities like comparing the efficiency of down clocked threadrippers to base M processors.

Case in point:

AMD has traditionally shied away from direct performance comparisons with Apple’s M series processors. However, Asus presented its new AMD-powered Zenbook S 16 at the event and shared some of its own benchmarks to highlight the Ryzen AI 9 HX 370’s performance against a competing Apple MacBook Air 15 with an M3 processor. Asus provided sparse test config info in the slide, so we’ll have to take these benchmarks with more than the usual amount of salt.

Asus claims substantial leads over the MacBook Air 15, with wins ranging from a 20% advantage in the Geekbench OpenCL CPU score benchmark to a whopping 118% lead in the UL Procyon benchmark. Other notable wins include a 60% advantage in Cinebench (certainly the multi-threaded benchmark) and a 20% lead in the Geekbench CPU score.

With respect to the CPU benchmarks, the Ryzen AI 9 HX 370 is a 12-core (4 Zen 5 + 8 Zen 5c), 24-thread processor being compared to a 4P/4E 8-core, 8-thread processor. It’s also a “default” 28W TDP processor which can be set as high as 54W.

So showing off that they beat the M3 in multithreaded tests … I mean … yeah?

The iGPU, the 890M, is very nice, though again way outside the weight class of the MacBook - with still decent power consumption, I might add. I’m looking forward to AMD’s entrance into the even larger APUs later - as well as hopefully Nvidia’s.

Overall these look good, I just wish Asus marketing was as smart as AMD’s and shied away from direct comparisons that aren’t really in its favor. Then again, it probably works. So maybe it’s “smart” after all.


No info on battery life. The Tom’s article doesn’t mention it and the Asus website says “Zenbook S 16 has the day-long stamina you need, and more.” Whatever that means.

Case in point:

With respect to the CPU benchmarks, the Ryzen AI 9 HX 370 is a 4/8c 12-core, 24 thread processor being compared to a 4P/4E 8-core, 8-thread processor. It’s also a “default” 28W TDP processor which can be set as high as 54W.

So showing off that they beat the M3 in multithreaded tests … I mean … yeah?

Even if performance is comparable at around 20W … only okay? (The M3 is also on N3 vs N4P, which isn’t as big a difference in performance and power as one might’ve hoped, but still worth noting.)


Nycturne

Elite Member

- Joined: Nov 12, 2021
- Posts: 1,743

Microsoft screwed this up a long time ago; Windows doesn't support multi-architecture binaries at all. This has been a pain point for a long time even on x86, since Microsoft has to support both 32- and 64-bit x86 applications.

In fairness, only a handful of platforms have ever really used fat binaries. Apple's the only one I'm aware of that uses them consistently (68K/PPC, PPC/Intel, 32/64-bit, Intel/ARM).

Someone tried to introduce FatELF and had some fun with that, but the end result is that it never took off and multi-arch Linux installs look surprisingly similar to Windows multi-arch installs. Only with better folder names.

Ah, but the OEM you are trying to sell your chip to might, if you double the cost of producing the chip: either it becomes lower profit for you or the OEM, or lower volume because the end user doesn't want to pay that much, even if it means better battery life and quieter fans.

When talking about CPU cores on modern dies, doubling the die area of a core isn't going to double the die area of the chip. With all the integration going on with Intel, AMD, Apple, etc., there's a lot more going on these days. The CPU cores and cache on a base M3 are what, 1/6th of the die (rough estimate from annotated die shots)? Doubling that is around 16% more die area. But we're both kinda exaggerating the point here. The result is that a larger die area will increase costs, but that's all part of the balancing act.
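The die-area arithmetic above, as a tiny sketch. It assumes, as the post's rough estimate does, that CPU cores plus cache are about 1/6 of a base M3 die and that nothing else on the die changes. The function name is made up for illustration.

```python
# Sketch: total die growth when only the CPU cluster is scaled.
# Assumption (the post's rough estimate, not a measurement): CPU cores + cache
# are ~1/6 of a base M3 die; GPU, NPU, media, I/O, etc. stay the same size.

def relative_die_area(cpu_fraction: float, cpu_scale: float) -> float:
    """New die area relative to the original when only the CPU cluster's
    area is multiplied by cpu_scale."""
    return (1.0 - cpu_fraction) + cpu_fraction * cpu_scale


growth = relative_die_area(1 / 6, 2.0) - 1.0
print(f"doubling the CPU cluster grows the die by ~{growth:.1%}")
```

That works out to about 16.7%, i.e. the "around 16%" above, rather than the 100% you would get by doubling the whole die.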

That's multithreaded efficiency, and yes, they are much, much closer there. Sorry for being unclear: I was referring to ST efficiency when I said Oryon has >2x the efficiency of AMD. You were saying that "The 7745HX has about 12% more ST than the 7840HS according to Geekbench," which might match the Snapdragon in terms of performance, though the processor here scores only a few percent higher than the 8845HS/7840HS recorded by Notebookcheck and is still slower than the (higher-binned) Snapdragon. As you said, it really depends on how the OEM has set its power and thermals, and even single-threaded is a pain. I mean, if you check the chart below, the X1P-64-100 (108 pts) got massively higher efficiency than the 80-100 (123 pts) and the 78-100 (108 pts), despite being a lower-binned processor and despite the 64 and 78 scoring the same at the same clock speed!

That's more interesting, but they are measuring system draw, which itself is fraught with issues because you are measuring more than just the CPU cores. Is this result because of boost clocks? Because of differences in the graphics feeding the external display? Do Ryzen and Intel have a high base load even when the cores are asleep? That last one is something I have seen before. My i7 Mac mini can get under 10W when idle, yet I had a Ryzen 5600 desktop that drew 30W just sitting at the desktop doing nothing. Because these figures are using system measurements, it's harder to make claims about the cores themselves. It's certainly a statement that you can get more battery life from a Qualcomm system in this specific scenario, though, and that Apple systems are consistently good across the board.

BTW, how do you resize your picture? When I look on the phone mine is fine, but on the computer screen mine is absolutely massive and yours looks normal. I just took a screenshot and pasted it in. Edit: actually yours looks small on the phone, huh. Either way, how did you resize?

Using the WYSIWYG editor, you can select and set a fixed size on images.

In fairness, only a handful of platforms have ever really used FAT binaries. Apple's the only one I'm aware of that uses it consistently (68K/PPC, PPC/Intel, 32/64Bit, Intel/ARM).

True. And even with Apple, only the latter three are a clean, generalized approach. 68K/PPC fat binaries for classic MacOS were slightly hack-ish. They kept 68K executable code in the same format in resource forks, where it always had been. PPC was added in by using the data fork (normally unused in classic MacOS 68K apps) to store a PEF format executable.

By contrast, the 'universal' scheme in Mac OS X onwards is just NeXT's Mach-O binary format. Mach-O headers can describe an arbitrary number of code segments and architectures stored in one file - you can make quad (or more) architecture binaries if you like.
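For contrast with the single Machine field in PE, here is a minimal sketch of how a macOS universal file lists several architectures. It assumes the layout from Apple's `<mach-o/fat.h>`: a big-endian `fat_header` (magic 0xCAFEBABE, `nfat_arch`) followed by 20-byte `fat_arch` records (`cputype`, `cpusubtype`, `offset`, `size`, `align`). Each record points at a complete Mach-O image for one architecture. The function name `fat_slices` is hypothetical, and the 64-bit fat variant (magic 0xCAFEBABF) is not handled.

```python
import struct

FAT_MAGIC = 0xCAFEBABE       # 32-bit fat header magic, stored big-endian
CPU_ARCH_ABI64 = 0x01000000  # flag OR'd into cputype for 64-bit ABIs

# cputype values from <mach/machine.h> (subset only)
CPU_NAMES = {
    7: "i386",
    7 | CPU_ARCH_ABI64: "x86_64",
    12: "arm",
    12 | CPU_ARCH_ABI64: "arm64",
    18: "ppc",
    18 | CPU_ARCH_ABI64: "ppc64",
}


def fat_slices(data: bytes) -> list[tuple[str, int, int]]:
    """Return (architecture, file offset, size) for each slice of a fat binary."""
    magic, nfat_arch = struct.unpack_from(">II", data, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a 32-bit fat (universal) binary")
    slices = []
    for i in range(nfat_arch):
        # Each fat_arch record is 5 x 32-bit big-endian fields (20 bytes),
        # starting right after the 8-byte fat_header.
        cputype, _subtype, offset, size, _align = struct.unpack_from(
            ">iiIII", data, 8 + 20 * i)
        slices.append((CPU_NAMES.get(cputype, f"cputype {cputype}"), offset, size))
    return slices
```

On macOS, `lipo -info` reports the same information. Java class files also start with 0xCAFEBABE, so a real tool would sanity-check `nfat_arch` as well.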

Someone tried to introduce FatELF and had some fun with that, but the end result is that it never took off and multi-arch Linux installs look surprisingly similar to Windows multi-arch installs. Only with better folder names.

I remember that, and I also remember much of the resistance to the idea being a knee-jerk "well, why should we do that when everything's compiled for your CPU by your distro?" (Sigh.)

Nycturne


True. And even with Apple, only the latter three are a clean, generalized approach. 68K/PPC fat binaries for classic MacOS were slightly hack-ish. They kept 68K executable code in the same format in resource forks, where it always had been. PPC was added in by using the data fork (normally unused in classic MacOS 68K apps) to store a PEF format executable.

It's been a hot minute, but keep in mind that a lot changed between the 68000 in the early Macs and the 601, and it's probably not as hacky as you remember. When Virtual Memory was enabled on PPC, one of the benefits was being able to fault in code pages, which wasn't supported on 68k (despite virtual memory being a thing there, interestingly). I'd wager that a good chunk of the difference was in service of newer memory management techniques and of simplifying what the kernel had to do when dealing with page faults for code pages.

That said, there are two versions of 68k code: "classic" 68k and CFM-68k. PPC and CFM-68k both used the data fork. You could easily enough build a fat binary that put both your PPC and 68k code in the PEF container stored in the data fork. Doing it this way meant that your software functionally wasn't useful on 68k machines running System 7.1 and earlier. System 7.1.2, which first introduced the CFM required for this type of fat binary, only shipped on a handful of 68k machines. So there was a good reason for developers to let 68k machines pull the CODE resource instead, to support System 7 and 7.1.

But really, PEF was the full evolution of how code fragments were stored on Classic MacOS, and supported 68k. Much like how Mach-O replaced PEF. And yet, because there's all the legacy stuff you have to support, the old executable formats were still supported for quite a while.

EDIT: Interestingly, like Mach-O, PEF does support arbitrary numbers of code segments in a single file. But it only ever supported 32-bit PPC and 68k as architectures.

By contrast, the 'universal' scheme in Mac OS X onwards is just NeXT's Mach-O binary format. Mach-O headers can describe an arbitrary number of code segments and architectures stored in one file - you can make quad (or more) architecture binaries if you like.

Which in fairness was needed because NeXT supported a couple architectures itself. And yeah, well aware just how heavily MacOS X took from NeXTStep to deliver a modern OS.


But really, PEF was the full evolution of how code fragments were stored on Classic MacOS, and supported 68k. Much like how Mach-O replaced PEF. And yet, because there's all the legacy stuff you have to support, the old executable formats were still supported for quite a while.

EDIT: Interestingly, like Mach-O, PEF does support arbitrary numbers of code segments in a single file. But it only ever supported 32-bit PPC and 68k as architectures.

I had forgotten these details about the evolution of Classic's PPC support, thanks! Now that you've nudged my brain cells, I do remember them trying to modernize that and other things. Stuff like the nanokernel... IIRC we never got to take full advantage of its features before the end of the road for Classic.

Classic MacOS had so much technical debt. Cramming a GUI as sophisticated as 1984 Macintosh into 64K ROM, 128K RAM, and a 400K boot floppy created lots of design compromises that were unfortunate in the long term. (So much so that sometimes "long term" meant maybe two or three years in the future.)

Which in fairness was needed because NeXT supported a couple architectures itself. And yeah, well aware just how heavily MacOS X took from NeXTStep to deliver a modern OS.

NeXT even had their own 68K to PowerPC transition (hardware and software) mostly ready to go, but it didn't make it out the door before NeXT's dismal revenues forced them to abandon making hardware.

I do wonder how different things would be today if Apple had good and effective leadership in the early 1990s. If they'd directed the resources wasted on things like Taligent and OpenDoc towards less glamorous incremental improvement of Mac OS, would they have been successful enough to not even need to go looking for a different OS by 1996?

When talking about CPU cores on modern dies, double die area on a core isn't going to lead to double the die area of the chip. With all the integration going on with Intel, AMD, Apple, etc, there's a lot more going on these days. The CPU cores and cache on a base M3 is what, 1/6th of the die (rough estimate from annotated die shots)? Doubling that is around 16% more die area. But this is all us kinda exaggerating the point, though. The result is that a larger die area will increase costs, but that's all part of the balancing act here.

Yeah, I was just exaggerating to make the point. I agree that it’s the total SoC size that matters, not just the CPU, when it comes to cost, and I should add that the Qualcomm chips are supposedly indeed cheaper. However, the other part of the balancing act is that CPUs are expected to take on a number of different workloads with different levels of multithreading, and which workloads a chip should focus on depends heavily on the device class. This stands in stark contrast with GPUs, where cost and power limits may cap core counts, but for the workloads themselves, more cores will always simply be better. For the CPU, targeting one set of workloads, like heavily multithreaded ones, can be counterproductive for single-threaded and lightly multithreaded tasks.

As John Poole said in his posts about why he changed GB6's multithreaded scoring system, by relying solely on infinitely scalable multithreaded benchmarks, users were getting suckered into buying systems that not only had power they didn't need but, even worse, were actually slower at some of their most common tasks than "lower-tier" systems they could've bought instead. And that's on HEDT systems, never mind processors destined for "thin and lights"! Even if those negative consequences don't come into play, at the very least, users of such devices will either not benefit from high multithreaded capabilities or will find heavy throttling and low battery life when they do try to make use of them. Thus, there is a penalty to be paid for not developing a chip targeted at the right device. This isn't to say that a chip shouldn't be the best it can be, but what is best can be heavily context dependent. This is why I was no more impressed with Qualcomm/MS's marketing claims about multithreaded workloads than I am with ASUS's for the upcoming AMD chips (AMD did not make those claims themselves).
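One standard way to illustrate the argument that more cores help embarrassingly parallel work far more than lightly threaded work is Amdahl's law. This is a textbook model swapped in here for illustration; it is not how the post or Geekbench computes anything, it assumes identical cores, and the parallel fractions `p` below are hypothetical.

```python
# Amdahl's law: speedup on n identical cores for a task whose parallelizable
# fraction is p. Textbook model used only for illustration; the fractions
# below are hypothetical, not measured from any benchmark.

def amdahl_speedup(p: float, n: int) -> float:
    """Speedup on n identical cores when a fraction p of the work parallelizes."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("need 0 <= p <= 1 and n >= 1")
    return 1.0 / ((1.0 - p) + p / n)


# From lightly threaded (p=0.5) to embarrassingly parallel (p=0.99).
for p in (0.5, 0.9, 0.99):
    row = ", ".join(f"{n} cores {amdahl_speedup(p, n):.2f}x" for n in (8, 12, 24))
    print(f"p={p}: {row}")
```

With p = 0.5, tripling the core count from 8 to 24 only moves the speedup from about 1.78x to 1.92x. A faster individual core shortens the serial half as well, which is the trade-off the post describes for thin-and-light chips.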

That's more interesting, but they are measuring system draw which itself is fraught with issues because you are measuring more than just the CPU cores. Is this result because boost clocks? Because of differences in the graphics feeding the external display? Does Ryzen and Intel have a high base load even when the cores are asleep? That last one is something I have seen before. My i7 Mac mini can get under 10W when idle, yet I had a Ryzen 5600 desktop that drew 30W just sitting at the desktop doing nothing. Because these figures are using system measurements, it's harder to make claims about the cores themselves. It's certainly a statement that you can get more battery from a Qualcomm system in this specific scenario though, and that Apple systems are consistently good across the board.

This isn't much of an issue here, for multiple reasons. 1) The chosen Ryzen laptop has a 15W iGPU and gets the same idle wattage as the MacBook Pro/Qualcomm devices (all are about 7-9W on average). 2) Notebookcheck does pretty good quality control to ensure as even a test setup as possible between devices. 3) They subtract idle from load to get rid of differences at idle, so the remainder is load power.
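The idle subtraction described in point 3 amounts to computing efficiency on net load power. The sketch below uses made-up numbers, not Notebookcheck data, and the function name is hypothetical. It shows two systems with the same score and the same net draw but different idle floors coming out identical once idle is subtracted.

```python
# Sketch of an idle-subtracted efficiency metric: points per watt computed on
# (load power - idle power). All numbers are hypothetical placeholders.

def load_efficiency(score: float, load_w: float, idle_w: float) -> float:
    net_w = load_w - idle_w
    if net_w <= 0:
        raise ValueError("load power must exceed idle power")
    return score / net_w


# Same score, same net draw (32 W), different idle floors (8 W vs 20 W).
print(load_efficiency(score=1000, load_w=40.0, idle_w=8.0))
print(load_efficiency(score=1000, load_w=52.0, idle_w=20.0))
```

Both lines print 31.25. This removes idle differences only; load-dependent losses such as power-delivery inefficiency still end up in the denominator.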

Now, I'll admit this doesn't fully eliminate every factor other than the CPU cores. Heck, that's the basis for my previous claims about the discrepancy between the Qualcomm Asus and Qualcomm MS devices, but that was on the order of 10-20% power delivery inefficiency under load, nothing close to the 2-3x difference we see in the ST bubble chart I made from Notebookcheck's data. While I'd have to dig up a reference, I'm pretty sure this is recapitulated in software measurements from HWInfo and powermetrics. HWInfo is tough to come by for the Qualcomm devices, unfortunately, as I think it was only recently enabled and many reviews don't have it, especially for ST tasks. And naturally software measurements have their own limitations. It’s why I’d prefer to have both whenever possible.

That said, these relative power numbers just make sense. All the devices listed are on TSMC N4 or N5P, which are basically identical nodes, but the AMD device is pushing its cores as high as 5.1GHz compared to 3.4-4.0GHz. Admittedly, the AMD core won't spend its entire 10-minute CB ST run at 5.1GHz, but it is going to be running at much, much higher clock speeds for longer on the same node. If the cores are indeed bigger, then in terms of CfV^2 they're getting hit on every single variable, including the squared voltage. Now, because they're running their cores so far out on the curve, it is also true that they could back off clocks and get, say, 10% less performance for half the power (I'm making the numbers up), but they're already 6-20% slower in CB R24. For obvious reasons AMD doesn't want to lose an additional X% of performance, even if its efficiency would go up dramatically. Heck, that's a major reason why AMD catches up in MT tests: Apple and Qualcomm of course back off clocks too, but AMD backs off much more. Throw in a 20% boost from SMT and suddenly AMD's efficiency looks a lot better, even if it can't match Qualcomm/ARM for performance (and when it tries to, its efficiency drops even faster).
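To make the CfV^2 point concrete, here is a sketch of how dynamic power P = C·f·V² scales relative to a baseline core. The clock ratio uses the 5.1GHz vs 4.0GHz figures above; the capacitance and voltage ratios are made-up assumptions, since no real voltage/frequency curves are given here:

```python
def relative_dynamic_power(c_ratio: float, f_ratio: float, v_ratio: float) -> float:
    """Dynamic power relative to a baseline core, using P = C * f * V^2.

    Each argument is this core's value divided by the baseline's value.
    Static (leakage) power is ignored.
    """
    return c_ratio * f_ratio * v_ratio ** 2


clock_ratio = 5.1 / 4.0          # boost clocks quoted above
assumed_voltage_ratio = 1.2      # hypothetical: higher clocks need more voltage
assumed_capacitance_ratio = 1.1  # hypothetical: a somewhat bigger core

same_core = relative_dynamic_power(1.0, clock_ratio, assumed_voltage_ratio)
bigger_core = relative_dynamic_power(assumed_capacitance_ratio, clock_ratio, assumed_voltage_ratio)
print(f"Same-size core: {same_core:.2f}x power for {clock_ratio:.3f}x clock")
print(f"Bigger core:    {bigger_core:.2f}x power")
```

Because voltage enters squared, backing off clocks (and with them voltage) cuts power faster than it cuts performance, which is the trade-off described above.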

A qualifying statement that I made in my bubble chart post: it should also be noted that in GB 6.2, AMD does much, much better relative to its ARM rivals, averaged across the GB subtests, than it does in CB R24. AMD is almost certainly still drawing a hell of a lot more power than the ARM-based cores to attain those scores (probably even more so than in CB R24, since the bursty nature of the GB workload means proportionally more time spent at max boost), but at least the performance deficit is gone: AMD actually beats some Qualcomm models and nearly matches the M2 Pro, though the higher-end Qualcomm chips beat both in this test. As a side note, in contrast to CB R23, Apple does incredibly well in CB R24, so yeah, Maxon fixed whatever that problem was, at least for Apple chips. So 2-3 fold differences in power efficiency may be on the high end for benchmarks, but there is still a very substantial gap in ST performance and efficiency between the best ARM (well, M2, but still) and the current best x86 cores.

Using the WYSIWYG editor, you can select and set a fixed size on images.

Ah, I thought it was a forum setting. I did notice we can create thumbnails on the forums, but after testing previews I'm not sure I like that any better as a readability measure, to be honest. I guess I'll play around with the editor to choose a picture size that looks good on both the mobile and desktop versions of this website.

No info on battery life. The Tom’s article doesn’t mention it and the Asus website says “Zenbook S 16 has the day-long stamina you need, and more." Whatever that means.

Yeah, no hard info on battery life, but as I linked to in my previous post, even AMD's website says it's a 28W TDP device that can go as high as 54W. No doubt they will have a "whisper" mode, but the HX 370 is fundamentally a different device class than the base M models. It might go into "thin and lights", but I doubt fanless models, if there are any, will be able to exercise all that power ... The GPU at 15W is very impressive even accounting for AMD's use of "double FLOPs", which gives it 11.88 TFLOPs. (Basically, under certain circumstances with ILP, AMD can do two FP32 calculations at the same time. However, that's not always possible, and how often it happens in typical GPU workloads is up for debate. But even halving the figure to account for that, the GPU is still nearly 6 TFLOPs base!) It's basically the equivalent of an Mx Pro, in both GPU and CPU.
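For reference, the 11.88 TFLOPs figure can be reproduced with the usual peak-FLOPs formula. The shader count (16 CUs × 64 = 1024) and the 2.9 GHz boost clock are my assumptions for the HX 370's Radeon 890M iGPU, not numbers from the posts above; each fused multiply-add counts as 2 FLOPs, and dual issue doubles that again:

```python
def peak_fp32_tflops(shaders: int, clock_ghz: float, dual_issue: bool) -> float:
    """Theoretical peak FP32 throughput in TFLOPs.

    Each shader retires one fused multiply-add (2 FLOPs) per clock;
    dual issue, when two independent ops can be paired, doubles that.
    """
    flops_per_clock = 2 * (2 if dual_issue else 1)
    return shaders * flops_per_clock * clock_ghz / 1000.0


# Assumed Radeon 890M configuration: 16 CUs x 64 shaders at up to 2.9 GHz.
SHADERS = 16 * 64
BOOST_GHZ = 2.9

print(f"With dual issue:    {peak_fp32_tflops(SHADERS, BOOST_GHZ, True):.2f} TFLOPs")
print(f"Without dual issue: {peak_fp32_tflops(SHADERS, BOOST_GHZ, False):.2f} TFLOPs")
```

The "nearly 6 TFLOPs base" in the post is simply the second line: the same formula without the dual-issue factor.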

Nycturne

Elite Member

- Joined

- Nov 12, 2021

- Posts

- 1,743

Yeah, this was around the time I was starting to try to get my head wrapped around the Inside Macintosh books. I had a physical copy of the PPC Architecture book among a smattering of others found at swap meets and the like. I never really played much with Multiprocessing Services, which promised partial preemptive multitasking, although I think I remember reading up on it.

I had forgotten these details about the evolution of Classic's PPC support, thanks! Now that you've nudged my brain cells I do remember them trying to modernize that and other things. Stuff like the nanokernel... iirc we never got to take full advantage of its features before the end of the road for Classic.

Interestingly, it looks like someone has reverse engineered the nanokernel used for the early PPC machines and put the annotated assembler files up on github.

Yuuup. And then you had all sorts of stuff just sitting there for you to hook into with an INIT or two. At one point I did hook the heap manager (why is this even possible?) with a custom INIT while I was in middle school. It mostly just crashed the Finder, but I was able to prove to myself that it was possible to hook the heap manager and write a custom implementation if you knew what you were doing. At least at the time, I didn’t.

Classic MacOS had so much technical debt. Cramming a GUI as sophisticated as 1984 Macintosh into 64K ROM, 128K RAM, and a 400K boot floppy created lots of design compromises that were unfortunate in the long term. (So much so that sometimes "long term" meant maybe two or three years in the future.)

I forget what heap allocation behavior I even wanted to try. I think it had something to do with trying to make it so that you didn’t need to call MoreMasters().

Hard to say. But I think such a beast would look a lot more like say, Windows 98 or XP, than MacOS X.

I do wonder how different things would be today if Apple had good and effective leadership in the early 1990s. If they'd directed the resources wasted on things like Taligent and OpenDoc towards less glamorous incremental improvement of Mac OS, would they have been successful enough to not even need to go looking for a different OS by 1996?

With no memory protection, if someone outside Apple wanted to hook anything badly enough, they absolutely could. So maybe Apple was just going with the flow, there!

For sure. I don't think that alt-history version of Apple would have been doing the right thing for the long term, for what it's worth.

Come to think of it, Copland was sort of that beast, but iirc extremely poor execution (terrible performance, lots of bugs) doomed it.

Nycturne

With no memory protection, if someone outside Apple wanted to hook anything badly enough, they absolutely could. So maybe Apple was just going with the flow, there!

It did mean we got tools like RAM Doubler which took advantage of the MMU in ways that Apple wasn’t, so it wasn’t all bad. But yeah, not an era I want to go back to, except via my vintage hardware.

For sure. I don't think that alt-history version of Apple would have been doing the right thing for the long term, for what it's worth.

Come to think of it, Copland was sort of that beast, but iirc extremely poor execution (terrible performance, lots of bugs) doomed it.

That’s pretty much what I’m thinking of, yes. But had Blue/Pink played out the way it was intended, it wouldn’t have languished so long to become Copland in the first place. But had Copland landed in good shape, the argument for a “fresh start” would have been quite hard to make I think. At least until a project like the iPhone.

I should make it clear that in the qualifying statement about GB 6.2 from my earlier post, I'm comparing *single-threaded* GB 6.2 and CB R24 results; it gets more complicated for MT.

Long video, mostly on how terrible the Windows-Snapdragon experience has been for developers, how bad Windows has gotten in general, and MS’s inability to execute, especially on ARM, for the past decade. Apparently engineers at MS and Qualcomm are working crunch time to try to solve these issues, but some things are just impossible to fix quickly: the 2023 DevKit is largely unhelpful for the porting process, and the 2024 DevKit, which is more representative of the hardware and drivers, isn’t out and won’t be for a while. MS is very good on support for some issues, but at the Qualcomm-MS border, like drivers, software developers aren’t always sure who is actually responsible, and no one seems to be.

It’s interesting that some of these complaints are things I’d heard about Apple and gaming over the years, so it was actually odd to hear Apple lauded, from a game developer’s perspective during the interview portion, as an example of how to do a transition right. Developers apparently can’t even ship universal binaries for Windows?

This video also touches on my previous thread about enshittification, where developers and power users really are looking elsewhere, like Linux (and maybe macOS). Sadly, I’m not sure how viable Linux will be for most users outside of SteamOS, but if this video is any indication, at least some fraction of people seem really unhappy with MS, and that doesn’t have anything to do with x86. While Apple’s superior battery life is a reason why some people switched, more appears to be rotten in the state of Redmond than Intel/AMD chips.

There are some aspects of the video I’m not as sold on (like I’d say the chip's performance and perf/watt are a bit better than Wendell gives them credit for). I might also criticize it for being long-winded and repetitive, but considering my own writing I’d be a little hypocritical. Overall, though, it makes some interesting points and again shows that building these devices takes more than just CPU architecture: Qualcomm needs serious improvement, and so does MS for Windows on ARM despite 10 years of trying.

Speaking of Windows engineering failures, here’s a good one:

“Something has gone seriously wrong,” dual-boot systems warn after Microsoft update

Microsoft said its update wouldn't install on Linux devices. It did anyway.

arstechnica.com

In an effort to plug terrible security holes in their “Secure Boot” boot loader, MS broke a bunch of dual-boot systems.

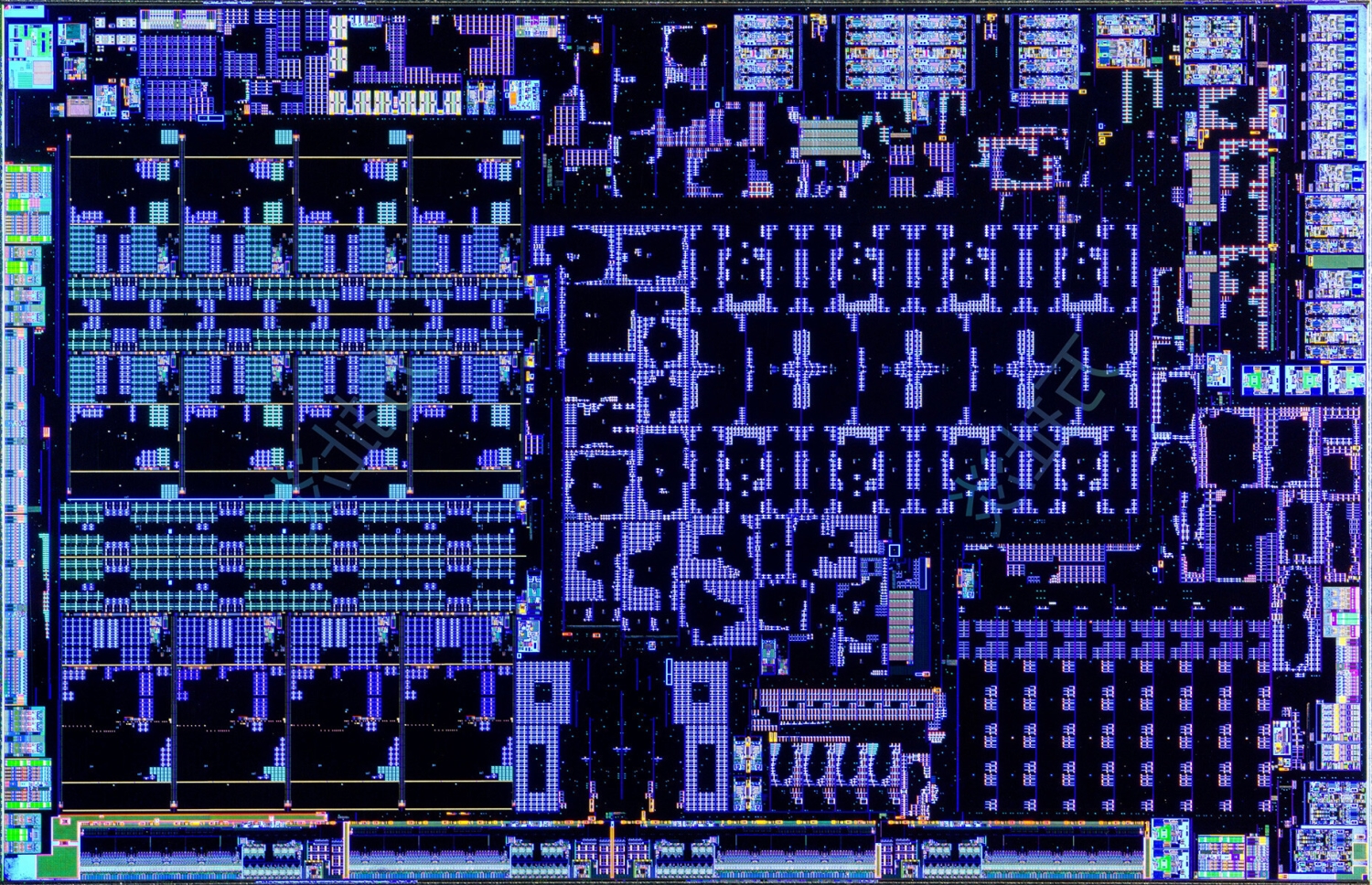

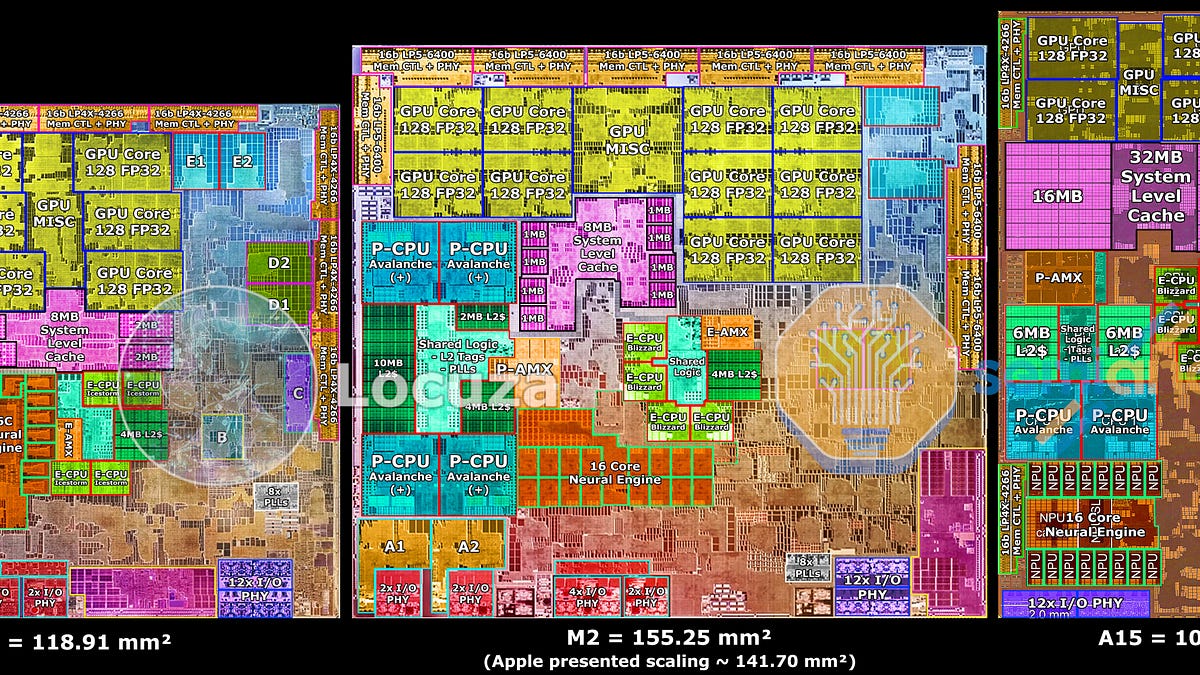

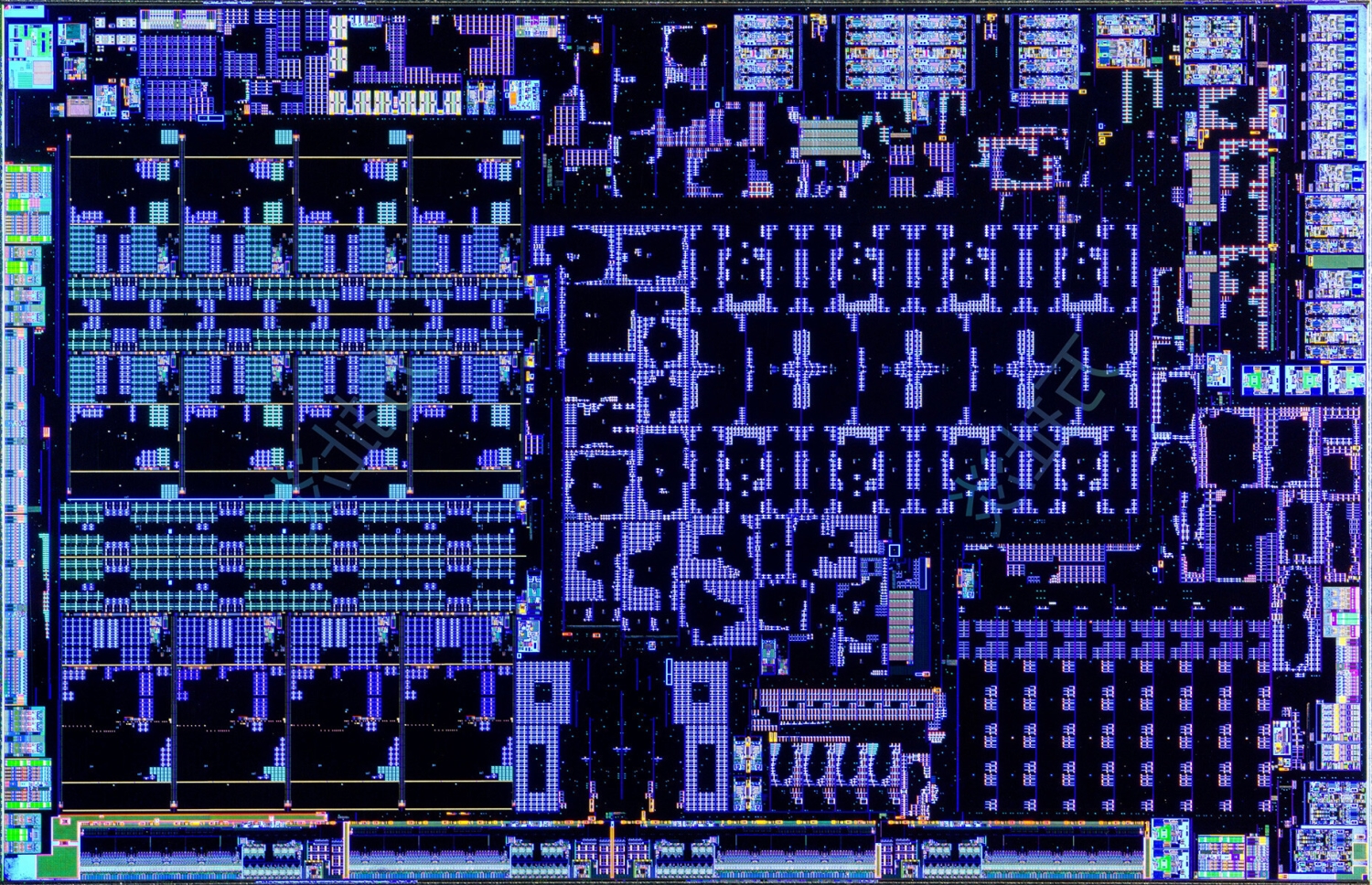

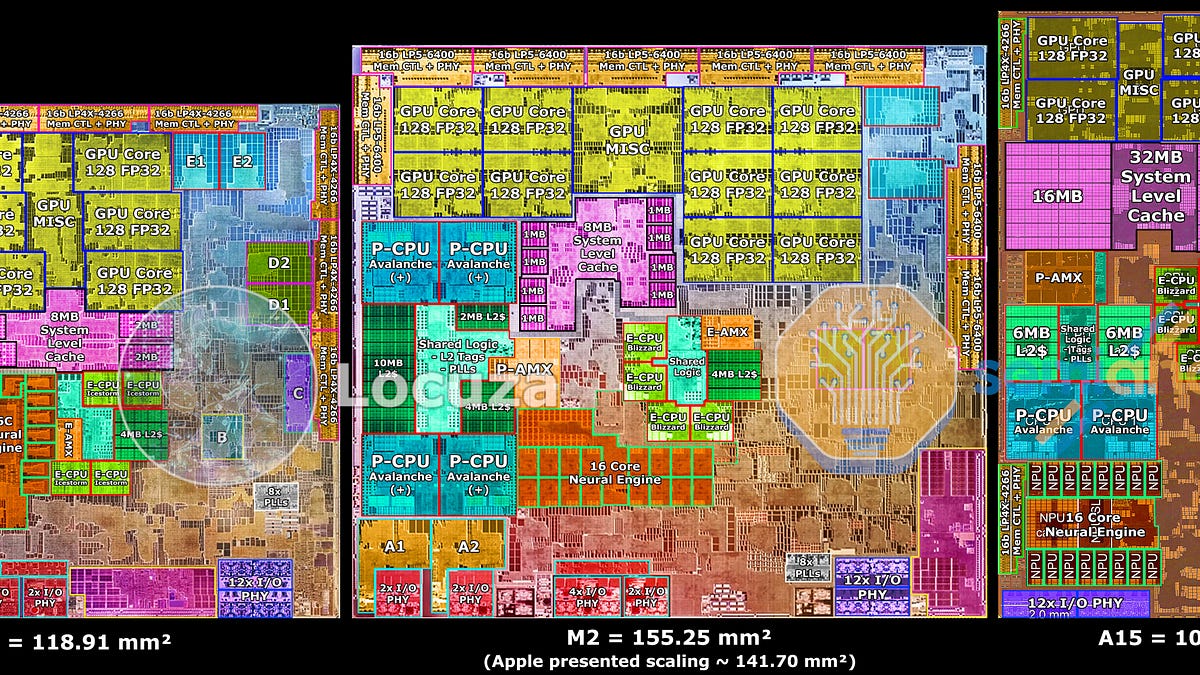

Comparing the die shots of the Snapdragon Elite with the M4 and the AMD Strix Point:

Qualcomm Snapdragon X die shot reveals massive CPU cores with huge caches (www.tomshardware.com)

GPU is quite modest though.

Check out this beautiful die shot of AMD's new Ryzen AI 300 'Strix Point' APU (www.tweaktown.com). AMD's new Ryzen AI 300 series 'Strix Point' APU die shot revealed: TSMC 4nm process node, Zen 5 CPU, RDNA 3.5 GPU, XDNA2 NPU in great detail.

One thing I didn't realize: I thought the AMD chip was on regular N4, which I believe is what the Qualcomm Snapdragon SoC is fabbed on, but in fact the AMD chip is fabbed on the slightly newer N4P, which has 6% more performance than N4 but should have the same transistor density, which is what we care about here.

However, here are some interesting numbers (all numbers for the AMD chip, and the SLC for M4, are my estimates based on pixel-area ratios relative to total die area):

| All sizes in mm² | CPU core | All CPUs + L2 | L3 | CPU + L2 + L3 | Die |
|---|---|---|---|---|---|
| Snapdragon Elite | 2.55 | 48.7 (36MB) | 5.09 (6MB) | 53.79 | 169.6 |
| AMD Strix Point | 3.18 Z5 / 1.97 Z5c | 42.6 (8MB) | 15.86 (16MB + 8MB) | 58.5 | 225.6 |
| Apple M4* | 3.00 P / 0.82 E | 27 (16MB + 4MB) | 5.86 (8MB?) | 32.86 | 165.9 |

*Unclear if the AMX (SME) coprocessor is being counted here; I don't think it is, so the M4 numbers might be off. Maybe someone else who knows how to read a die shot can find it and confirm. Also, it'd be great if someone could dig up an M2, or even better an M2 Pro, annotated die shot, as one manufactured on N5P would be the most apples-to-apples (pun intended) comparison point.
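The pixel-area estimate mentioned above boils down to scaling the known die area by the fraction of die-shot pixels a block covers. A sketch, assuming an undistorted, uniformly scaled photo; the pixel counts below are hypothetical, and only the 225.6 mm² Strix Point die area comes from the table:

```python
def estimate_block_area(die_area_mm2: float, block_px: float, die_px: float) -> float:
    """Estimate a block's area from a die shot by pixel-area ratio.

    Assumes the fraction of pixels a block covers in the photo equals
    its fraction of the physical die area.
    """
    if not 0 < block_px <= die_px:
        raise ValueError("block pixel area must be positive and no larger than the die's")
    return die_area_mm2 * block_px / die_px


# Hypothetical pixel measurements for illustration only, not taken from the die shot.
DIE_PX = 1_000_000
BLOCK_PX = 70_000
print(f"Estimated block area: {estimate_block_area(225.6, BLOCK_PX, DIE_PX):.2f} mm^2")
```

Any perspective distortion or cropping in the die shot feeds straight into the estimate, which is one reason these numbers are only approximate.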

Right off the bat, this is why I don't consider comparisons of the multicore performance of Strix Point or the Elite to the base M-series "fair". We already knew this just from core count and structure alone, but we can see that the Elite and Strix Point CPU areas are massive compared to the Apple M4's. Another thing that stands out is that the Apple/Qualcomm SLC ("L3") seems fundamentally different from the AMD Strix Point L3, which appears to function much more like the L2 of the Elite/M4 (i.e. the L3 of the AMD chip is per CPU cluster rather than a last-level cache for the SoC). Thus I would actually consider the appropriate comparison of sizes to be as follows:

| All sizes in mm² | "CPU size" |
|---|---|
| Snapdragon Elite | 48.7 |
| AMD Strix Point | 58.5 |
| Apple M4* | 27 |

Also not broken out above are the L1 caches for the various CPUs. Here are their per-core sizes in KiB (I only have data for M3; unclear if M4's are the same or bigger):

| CPU | L1 cache per core (instruction + data) |
|---|---|
| Snapdragon Elite | 192 + 96 KiB |
| AMD Strix Point | 32 + 48 KiB |
| Apple M3 | 192 + 128 KiB (P) / 128 + 64 KiB (E) |

In other words, a much larger portion of the Elite and Apple ARM cores is L1 cache compared to Strix Point. Cache barely shrinks with newer nodes, so this points to the ARM cores needing even less area for logic than the figures above suggest, where it might appear that the Zen 5 core, and especially the Zen 5c core, are beginning to match the Apple M4's cores in size despite being on a less dense node. That said, the M4 is clearly a beefy ARM CPU; no doubt its extra, extra wide architecture is playing a role here.
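Summing instruction and data L1 per core from the table above makes the gap easier to see (the Apple figures are the M3's, as noted; the dictionary keys are just labels):

```python
# Per-core L1 sizes in KiB as (instruction, data), taken from the table above.
L1_KIB = {
    "Snapdragon Elite (Oryon)": (192, 96),
    "AMD Strix Point (Zen 5)": (32, 48),
    "Apple M3 P-core": (192, 128),
    "Apple M3 E-core": (128, 64),
}

baseline = sum(L1_KIB["AMD Strix Point (Zen 5)"])
for core, (icache, dcache) in L1_KIB.items():
    total = icache + dcache
    print(f"{core}: {total} KiB total L1, {total / baseline:.1f}x Zen 5")
```

Even the M3 E-core carries more L1 per core than a full Zen 5 core.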

Differences in vector design likewise play a role in core size. I believe the Elite has 4x128b FP units, and I can't remember if the M4 has 4 or 6 such 128b NEON units. Strix Point cores are 256b-wide, but with features that allow them to "double-pump" AVX-512 instructions, making them larger and more complex than plain 256-bit AVX2 vector units. I believe there are 4 such vector units (unsure if the "c" cores have fewer).

Comparing the Elite and Strix Point, the Strix Point CPU is about 20% bigger (and its die is 33% bigger overall) despite slightly bigger L2 and L3 caches in the Elite. Smaller, and manufactured on a slightly older node, the Elite should be significantly cheaper than Strix Point, and the smaller CPU is part of the reason why. Finally, despite being 20% smaller, from what we can see in the latest analysis, the higher-end Elite chips (e.g. the Elite 80) should be on the same multicore performance/W curve as the HX 370. Single thread is a similar story but even more pronounced: the Oryon core is again roughly 20% smaller than the Zen 5 core but with much greater ST performance and efficiency. This represents an overall manufacturing advantage for ARM CPUs relative to x86.
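The percentages in that comparison follow directly from the area tables above; a quick check (the AMD figures are pixel-based estimates, so treat the results as approximate):

```python
# Areas in mm^2 from the tables above (AMD figures are estimates).
elite = {"cpu_complex": 48.7, "die": 169.6, "core": 2.55}
strix = {"cpu_complex": 58.5, "die": 225.6, "core": 3.18}  # core = full Zen 5, not Zen 5c


def pct_larger(a: float, b: float) -> float:
    """How much larger a is than b, as a percentage (negative means smaller)."""
    return (a / b - 1.0) * 100.0


print(f"Strix Point CPU complex vs Elite: {pct_larger(strix['cpu_complex'], elite['cpu_complex']):+.0f}%")
print(f"Strix Point die vs Elite die:     {pct_larger(strix['die'], elite['die']):+.0f}%")
print(f"Oryon core vs Zen 5 core:         {pct_larger(elite['core'], strix['core']):+.0f}%")
```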

Edit: thanks to Xiao Xi at the other place for tracking M2 figures down:

Qualcomm revealed X Elite's benchmark scores (forums.macrumors.com)

original source:

Apple M2 Die Shot and Architecture Analysis – Big Cost Increase And A15 Based IP (https://www.semianalysis.com/p/apple-m2-die-shot-and-architecture)

Based on this, the M2 Pro's P+E CPU complex would've been roughly the same size as the Snapdragon Elite's CPU, albeit with 4 smaller E-cores, 2 P-AMX units, 1 E-AMX unit, and the 8 P-cores being slightly bigger, and with a 6% density advantage for the Elite from being on N4 vs N5P, as I believe N5P has the same density as N5.

Another thing that has occurred to me looking at this chart comparing the Qualcomm Elite to the M2 Pro: I once estimated that the Qualcomm Elite was missing 20% of its multicore performance in CB R24 based on how 12 M2 P-cores should behave. However, here we see that for the same ST CB R24 performance, the Elite 80 is ... 20% less efficient than the M2 Pro's P-core. If that's true at lower clocks as well (i.e. in a multithreaded scenario), then that alone could explain the discrepancy. That's a big if, but it's plausible.

A revisualization of Notebookcheck's Cinebench R24 performance and efficiency data.

Details: These are results from only one benchmark, Cinebench, which has gone from being one of Apple's worst-performing benchmarks in R23 to one of its best in R24. As I am interested in getting as close as possible to the efficiency of the chip itself, the power measurements above subtract idle power, which Notebookcheck does not do when calculating the efficiency of the device. With the release of Lunar Lake, an N3B chip, I've added the M3, Apple's counterpart to Lunar Lake, and estimated the M3 Pro's efficiency based on its power usage in R23 and the base M3 CPU's power/performance in R23/R24 (Notebookcheck did not have R24 power data for the M3 Pro). I feel this may overestimate the M3 Pro's efficiency a little, but not by enough to matter given the gulf between it and every other chip. The M3 was in the Air; given that Cinebench is an endurance benchmark, its score and power usage will both likely be higher in the 14" MacBook Pro. I did also have the Snapdragon X1E-84-100 but removed it since it was clutter and didn't add much. The Core Ultra 7 258V is one of the upper-level Lunar Lake chips, but not the top bin; the 288V might improve efficiency/performance somewhat by having better silicon, but the effect will be small relative to the patterns we see.

So what do we see? First off, Lunar Lake has great single-core performance and efficiency ... for an x86 chip, helped perhaps by being on a slightly better node, N3B, than the M2 Pro (N5P), HX 370 (N4P), and Snapdragon (N4). Despite this advantage, the Snapdragons on N4 and the M2 Pro on N5P are still superior in ST performance and efficiency. The Core Ultra 9 288V might increase performance to match or slightly beat the Elite 84 (not pictured), but it would be at the cost of even more power. That said, the efficiency and performance improvements here are enough to make x86 potentially competitive with this first generation of Snapdragons, at least enough that, given Qualcomm's compatibility issues, Intel can claim wins over Qualcomm and begin to lessen its appeal.

However, this has come at the cost of MT performance. The prevailing narrative is that without SMT2/HT, Intel struggles to compete against AMD and Qualcomm. And to a certain extent that's true, but with only 4 P-cores and 4 E-cores in a design optimized for low-power settings, it was never going to compete anyway. The review mentions it gets great battery life and decent performance on "everyday" tasks, in stark contrast to the full-tilt performance represented by Cinebench R24, and that, after all, is what it's for: thin and lights. The closest non-Apple Lunar Lake analog is the 8c/8T Snapdragon Plus 42, whose ST performance is a little lower than the 258V's but with much, much greater efficiency, and whose MT performance and efficiency are much better than the Lunar Lake chip's. However, the Snapdragon Plus 42 has a significantly cut-down GPU, which was already the weakest part of the processor. I'm not saying it can't make for a compelling product, especially if priced well, but given the compatibility issues it's a tougher sell for Qualcomm than it would've been last year. As for AMD, there is no current analog to Lunar Lake in AMD's lineup. Sure, a down-clocked HX 370 gets fantastic performance/efficiency at 18W ... but that's to be expected from a 12c/24T design, which would frankly be cramped inside thin and lights; it's not really meant for that kind of device. It's an Mx Pro-level chip at heart and should be compared to the upcoming Intel Arrow Lake mobile processors. AMD's smaller Kraken Point is supposedly coming out next year with a more similar CPU, but again it is rumored to cut the GPU, and according to the Notebookcheck review, the Intel iGPU in Lunar Lake is already competitive with, if not better than, the AMD iGPU in the larger Strix Point. It's fascinating how AMD and Qualcomm both designed more workload-oriented, CPU-heavy chips while Intel has basically designed Lunar Lake to be like the base M3: more well-rounded.

But that brings us to the M3, and the comparisons here are pretty ugly for all of its competitors. Again, Apple tends to do very well in CB R24, so we shouldn't extrapolate from this one benchmark that it will be quite this superior to AMD, Intel, and Qualcomm in every benchmark. With that caveat aside ... damn. The ST performance and efficiency are out of this world and simply blow away the other N3B chip, the Lunar Lake 258V, with both a large performance gap and an even larger efficiency gap, nearly 3x. Even the M2 Pro and Snapdragons are just not that close to it. Sure, in MT a down-clocked Strix Point can match the base M3's performance profile at 18W, but that is a massive CPU by comparison, and the comparable Apple chip to the HX 370, the M3 Pro, is leagues better than anything else in this chart, including the HX 370. I have to admit: Apple adopted a 6 E-core design for the base M4, and if the M4 Pro doesn't get its own bespoke SoC design and is instead a chop of the Max, then, depending on how Apple structures the upcoming M4 Max/Pro SoC, it'll be a shame to see Apple lose a product at this performance/efficiency point. The M3 Pro is rather unique. Also, its 6+6 design really highlights how much the E-cores (and P-cores) improved moving from the M2 to the M3, especially in this workload.

Meanwhile, the two chips with CPU designs comparable to the base M3, the Plus 42 and the 258V, are simply not a match for it in MT, requiring double or more the power to match its performance or otherwise offering significantly reduced performance at the same power level. Intel claimed to match/beat the M3 in a variety of MT tasks in its marketing material, but aside from specially compiled SPEC benchmarks, you can see how much power it takes for it to actually do that. Basically, Apple can offer a high level of performance (for the form factor) in a fanless design, and its competitors, including the N3B Lunar Lake, simply cannot. Also, like Intel with Lunar Lake, Apple went for a balanced design here, pairing powerful-for-their-class iGPUs with its CPUs (though obviously some of these chips, especially Strix Point, can also be paired with mobile dGPUs). There is a point to be made about the price of the base MacBook Pro 14": it's quite expensive, has a fan, and is still the base M3 with a low base memory/storage option. But even so, as we can see above, the base Apple chip is not without its merits at that price point/form factor. To reference @casperes1996, now that both Intel and Apple are on N3B ... I guess we figured out who orders pizza the best.

Oh and ... this is the performance/efficiency gulf of the newest generation of AMD, Intel, and Qualcomm processors with the M3 ... with the M4 Macs about to come out.

References:

- Qualcomm Snapdragon X Elite Analysis - More efficient than AMD & Intel, but Apple stays ahead (www.notebookcheck.net): Notebookcheck analysis of the new Qualcomm Snapdragon X Elite ARM processor X1E-78-100 and X1E-80-100.
- AMD Zen 5 Strix Point CPU analysis - Ryzen AI 9 HX 370 versus Intel Core Ultra, Apple M3 and Qualcomm Snapdragon X Elite (www.notebookcheck.net): Notebookcheck takes a look at the new AMD Zen 5 Ryzen AI 9 HX 370 processor and compares it with the Intel Core Ultra, Apple M3 Pro and Qualcomm Snapdragon X Elite.
- Intel Lunar Lake CPU analysis - The Core Ultra 7 258V's multi-core performance is disappointing, but its everyday efficiency is good (www.notebookcheck.net): Notebookcheck CPU analysis of the new Intel Lunar Lake Core Ultra 7 258V as well as the Core Ultra 256V in comparison with the Snapdragon X Elite, AMD Zen 5 & Apple M3.
- Apple MacBook Air 13 M3 review - A lot faster and with Wi-Fi 6E (www.notebookcheck.net): We tested the new MacBook Air M3 with a faster 10-core graphics card, Wi-Fi 6E and 16 GB RAM.
- Apple MacBook Air 15 M3 review - Apple’s large everyday MacBook gets a power up (www.notebookcheck.net): Notebookcheck reviews the new Apple MacBook Air 15 with the M3 SoC, 16 GB of RAM and Wi-Fi 6E.
- Apple MacBook Pro 16 2023 M3 Pro review - Efficiency before performance (www.notebookcheck.net): NotebookCheck tests the new MacBook Pro 16 with the M3 Pro SoC in the basic version with 18 GB of RAM and a 512 GB SSD.
- Apple MacBook Pro 14 2023 M3 Pro review - Improved runtimes and better performance (www.notebookcheck.net): Notebookcheck has tested the MacBook Pro 14 with the 11-core M3 Pro, 18 GB RAM and a 512-GB SSD which costs 2,499 Euro.
- AMD adds GFX1152 "Kraken Point" APU with RDNA3.5 graphics to open-source Linux drivers - VideoCardz.com (videocardz.com): Kraken Point already in the works by AMD. A new GFX ID has been spotted. AMD has just announced its Strix Point silicon at Computex, its first Zen5 APU.

=======

Edit:

Scores for the very top end Elite chip X1E-00-1DE in the dev kit have landed:

Snapdragon X Elite in Dev Kit delivers performance on par with Apple M3 Pro on Geekbench

Qualcomm has officially launched a Snapdragon X Elite mini PC in the form of a development kit. This system features the most powerful processor within the lineup, the X1E-00-1DE, and it has been shown to offer a similar level of performance to the Apple M3 Pro on Geekbench.

www.notebookcheck.net
