Not sure what anyone expected.

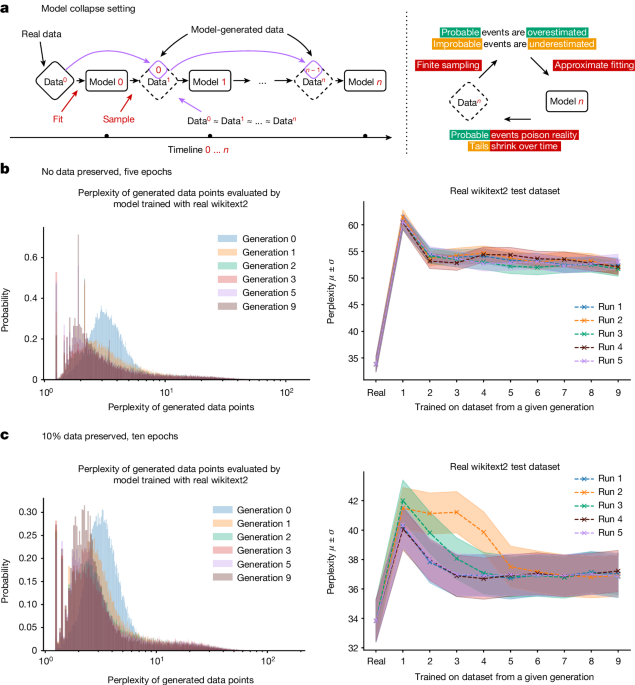

I see people, including some I otherwise respect, swearing that "agentic AI" is different, and yet... Okay, I haven't tried Claude bot or any of the others myself, and maybe I would change my mind. But the underlying tech, the neural net, is the same as in the chatbots and the "thinking" versions. Yes, the bots and the "thinking" versions improve on the basic chatbot way of interacting with the models, but at a much higher token cost, and they still only partially remedy the problems with the underlying tech. Further, it's obvious that such AI agents are security nightmares; the Signal president talked about that a while ago. And then there's this:

Microsoft says Office bug exposed customers' confidential emails to Copilot AI (TechCrunch, techcrunch.com)

> Microsoft said the bug meant that its Copilot AI chatbot was reading and summarizing paying customers' confidential emails, bypassing data-protection policies.

For the most part, none of this feels like actual productivity gains: people are addicted to using these tools, burning out over them, and debating which bot hallucinates more "smoothly". It's not that AI can never produce productivity gains, but... The Tom's article mentioned Solow's paradox: computers "at first" didn't improve measured productivity, which the article attributes to workers being overworked by the newfangled machines, in line with what's happening with AI. Yet the article Tom's itself links to on the subject offers several arguments that it was never a real paradox in the first place.