I’m not sure upset is the correct word. In the grand scheme of things, a computer GPU is very unimportant. Having said that, I greatly prefer macOS and desktops to other platforms, and in recent years I have found Mac desktops to be pretty uninspired. What’s worse, I can’t recall a second version of any of them. Trash can: one version. iMac Pro: one version. Studio: one so far, and rumors say there won’t be a second. 2019 Mac Pro: maybe a second edition is coming, but nothing yet, over three years later.

How is this acceptable for any pro desktop user?

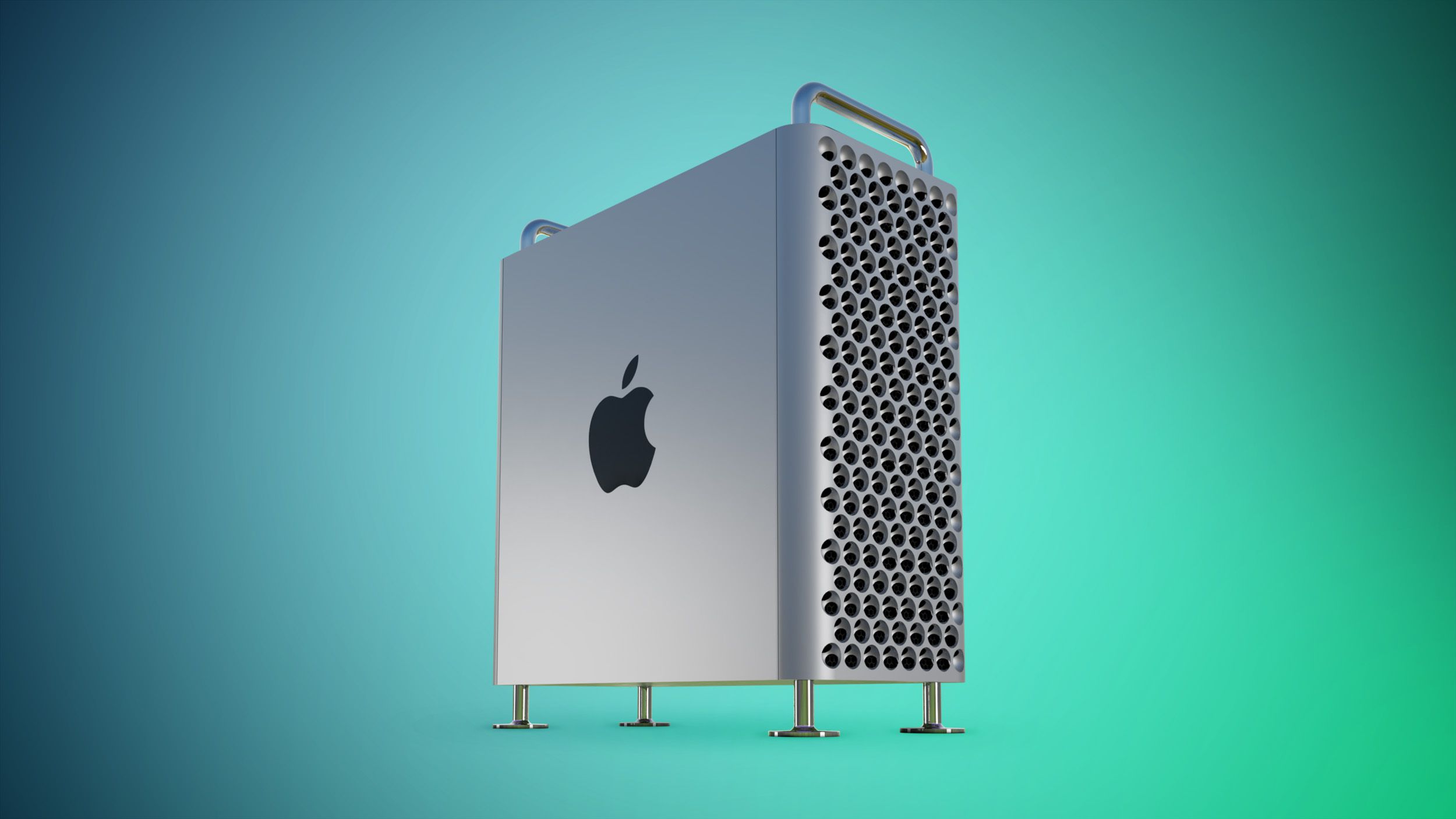

You say I’m obsessed with tactics and strategy, but I’m merely trying to find a strategy. Most pros would have been happy with a tower and replaceable components like PC manufacturers make. It feels like Apple hates that approach because it makes it easy to compare the cost with PCs. Instead they want custom components as a means of increasing margins. I’m fine with that. If the Mac Pro with their SoC is the future of high-end Macs, I’d be happy. Only they seemingly can’t iterate successfully with this approach. They were unable to complete even one full cycle. So we’re left with a situation where they don’t want to make the computer pros prefer (a tower with replaceable components), and they can’t iterate on their preferred approach.

The trash can got only one version because it was a terrible mistake from the word go. As they admitted in 2017, they made a huge and bad bet on the future of how people would use GPUs by designing it entirely around two GPUs running at low frequency to save power. Everyone else in the world gravitated towards big, power-hungry single GPUs instead, including application software authors, leaving Apple with a system design that made no sense and had no good upgrade path. It had other major flaws too, most notably no internal PCIe expansion (which is not just about GPUs: pro audio and video editors often need lots of interface cards for external devices).

The iMac Pro got only one version because it was never intended to be a long-term product. In 2017, they needed to announce their big course correction, but they also knew they couldn't roll out the new tower any time soon. They needed to ship something rather than nothing. As they admitted in the press event, lots of pro users had begun buying high-end consumer 27" iMacs, since for many purposes those were becoming better and faster machines than the aging, dead-end trash can. So they made a pro iMac based on workstation silicon. That product did its job by shipping quickly and giving some customers something to buy in the short term, but there was lots of foreshadowing that Apple didn't think it was the best way to go in the long term.

The Studio replaced the iMac Pro, so presumably that's what Apple actually thinks that product category (a compact, non-expandable desktop workstation) should look like. It isn't even a year old and you're putting it in the grave?

The 2019 Mac Pro has only one version so far for a very different reason. It too is clearly part of Apple's post-2017 vision for the Mac workstation lineup. 2021 is the earliest Apple could have refreshed it, since that's when Intel shipped a newer generation of Xeon W than the one Apple put in the 2019 model (Intel doesn't usually refresh Xeon product lines every year).

However, the last major refresh Apple did on any Intel Mac appears to have been the 27" iMac in August 2020, a few months before the first Apple Silicon Macs launched. Since then, Apple has done nothing with Intel Macs other than delete models from the lineup as they get replaced with Apple Silicon equivalents. It's pretty easy to guess why.

So I just don't see it. I think you're overinterpreting events which have much simpler explanations.