I had my own (positive!) experience with using ChatGPT to figure out how to write particularly knotty C++ meta-template code using the latest C++20 techniques so it was shorter and easier to read (also faster to compile, as it turns out).

And I will say that the engineers on the ground aren't unaware of this, and have been butting up against this longer than you might think. Doesn't stop the tech bros from pushing the tech though.

Nor does it help with learning. It's much like how writing notes out by hand seems to help retention better than typing them: it engages more/different parts of the brain, which helps with learning. Having an AI do it for you has much the same problem, but arguably worse.

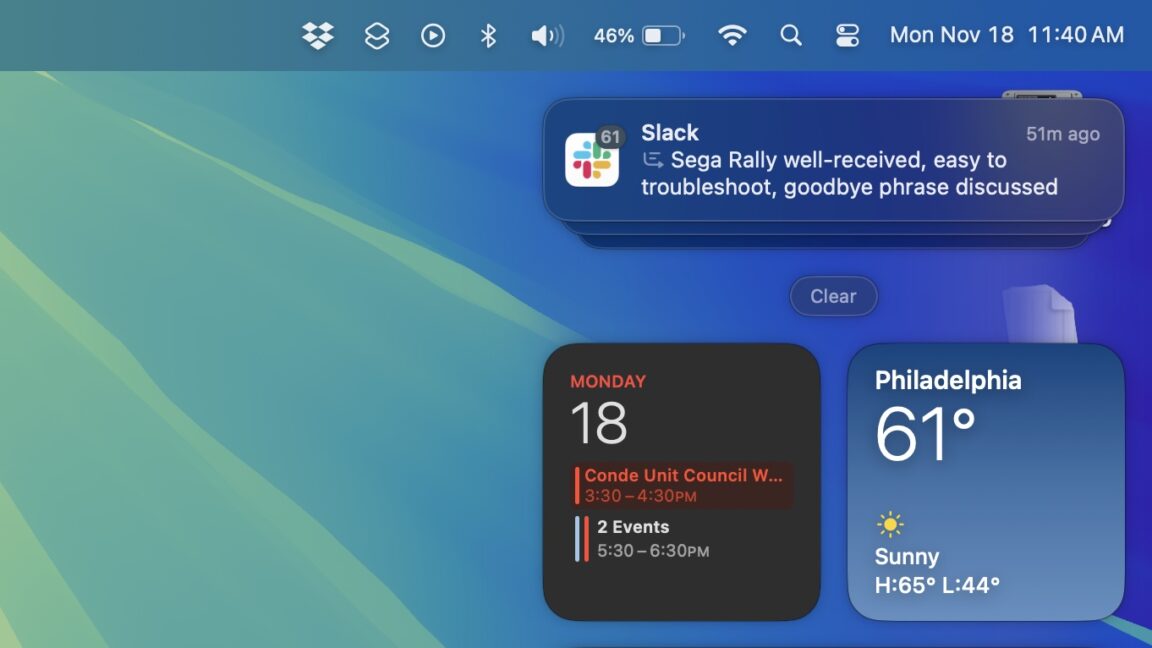

I am working in a space I'm unfamiliar with at the moment (watched my team get shuffled from owning mobile apps to owning web code), and I actually had jokes made: why wasn't I using AI to get a task done faster?

Because I'd like to actually retain the knowledge of how stuff works so I can be faster, and remain experienced. I didn't get to where I was by not learning on the job.

This was using the old, free ChatGPT 3.5, so I was not expecting the chat bot to solve the problem for me ... and it didn't. What it was able to do, however, was still quite useful. Using various prompts I was able to get it to output code which didn't work, but which did point me in the right direction, so I could then look up, with more confidence and more awareness, the use cases of the new C++ meta-template keywords and algorithms where I was a little lost to begin with. During the iteration process I was able to bounce further ideas off of it and also get better explanations for what was going wrong than the standard C++ error messages could provide (even with the huge improvements they've made, C++ error messages can still be gnarly, especially for templates and most of all when doing meta-template programming). I ended up creating a few different solutions using this process and chose the one I liked best. I learned a lot, understood how the code worked, and was very satisfied.

However, that would not have been the case if the chat bot had simply "solved" the problem for me right off the bat. I wouldn't have understood the code, would've struggled to know if it was working, and wouldn't have learned the new techniques myself. I do wonder if there is a missed opportunity here: instead of marketing the AI as something that can write (boilerplate) code for you, what if it were deliberately built as a sounding board? I know there are tools specifically for using AI to explain convoluted error messages, but combine that with a more limited capability to generate code, a greater ability to provide (real) references and resources, and its own compiler/interpreter to test with ... I could actually see that being a legitimately useful teaching tool and even programming aid - something to offer suggestions and explanations rather than complete solutions. That seems more in line, to me, with what might be useful to programmers, and potentially more in line with these chat bots' actual capabilities. That way also, if it is getting things wrong, you aren't expecting it to deliver production-ready code anyway. I am NOT suggesting we can replace human teachers; this is merely a tool/aid. Then again, maybe even this is too much for it to reliably do, despite my own positive experience. I can imagine there might be scenarios where writing suggestions/references is just as hard as, if not harder than, providing a "solution".
