
The Ai thread

- Thread starter Eric


Nycturne

Elite Member

- Joined

- Nov 12, 2021

- Posts

- 1,749

Nvidia stock is taking a "small" beating, allegedly due to the release of DeepSeek AI from China.

This is one problem with AI/ML as an industry rather than as a technology used by industry: there's not much of a moat. It may be expensive to develop those techniques, but not to train new folks once they are known. It doesn't help that OpenAI is already chasing AGI and that's what they want to invest in, while DeepSeek is focused on making what's available today cheaper (which is pretty easy).

AI is more akin to the mathematical side of 3D graphics. The techniques spread quickly; what slows everything down is building the hardware to run it all on as the models scale in size. I think the irony here is that Nvidia should actually do well, considering DeepSeek-V3 is still quite a large model. It mirrors 3D graphics, where Nvidia does well because its moat is the implementation of its acceleration hardware, not the techniques themselves. I wonder if Nvidia getting slammed is more because of the export restrictions (i.e. it can't sell the best shovels to the folks behind DeepSeek) than because it missed some sort of boat here.

Eric

Mama's lil stinker

- Joined

- Aug 10, 2020

- Posts

- 15,367

- Solutions

- 18

- Main Camera

- Sony

I turned off Apple Intelligence on both my Mac and iPhone. I know I'm old and grumpy, but all the auto dictation/reply suggestions are just too much. I like to take a minute and say what I want to say the way I want to say it, for better or worse. This stuff is making us all too lazy to think for ourselves.

Yoused

up

- Joined

- Aug 14, 2020

- Posts

- 8,370

- Solutions

- 1

This stuff is making us all too lazy to think for ourselves.

Welcome to modern consumer capitalism. Everything on the market is built to ramp up our lazy (in the name of convenience). Electric can openers? Power everything on your car. Keurig, anyone? Can you find anyone out there (besides me) who knows how to use a slide rule or can read a vernier scale? I like my computer and spend way too much time on it, but doing stuff is good for me too. For anyone, really.

- Joined

- Sep 26, 2021

- Posts

- 8,026

- Main Camera

- Sony

Can you find anyone out there (besides me) who knows how to use a slide rule or can read a vernier scale?

I can use a slide rule. I even own two.

Yoused

up

- Joined

- Aug 14, 2020

- Posts

- 8,370

- Solutions

- 1

I can use a slide rule. I even own two.

Have you got a bamboo one?

KingOfPain

Site Champ

- Joined

- Nov 10, 2021

- Posts

- 761

Have you got a bamboo one?

Cliff probably carved his own.

- Joined

- Sep 26, 2021

- Posts

- 8,026

- Main Camera

- Sony

Have you got a bamboo one?

I don’t think so. I inherited them from my dad, who used them in school. (I was lucky to be born late enough to skate by in college with my HP-28S and -48G.) They seem to be wood, but I can’t tell wood from bamboo.

KingOfPain

Site Champ

- Joined

- Nov 10, 2021

- Posts

- 761

This stuff is making us all too lazy to think for ourselves.

That's what I've been saying for decades regarding other topics.

Users coming from Windows, who are trained to expect a setup.exe or install.exe, are incapable of installing software on macOS or other operating systems.

I once almost started running after someone who had left his car's headlights on, only for the car to turn them off a few seconds later. When those people drive a car that doesn't have this feature and doesn't warn about the headlights being on, the battery will be dead by the time they get back.

Not to mention that about half the drivers in Germany seem to have forgotten how the turn signal works. I'm not sure whether they expect the car to do it, whether they are just lazy, or whether they are simply assholes who don't care.

I once drove a loaner because my car was in for inspection. After I had opened the gate to the parking lot of the company I work for, the car refused to drive. It took me a while to figure out that it wanted me to fasten my seatbelt just to drive a few meters into the parking lot.

Neighbors of mine have what one might call a smart home; they can do almost anything from their smartphones. For the last two days, the lights in their living room were on when I left my house in the morning. I'm a really early riser, and I doubt anyone in that house was awake around 4 am. The home is so smart that they simply forget to turn off the lights.

And I could rant all day long about "corrections" that Word/Outlook/Excel perform, which I then have to correct back to the original...

I hate products that think they are more intelligent than I am, because in most cases that's simply not true.

But a lot of people who think the products are "intelligent" become more and more lazy and stupid with every iteration.

I don't have any problems when knowledgeable people play around with ChatGPT and the like.

I fear people who have no idea how these tools work and think that the output is the truth, because it is "artificial intelligence".

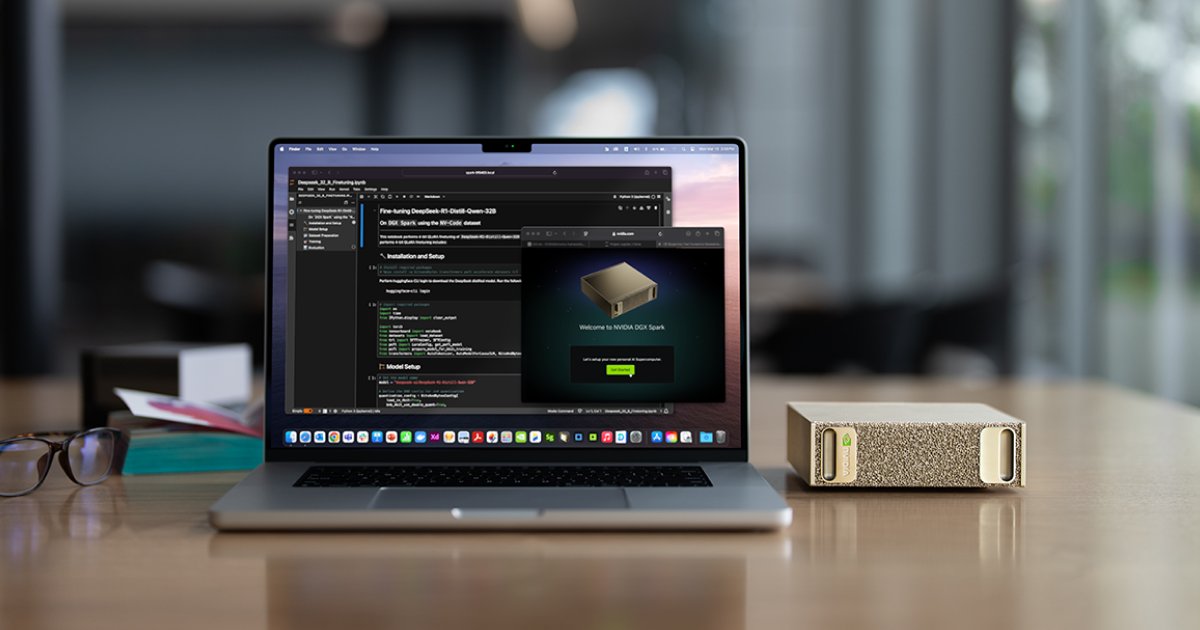

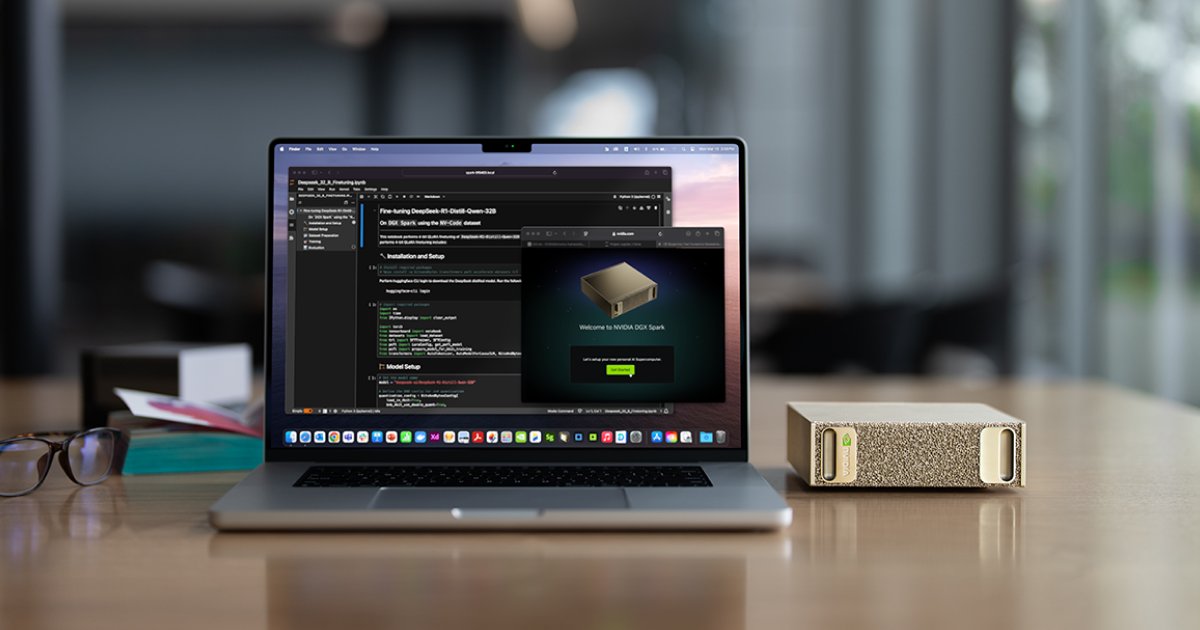

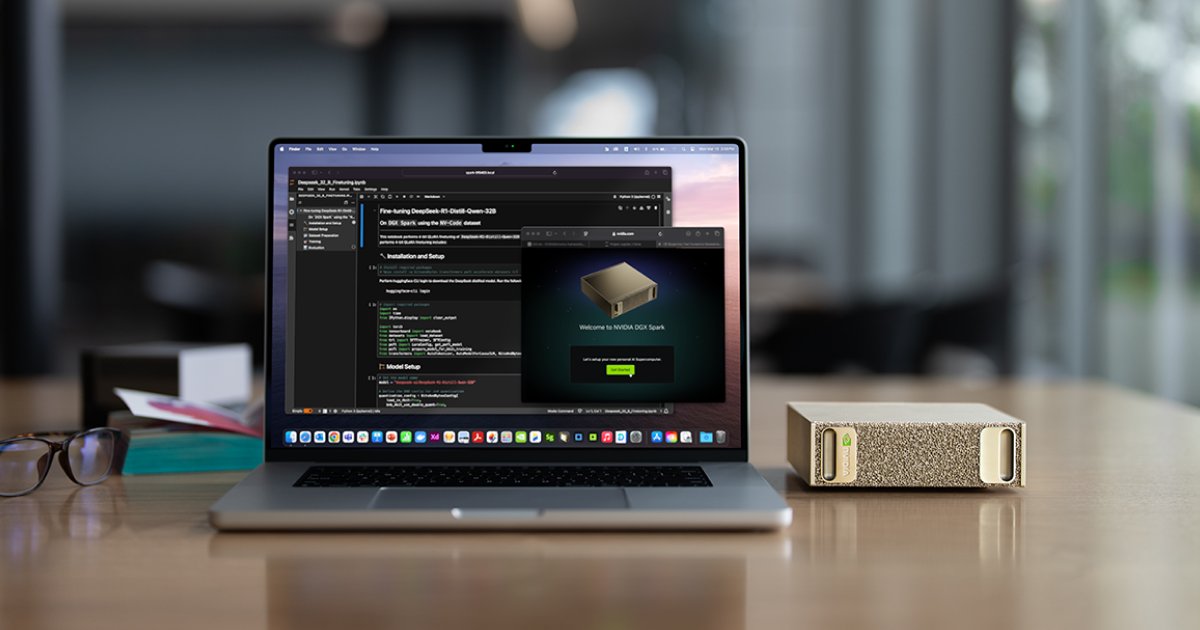

Saw some discussion on MR of NVIDIA's Project DIGITS, an AI-focused PC recently announced at CES. It seems interesting (and I didn't get any hits when I searched techboards/all threads for "Project DIGITS"), so I'm posting info about it here. Expected release: May 2025.

Basic specs:

"At its heart lies the GB10 Grace Blackwell Superchip, delivering an impressive 1 petaflop of AI performance. The system packs 128GB of unified memory and up to 4TB of high-speed NVMe storage, enabling users to run large language models with up to 200 billion parameters locally."

Source:

Starting price is $3,000, and I gather all models have 128 GB RAM/1 PFLOPS GPU.

Bandwidth was not specified. Here's an estimate that it will have 825 GB/s:

"From the renders shown to the press prior to the Monday night CES keynote at which Nvidia announced the box, the system appeared to feature six LPDDR5x modules. Assuming memory speeds of 8,800 MT/s we'd be looking at around 825GB/s of bandwidth."

Source: https://www.theregister.com/2025/01/07/nvidia_project_digits_mini_pc/

I've seen competing claims saying it will be much lower. But 825 GB/s seems strong, so if that's correct, why didn't NVIDIA include it in their announcement along with all the other specs?

Bandwidth comparison, for context:

M4 Max, upper spec (est $4k for M4 Max Studio with 128 GB RAM/1 TB SSD, if such a machine is offered): 546 GB/s.

5080 desktop GPU (MSRP $1k, street price TBD): 960 GB/s.

5090 desktop GPU (MSRP $2k, street price TBD): 1,792 GB/s
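For anyone wondering where these numbers come from: peak memory bandwidth is just bus width times transfer rate. Here's a quick sketch (my own, not from any of the linked articles) that reproduces the three comparison figures. The bus widths and transfer rates are the commonly published specs for these parts; I'm supplying them as assumptions, since none of them are stated above.

```python
# Peak theoretical memory bandwidth:
#   GB/s = bus width (bits) x transfer rate (MT/s) / 8 bits per byte / 1000
# Bus widths and rates below are commonly published figures (assumptions here).


def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Return peak bandwidth in decimal GB/s."""
    return bus_width_bits * transfer_rate_mts / 8 / 1000


parts = {
    # name: (bus width in bits, transfer rate in MT/s)
    "M4 Max (full), LPDDR5X": (512, 8533),
    "RTX 5080, GDDR7": (256, 30000),
    "RTX 5090, GDDR7": (512, 28000),
}

for name, (width, rate) in parts.items():
    print(f"{name}: {peak_bandwidth_gbs(width, rate):,.0f} GB/s")
```

This prints roughly 546, 960 and 1,792 GB/s, matching the list. Real-world throughput is lower than these theoretical peaks.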


Pretty sure that article is out of date and has wrong information on several fronts. The CPU cores aren’t V2; they’re newer, and I’m not sure how you get six modules to add up to 128GB and 825GB/s. For context, the M4 Max has a transfer speed of about 8,500 MT/s with 8 modules, yielding 546GB/s of bandwidth. Plus, 128 isn’t divisible by 6.
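Running the Register's figures backwards shows why they look odd. This is a rough sketch using the same peak-bandwidth formula (bus width x MT/s / 8); the 825 GB/s, 8,800 MT/s and six-package numbers come from the article, and the 16-bit LPDDR5X channel width is the standard channel size. The article doesn't show its working beyond the 8,800 MT/s assumption, so the 825 figure may simply be rounded.

```python
# Rough sanity check of the Register estimate: six LPDDR5X packages,
# 8,800 MT/s, ~825 GB/s, 128 GB total (figures quoted from the article).
# Peak bandwidth (GB/s) = bus width (bits) x transfer rate (MT/s) / 8 / 1000

LPDDR5X_CHANNEL_BITS = 16  # LPDDR5/5X channels are 16 bits wide


def implied_bus_width_bits(bandwidth_gbs: float, transfer_rate_mts: float) -> float:
    """Bus width in bits needed to reach bandwidth_gbs at transfer_rate_mts."""
    return bandwidth_gbs * 1000 * 8 / transfer_rate_mts


width = implied_bus_width_bits(825, 8800)
print(f"Implied bus width: {width:.0f} bits")
print(f"In 16-bit channels: {width / LPDDR5X_CHANNEL_BITS:.3f}")
print(f"Per package (6): {width / 6:.1f} bits")

# 128 GB across six identical packages is not a whole number of GB each
print(f"128 GB / 6 = {128 / 6:.2f} GB per package; divisible: {128 % 6 == 0}")
```

An implied 750-bit bus works out to 46.875 channels, which isn't a whole number, and 128 GB doesn't split evenly across six identical packages either.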


Pretty sure that article is out of date and has wrong information on several fronts.

I assume you're referring to the article in the Register, not the one on Medium. Yeah, good point about 128 not being divisible by 6. Do you have a prediction for NVIDIA's bandwidth? I haven't tried to estimate it myself.

Do you have a prediction for NVIDIA's bandwidth?

Yeah, the Register article. The lowest it could be is a 256-bit bus using 4x32GB modules. That would put it around M4 Pro/Strix Halo bandwidth (~270-300GB/s, depending). If they really are using six modules in a 2x32 + 4x16 configuration, as @Yoused said, that's a 384-bit bus, and it would be similar to the binned M4 Max, about 400GB/s. If they use eight modules (8x16), it would be similar to the full M4 Max, roughly 550GB/s. They could go higher than that too, but it would require many more, smaller modules. I don't remember how small the LPDDR modules go, but eventually you run into issues where the smallest RAM you can offer is pretty large (the full M4 Max starts at 48GB: 8x6GB modules). Then again, they may be planning on offering only a 128GB variant.
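Here's the same back-of-the-envelope math for the three layouts above, as a hedged sketch. It assumes 64 bits of bus per package (what "4 packages give a 256-bit bus" implies) and LPDDR5X-8533, the speed Apple uses in the M4 Pro/Max. NVIDIA hadn't published GB10's memory speed, so that part is a guess.

```python
# Peak bandwidth for candidate 128 GB package layouts.
# Assumptions: 64 bits of bus per package, LPDDR5X-8533 (not confirmed for GB10).

BITS_PER_PACKAGE = 64      # implied by the post: 4 packages -> 256-bit bus
TRANSFER_RATE_MTS = 8533   # assumption: LPDDR5X-8533


def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Peak bandwidth in GB/s = bits x MT/s / 8 / 1000."""
    return bus_width_bits * transfer_rate_mts / 8 / 1000


configs = {
    # label: list of package capacities in GB
    "4 x 32 GB": [32] * 4,
    "2 x 32 GB + 4 x 16 GB": [32] * 2 + [16] * 4,
    "8 x 16 GB": [16] * 8,
}

for label, packages in configs.items():
    bus = len(packages) * BITS_PER_PACKAGE
    bw = peak_bandwidth_gbs(bus, TRANSFER_RATE_MTS)
    print(f"{label}: {sum(packages)} GB, {bus}-bit bus, ~{bw:.0f} GB/s")
```

That gives about 273, 410 and 546 GB/s, i.e. M4 Pro, binned M4 Max and full M4 Max territory respectively.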

If, for some inexplicable reason, you put in two 32s and four 16s, that would be six for 128.

Aye, I was just about to write that. While that would be odd, I can't think of a reason why it wouldn't work.
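A quick brute-force check of which package mixes reach 128 GB, assuming only 16 GB and 32 GB packages are on the table. Real LPDDR5X parts come in other capacities too, so this is illustrative only.

```python
from itertools import combinations_with_replacement

PACKAGE_SIZES_GB = (16, 32)  # assumption: only these two capacities considered
TARGET_GB = 128


def package_mixes(count: int) -> list[tuple[int, ...]]:
    """All multisets of `count` packages whose capacities sum to TARGET_GB."""
    return [
        combo
        for combo in combinations_with_replacement(PACKAGE_SIZES_GB, count)
        if sum(combo) == TARGET_GB
    ]


for count in (4, 6, 8):
    print(count, package_mixes(count))
```

With six packages the only match is four 16s plus two 32s; four and eight packages each work with a single uniform size.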

@thenewperson found links supporting a 273GB/s bandwidth, about the same as Strix Halo/M4 Pro and the lowest bandwidth they could use while still supplying 128GB of RAM. It'll probably have FP32 (graphics/non-AI compute) performance similar to or worse than those chips as well.

Herdfan

Resident Redneck

- Joined

- Jul 8, 2021

- Posts

- 5,965

I have no idea how accurate this is, but I have a couple of, well, paranoid friends. As a result, we first moved our text group to WhatsApp, and all was fine until FB took it over; then we moved to Signal. All good.

But according to this guy, AI will be able to "see" what is on your screen, so it won't matter if it is end-to-end encrypted. It will only be as secure as the person you send a message to. So if User A has AI turned off, but sends a message to User B who has it turned on, then AI can see what A sent.

Any thoughts?

They are also looking at getting these:

unplugged.com



UP Phone | Privacy-First Smartphone That Proves It

We don’t just promise privacy—we prove it. UP Phone is the only smartphone with a hardware battery disconnect switch, background VPN, encrypted cloud, and uncensored App Center.

So if User A has AI turned off, but sends a message to User B who has it turned on, then AI can see what A sent.

Capturing text and images from bitmap images was well understood long before the "AI" craze started.

Fear mongering, IMHO.
