Apple will be covering the latest iteration of the Apple TV, this time with 8K support, and I'll step away as a result of a severe case of monotonous boredom, having hit the mute button, while idly playing with my nephew's pro wrestling action figures. During those 37 seconds, the Apple Silicon Mac Pro will be announced, and I'll miss it entirely, only to come here on TechBoards and bitch about it for multiple pages, as is tradition. Nobody will do me the courtesy of telling me about the announcement; they will just play along with my misery, as is tradition.

My grousing aside, I'm hoping that WWDC will finally put an end to my Mac vs. PC decision-making process. While I've always been likely to stay with the fruit company for my desktop computing needs, that has become more likely over the past few weeks. My three greatest concerns:

1. The ability to play Alan Wake 2, the sequel to my all-time favorite computer game. Details of the game have finally been announced and a release date of October 17th has been set, just in time for Halloween. Much to my dismay, the game will feature two campaigns, with the returning hero being playable in only about 50% of the game, and only appearing in locations from the expansion, areas which I very much disliked.

Alan Wake 2 has gone from "crawl across broken glass" to "wait for reviews" since that reveal. Perhaps this is actually a good thing for me, personally, because it would have been insanity to build an entire PC just for one game. Now that the irrational exuberance has subsided, the imperative to build a PC, for this specific purpose, has waned considerably.

2. As we have covered in the gaming forum, the number of native Apple Silicon titles announced for Mac before WWDC has been significantly more substantial than I had expected. (Something that @Cmaier predicted over a year ago.)

I've always said that I don't need access to the entire Windows PC library, just enough to keep me entertained. I would say that 80% of the games that I play are turn-based isometric RPGs, and essentially all of those have Mac native versions already, including the upcoming Baldur's Gate 3. Of the remaining 20%, I prefer horror and science fiction, which just so happen to be plentiful among the upcoming Mac games.

On top of that, as @dada_dave has been extensively covering, the Asahi Linux team have made remarkable progress with support for Windows games, and continue their endeavors with Proton compatibility for their distro. As I just mentioned in that thread, CodeWeavers have announced initial support for DirectX 12 in CrossOver, thus expanding gaming options further.

3. Finally, concerning @dada_dave's words of wisdom as written in the above post...

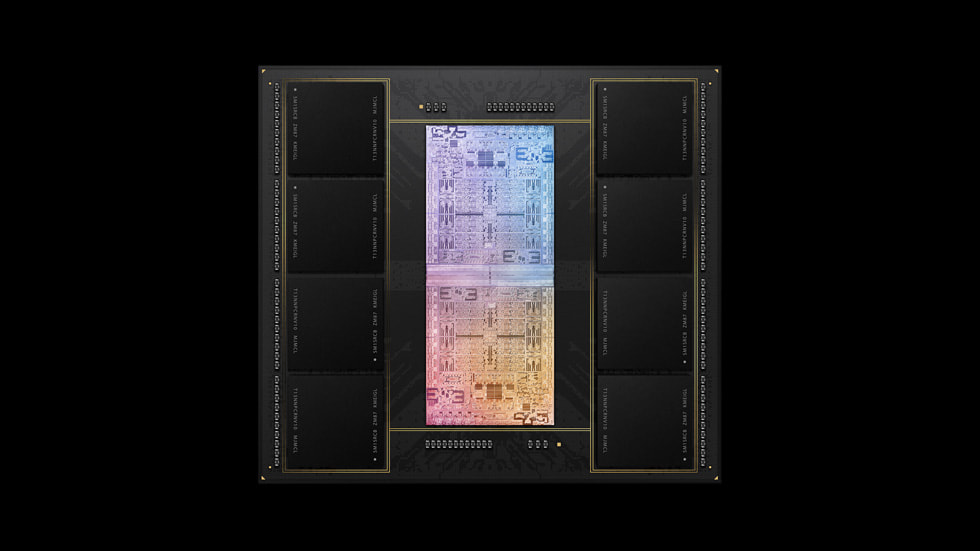

Assuming our resident one-armed CPU architect is correct, there's a good chance that we will know more about the Apple Silicon Mac Pro by the time WWDC is over, being the final piece of the transition puzzle. As I've been bellyaching about, I'm concerned that Apple will punt its GPU efforts back to AMD, which is a solution that I find disquieting. I'm not going to purchase a Mac Pro just to play games. I would purchase an M(x) Pro Mac mini or M(x) Max Mac Studio with upgraded graphics for such activities. If Apple's solution for performance GPUs is to go crawling back to AMD, then lower-tier Macs would be stuck with eGPU options, which fits in the "never again" category for me. At this point, that's the only disqualifier.

Having chatted with a number of big brain folks here about the issue, both publicly and privately, my understanding is that the likelihood of Apple using third-party GPUs is dubious at best. As an example, over at the fever dream realm known as the MacRumors forum, the esteemed @leman just posted the following on the matter:

That's the short version of what I've heard from multiple smart folks on the subject. There are technical, financial, and cultural reasons that Apple is unlikely to use AMD GPUs, but until the Apple Silicon Mac Pro is finally announced, I'll still have a nagging feeling in the back of my skull. From my perspective, if Apple has confidence in their ability to release performant GPUs, then I'll have confidence in purchasing a new Mac as my next computer.

Thus far, all signs point to me firmly staying on the Apple ranch, which is a huge relief. It also means that, with my continued use of Macs, I won't become obsolete on these forums. Whether that is a good thing or a bad thing depends upon one's opinion on the value of my posts. Ha!