> One thing I am curious about: there are charts on which you can compare M-series Macs against NVIDIA and AMD graphics cards; in OpenCL, the Mac GPU gets less than half the score of the dGPUs, but in Metal, the gap is quite a bit smaller, the highest Mac being behind the highest card by around 5%. I realize that OpenCL has some serious deficiencies and should not be relied on as a good measure. What I am curious about is whether there are performance/efficiency comparisons between Metal and the other graphics APIs. How does Metal compare to Vulkan, DirectX and OpenCL for the same jobs?

Comparing across APIs when the hardware is different is extremely difficult. For OpenCL/GL on macOS, we do occasionally have the same (AMD) hardware running the other APIs, but the OpenGL/CL implementation on macOS is practically deprecated and/or running through a Metal translation layer anyway. In general though, I’ve tried looking at this using benchmarks that use different APIs for the same task (Geekbench, some 3DMark ones, Aztec Ruins, etc.) and while I haven’t charted them all out rigorously, I’ve never noted a consistent pattern. From what I gather from people who work in the field, none of the modern APIs (DirectX, Vulkan, Metal) is innately, substantially superior in performance, but drivers for the particular hardware matter a lot and basically swamp most things, even competing with or surpassing hardware differences.

M4 Mac Announcements

- Thread starter casperes1996

> Some scores for a variety of LLMs being run on M3 Ultra, M3 Max and a 5090
>
> From the review here: https://creativestrategies.com/mac-studio-m3-ultra-ai-workstation-review/
>
> View attachment 34155

I re-read the article (thanks again for linking it) and have some additional thoughts:

(1) In describing the table comparing a 5090 PC to AS Macs, he says "Below is just a quick ballpark of the same prompt, same seed, same model on 3 machines from above. This is all at 128K token context window (or largest supported by the model) and using llama.cpp on the gaming PC and MLX on the Macs....The theoretical performance of an optimized RTX 5090 using the proper Nvidia optimization is far greater than what you see above on Windows, but this again comes down to memory. RTX 5090 has 32GB, M3 Ultra has a minimum of 96GB and a maximum of 512GB. [emphasis his]"

The problem is that, when he presents that table, he doesn't explicitly provide the size of the model he's using, so I don't know the extent to which it exceeds the 32 GB of RAM on the 5090. I don't understand why tech people omit such obvious stuff in their writing. Well, actually, I do; they're not trained as educators, and thus not trained to ask "If someone else were reading this, what key info would they want to know?" OK, rant over. Anyway, can you extract this info from the article?

(2) This is interesting:

"You can actually connect multiple Mac Studios using Thunderbolt 5 (and Apple has dedicated bandwidth for each port as well, so no bottlenecks) for distributed compute using 1TB+ of memory, but we’ll save that for another day."

I've read you can also do this with the Project DIGITS boxes. It would be interesting to see a shootout between an M3 Ultra with 256 GB RAM ($5,600 with 60-core GPU or $7,100 with 80-core GPU) and 2 x DIGITS ($6,000, 256 GB combined VRAM). Or, if you can do 4 x DIGITS, then that ($12,000, 512 GB VRAM) vs. a 512 GB Ultra ($9,500 with 80-core GPU).

(3) And this is surprising:

"...almost every AI developer I know uses a Mac! Essentially, and I am generalizing: Every major lab, every major developer, everyone uses a Mac."

How can that be, given that AI-focused data centers are commonly NVIDIA/CUDA-based? To develop for those, you would (I assume) want to be working on an NVIDIA workstation. Is the fraction of AI developers writing code for data center use really that tiny?
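As a rough aid for the price comparison in (2), here is a minimal Python sketch that works out dollars per GB of memory for each configuration. The prices and capacities are the figures quoted above; the dictionary layout and the function name are purely illustrative, and the sketch ignores everything except memory capacity (bandwidth, interconnect overhead, compute).

```python
# Price (USD) and memory (GB) figures as quoted in the post above.
# The labels and this data layout are illustrative, not from any vendor.
configs = {
    "M3 Ultra 256 GB (60-core GPU)": (5600, 256),
    "M3 Ultra 256 GB (80-core GPU)": (7100, 256),
    "2 x DIGITS": (6000, 256),
    "4 x DIGITS": (12000, 512),
    "M3 Ultra 512 GB (80-core GPU)": (9500, 512),
}


def dollars_per_gb(price_usd, memory_gb):
    """Return the cost in USD per GB of memory."""
    return price_usd / memory_gb


# Cost per GB for every configuration, keyed by label.
cost_table = {
    name: dollars_per_gb(price, memory)
    for name, (price, memory) in configs.items()
}

for name, cost in cost_table.items():
    print(f"{name}: ${cost:.2f}/GB")
```

On these numbers the 512 GB Ultra comes out cheapest per GB (about $18.55/GB), while DIGITS works out to about $23.44/GB at either count. That says nothing about how fast each setup actually runs a given model.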

NotEntirelyConfused

Power User

- Joined

- May 15, 2024

- Posts

- 186

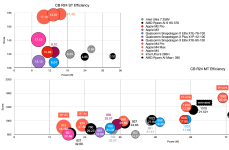

> The interesting thing here is that in the fanless Air (and presumably iPad), the M4 is indeed constrained in terms of performance, but that actually makes it a much more efficient performer.

Why is that interesting? Isn't it completely expected? Being lower on the curve implies being more efficient.

> (3) And this is surprising:
>
> "...almost every AI developer I know uses a Mac! Essentially, and I am generalizing: Every major lab, every major developer, everyone uses a Mac."
>
> How can that be, given that AI-focused data centers are commonly NVIDIA/CUDA-based? To develop for those, you would (I assume) want to be working on an NVIDIA workstation. Is the fraction of AI developers writing code for data center use really that tiny?

I don't have a large sample to observe but I'll bet they all use Mac laptops, remotely accessing AI servers over ssh (or possibly RDC?).

> Why is that interesting? Isn't it completely expected? Being lower on the curve implies being more efficient.

I was surprised by the amount of efficiency gained. That implies Apple is pushing the base M4 (and potentially the others) much further on its curve than I had thought - that it was pushed further than the base M3 was obvious but it wasn’t clear what the shape of that curve was until now, especially with the two added E-cores and change in architecture. In brief, it was the “much more efficient performer” that was interesting (to me).

> I don't have a large sample to observe but I'll bet they all use Mac laptops, remotely accessing AI servers over ssh (or possibly RDC?).

I was referring to code development rather than access: if they want to develop code locally for use on an NVIDIA-based AI server, they'd want to write their code on an NVIDIA-based workstation.

Or are you saying most who develop server-based AI models only use their personal computers to access the server, and do their development work on the server system itself (on, say, dedicated development nodes that are firewalled from the production nodes)? That's also possible but, in that case, the Mac's AI capabilities become irrelevant, which wouldn't make sense within the context of the article (the article's author was saying the high percentage of Mac users among AI developers indicates how suitable the Mac is for AI development work).

NotEntirelyConfused

> Or are you saying most who develop server-based AI models only use their personal computers to access the server, and do their development work on the server system itself (on, say, dedicated development nodes that are firewalled from the production nodes)? That's also possible but, in that case, the Mac's AI capabilities become irrelevant, which wouldn't make sense within the context of the article (the article's author was saying the high percentage of Mac users among AI developers indicates how suitable the Mac is for AI development work).

I expect it's some combination of both. I don't have data, though.

NotEntirelyConfused

Fascinating translation/repost today in an AT forum: M4 chip analysis

Assuming that site is correct, the M4 Pro *is* in fact a chop of the M4 Max, and it does actually have 12 P cores, of which two are fused off.

This is a really interesting reversal of Apple's decisions for the M3, where the Pro was a very different chip from the Max, with its own layout and masks. Presumably, that was worthwhile for the M3 Pro, which was a much smaller CPU than the Max, but not for the M4, where the CPU is only a bit smaller.

> Fascinating translation/repost today in an AT forum: M4 chip analysis

My guess is that the M3 Pro was an experiment (also in a 6 E-core cluster as well as the Pro having its own die). Given the lead times on development I wouldn't expect the reversal of the Pro being a chopped Max in the M4 generation to mean much ... yet. We'll see if Apple ever returns to the idea of the Pro getting its own die.

For instance, looking at the M4 in CBR24:

View attachment 34290

there is a pretty large gap in power levels between the smallest M4 Pro and the most power-hungry version of the base M4. Definitely room for a processor in there eventually. My own thoughts: every die gets its own 6 E-core cluster, then the base Mx is 4 P-cores, the Pro is 6 and 8 P-cores, and the Max is 10 and 12 P-cores. This is not necessarily what I think Apple will do; it just appeals to my sense of progression, for lack of a better word. It fills in the power level gap and makes a nice pattern. What can I say?

But yay a die shot! Not that the die shot means as much anymore since a) Apple already basically came out and said not to expect the M4 Ultra and b) the original M3 Max die shots didn't show a connector and ... well ... in the end Apple (probably) went back and added one (along with TB 5). On the other hand maybe I'll be able to use those die shots to confirm if my estimates of the M4 Max CPU size are correct. It would be helpful if they were annotated but even I might be able to do it based on annotated M3 Max die shots. Not sure when I'll get around to doing that.

Jimmyjames

Elite Member

- Joined

- Jul 13, 2022

- Posts

- 1,434

> Fascinating translation/repost today in an AT forum: M4 chip analysis

The main thing I take from this kind of information and the M3 Ultra is… I have no idea what Apple will do and there is no point trying! Other than fun I suppose.

> My guess is that the M3 Pro was an experiment (also in a 6 E-core cluster as well as the Pro having its own die). Given the lead times on development I wouldn't expect the reversal of the Pro being a chopped Max in the M4 generation to mean much ... yet. We'll see if Apple ever returns to the idea of the Pro getting its own die.

Also, it looks like the die shots censor where the UltraFusion would be: there's a watermark that auto-translates to "unlisted"/"non-discovered". Also, the original EE Times article states: "Figure 8 shows the opening of the chips of the M4 Pro and M4 Max (the internal photo of the details of the wiring layer peeling is omitted)."

I guess they didn't have permission to post those parts of the die shot specifically so TechanaLye could of course sell their report. Although, as already said, given Apple’s comments and Apple’s willingness to modify old dies, it’s not clear that the die shot would be as useful here anyway.

Also unfortunately there is no absolute die size given in the article that I could see so even if I were able to roughly suss out the sizes of the E and P-core clusters in pixels, I wouldn't be able to translate that into mm^2 to sanity check my earlier approximations. Ah well. Probably close enough. I suppose I could do the same approach I used for the M4 chips and apply it to the M3 chips to see how close the approximation is to the actual die shots. Might do that eventually just out of my own personal interest.

Quick update: sadly no Fire Range or extended data on Strix Halo, but NBC posted a 13" M4 review:

It manages to use less power than the M3 (can't remember which form factor the M3 plotted was measured in) while attaining significantly more performance. This might have also been a particularly good piece of silicon, as the single-threaded efficiency of this particular M4 was 8% better than previously found (not shown). Overall, Apple managed to design a performance core that could clock higher for both ST and MT but didn't sacrifice efficiency when thermally constrained from doing so.

Edit: of course it does have two extra E-cores compared to the M3. Also it is worth reiterating that if this were an x86 chip instead of the M4, more than doubling the power and only getting 20% more performance is pretty damn bad! The M4 is being pushed way, way along its curve here. It's just that the base level efficiency of Apple Silicon, and the M4 in particular, relative to x86 is so high, especially factoring in die size, that Apple can effectively get away with it. This is true even relative to its direct predecessor, the M3. Even at its worst, the M4 in the mini, pushed all the way, is only 20% less efficient than the M3 was, for a gain of 64% more performance! How much of that advantage is the two extra E-cores versus the new E and P core designs is unclear, but that the M4 has a huge advantage over its predecessor is definitely clear. And of course it is still more efficient than any x86 in its power/performance envelope - though the far larger Strix Point gets close.
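To make the arithmetic behind those percentages explicit: if efficiency is taken as performance per watt, the power ratio follows directly from the performance and efficiency ratios. A minimal Python sketch, assuming that definition; the function names are mine, and the inputs are the rounded percentages quoted above rather than NBC's raw measurements.

```python
def implied_power_ratio(perf_ratio, eff_ratio):
    """Power ratio implied by a performance ratio and an efficiency ratio.

    Assumes efficiency = performance / power, so
    power_ratio = perf_ratio / eff_ratio.
    """
    return perf_ratio / eff_ratio


def efficiency_ratio(perf_ratio, power_ratio):
    """Efficiency (perf per watt) ratio from performance and power ratios."""
    return perf_ratio / power_ratio


# M4 mini vs. M3: 64% more performance at 20% lower efficiency.
mini_vs_m3_power = implied_power_ratio(1.64, 0.80)

# Doubling the power for 20% more performance (the post says "more than
# doubling", so this is an upper bound on the resulting efficiency ratio).
pushed_eff = efficiency_ratio(1.20, 2.0)

print(f"Implied power ratio, M4 mini vs. M3: {mini_vs_m3_power:.2f}x")
print(f"Efficiency ratio after doubling power: {pushed_eff:.2f}")
```

So the quoted figures imply the M4 in the mini draws roughly 2.05x the M3's power, and doubling the power for 20% more performance leaves at most 60% of the original performance per watt.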

> Should probably be in the gaming section, but Cyberpunk 2077 is coming to macOS early next year.
>
> Just Announced — Cyberpunk 2077: Ultimate Edition Coming to Mac!
>
> Available early next year on Macs with Apple silicon, the Ultimate Edition will launch on the Mac App Store and Steam. (www.cyberpunk.net)

A little delayed, but finally a release date:

'Cyberpunk 2077' comes to the Mac July 17 — patient Apple gamers get support for every Apple Silicon chip, new Metal features, and Spatial Audio

16GB of RAM is recommended. (www.tomshardware.com)

Sounds like an impressive port. Hopefully it is indeed a good one.

Also this:

CD Projekt Red has embraced Apple's version of tiled rendering, which breaks up the rendering into smaller chunks, and assigns each one to a GPU core. To do this, the developer added support for Apple's Metal API, and used the Metal C++ interface and shader converter to optimize for Apple Silicon GPUs.

Jimmyjames

> A little delayed, but finally a release date:

Ha! I was just coming here to mention this. I first saw it mentioned in the AppleInsider article. Given the source I thought they may have been mistaken. If more than one place mentions it, it leads me to think there may be truth to it. Very curious to see how it performs given afaik no other AAA games on macOS use TBDR.

diamond.g

Elite Member

- Joined

- Dec 24, 2021

- Posts

- 1,087

> Ha! I was just coming here to mention this. I first saw it mentioned in the AppleInsider article. Given the source I thought they may have been mistaken. If more than one place mentions it, it leads me to think there may be truth to it. Very curious to see how it performs given afaik no other AAA games on macOS use TBDR.

I thought all Metal API games were TBDR. You have to do something special for that to be the case?

exoticspice1

Site Champ

- Joined

- Jul 19, 2022

- Posts

- 431

I wonder if 4K60 native Ultra is possible with M5 Max/Ultra?

> I thought all Metal API games were TBDR. You have to do something special for that to be the case?

I have to admit I’m confused by this as well. As far as I can tell, yes, the GPU is always trying to leverage the TBDR capability and avoid rendering objects it doesn’t have to, but unless the graphics engine is properly tuned, the GPU may not be able to do so effectively. I have little personal knowledge and zero experience so I’m not sure why, but I’ve seen multiple people talking about TBDR as though some work is required by the developers to make it useful. I know some GPUs, like Qualcomm's, even come with a switch that lets the GPU change rendering modes (including one that sounds like TBDR) so that developers can use the one that suits their engine. One could simply say that this just means there are tradeoffs for different rendering modes for different types of scenes (and there are), but taken together it seems like an engine itself can be better or worse for TBDR.

exoticspice1

> View attachment 35880
>
> I wonder if 4K60 native Ultra is possible with M5 Max/Ultra?

Yikes, the “For this Mac” preset doesn't have RT on by default. So all these requirements are WITHOUT RT. The fact that M4 Max is only capable of 1440p60 without RT for a $2K desktop is not good. Apple really needs to step it up with M5 series.

“For this Mac” preset — Cyberpunk 2077 | Technical Support — CD PROJEKT RED

Welcome to CD PROJEKT RED Technical Support! Here you will find help regarding our games and services, as well as answers to frequently asked questions.
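For a rough sense of the gap between 1440p60 and 4K60, one can compare raw pixel rates. This is only a sketch: it assumes "1440p" means 2560x1440 and "4K" means 3840x2160, and that GPU cost scales linearly with pixels per second, which real games only approximate. The function name is illustrative.

```python
def pixel_rate(width, height, fps):
    """Pixels that must be rendered per second at a given resolution and FPS."""
    return width * height * fps


# Assumed resolutions: 1440p = 2560x1440, 4K = 3840x2160.
qhd_60 = pixel_rate(2560, 1440, 60)
uhd_60 = pixel_rate(3840, 2160, 60)

# How much more pixel throughput 4K60 needs relative to 1440p60.
scale = uhd_60 / qhd_60

print(f"4K60 needs {scale:.2f}x the pixel rate of 1440p60")
```

Under those assumptions, native 4K60 needs about 2.25x the pixel throughput of 1440p60 at the same settings.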

> Yikes, the “For this Mac” preset doesn't have RT on by default. So all these requirements are WITHOUT RT. The fact that M4 Max is only capable of 1440p60 without RT for a $2K desktop is not good. Apple really needs to step it up with M5 series.

Here are the ray tracing recommendations:

Ray Tracing on Mac — Cyberpunk 2077 | Technical Support — CD PROJEKT RED

Welcome to CD PROJEKT RED Technical Support! Here you will find help regarding our games and services, as well as answers to frequently asked questions.

30 FPS Performance Target

- Chip: Apple M3 Pro
- RAM: 18 GB
- Preset: Ray Tracing: Medium
- Resolution: 1800x1125 or 1920x1080 (with MetalFX DRS enabled manually)

60 FPS Performance Target

- Chip: Apple M3 Max
- RAM: 36 GB
- Preset: Ray Tracing: Medium
- Resolution: 1800x1125 or 1920x1080 (with MetalFX DRS enabled manually)

That’s not too bad.

exoticspice1

This is really disappointing. This is NOT path tracing. Just basic RT. No wonder Apple didn't go into specifics in the press release.

It looks like current Apple GPUs are simply not meant for AAA gaming. The PPA sucks.

exoticspice1

> Here are the ray tracing recommendations:

Yeah I saw. Just well below my expectations. I just hope they deliver with M5 'cause AMD won't stand still.
